Modern software teams face a paradox: they have more data than ever about their development process, yet visibility into the actual flow of work—from an idea in a backlog to code running in production—remains frustratingly fragmented. Value stream management tools exist to solve this problem.

Value stream management (VSM) originated in lean manufacturing, where it helped factories visualize and optimize the flow of materials. In software delivery, the concept has evolved dramatically. Today, value stream management tools are platforms that connect data across planning, coding, review, CI/CD, and operations to optimize flow from idea to production. They aggregate signals from disparate systems—Jira, GitHub, GitLab, Jenkins, and incident management platforms—into a unified view that reveals where work gets stuck, how long each stage takes, and what’s actually reaching customers.

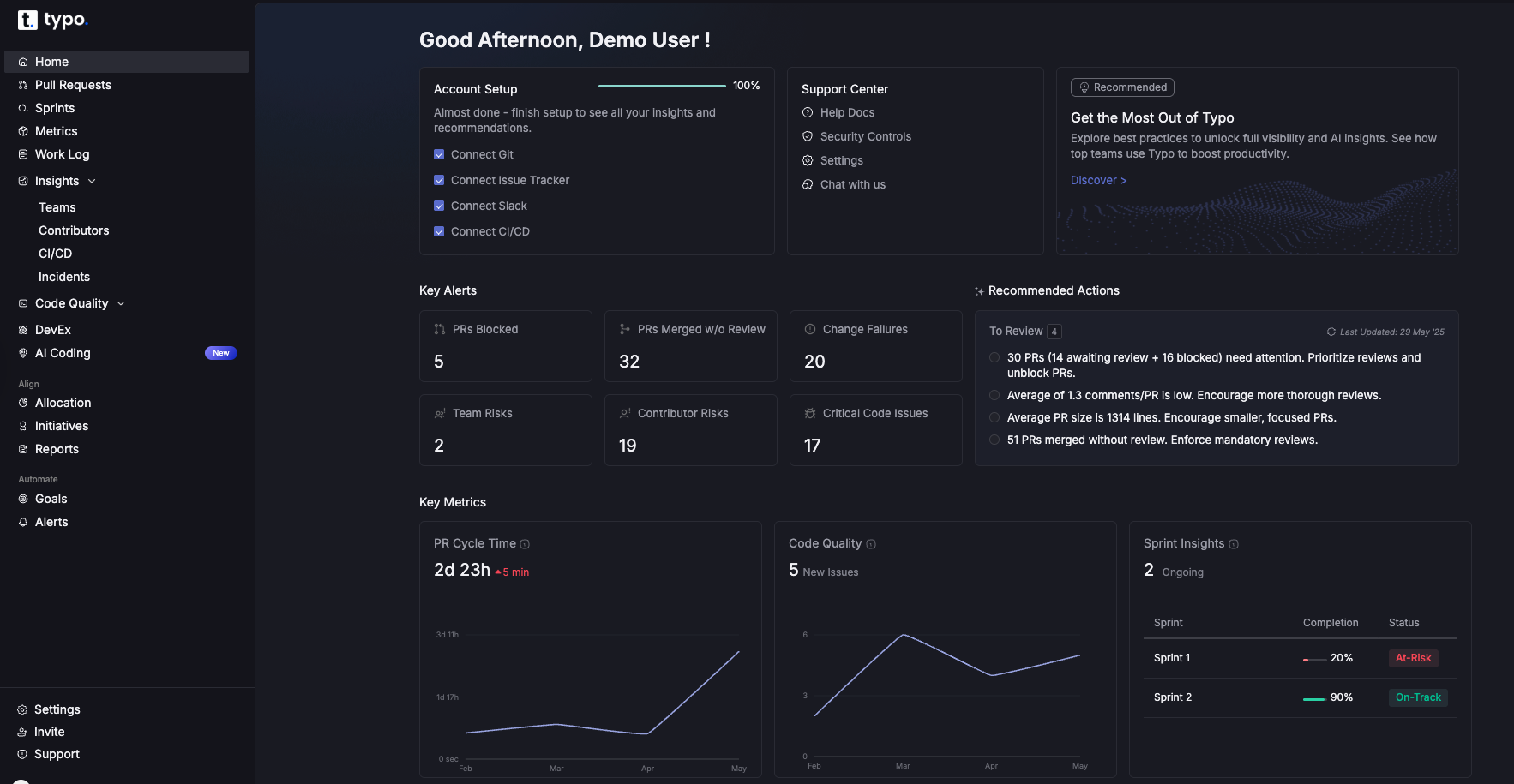

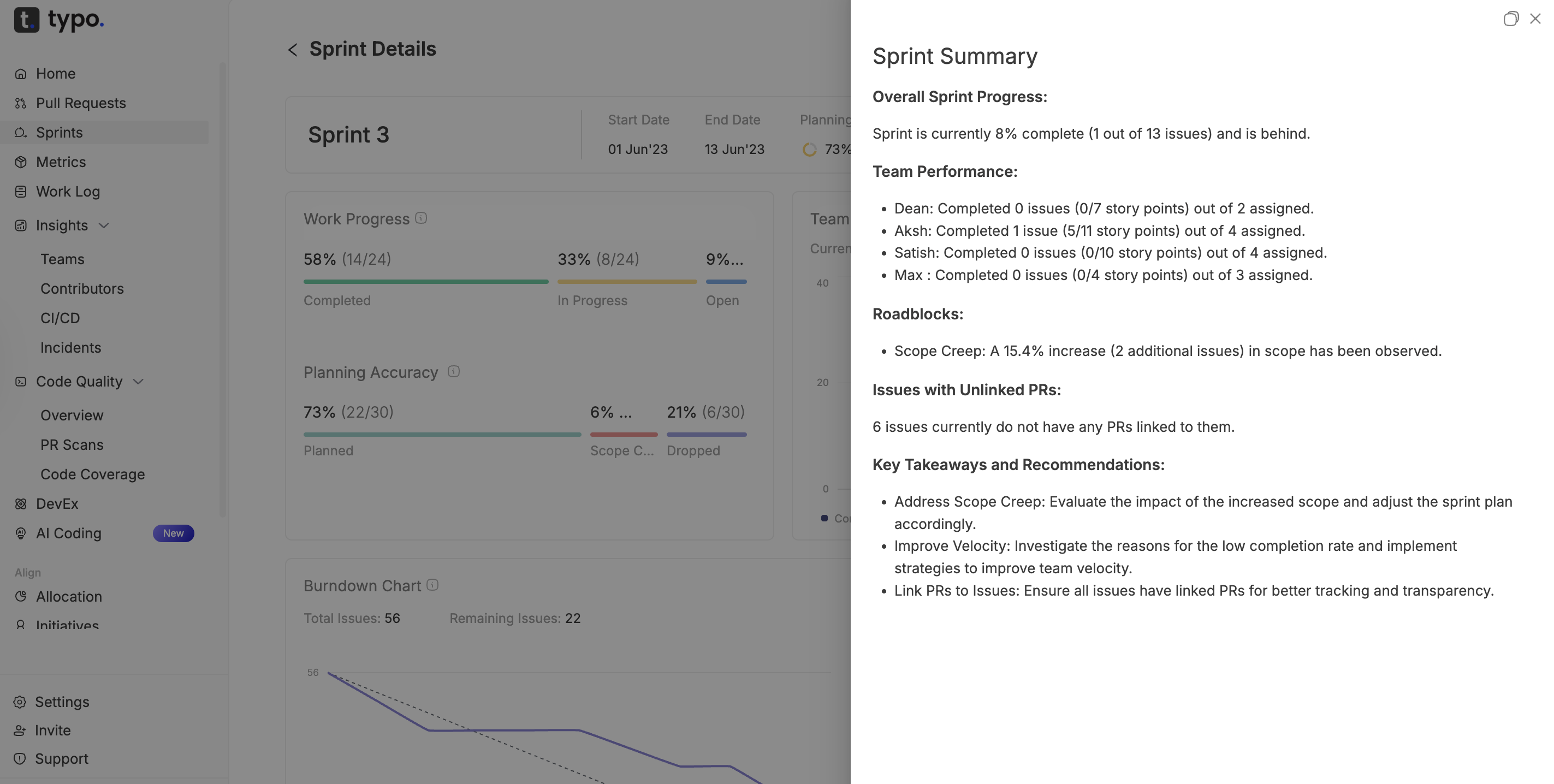

Unlike simple dashboards that display metrics in isolation, value stream management solutions provide end-to-end visibility across the entire software delivery lifecycle. They surface flow metrics, identify bottlenecks, and deliver actionable insights that help engineering leaders make data-driven decision-making a reality rather than an aspiration. Typo is an AI-powered engineering intelligence platform that functions as a value stream management tool for teams using GitHub, GitLab, Jira, and CI/CD systems—combining SDLC visibility, AI-based code reviews, and developer experience insights in a single platform.

Why does this matter now? Several forces have converged to make value stream management (VSM) essential for engineering organizations.

Key takeaways:

The most mature software organizations have shifted their focus from “shipping features” to “delivering measurable customer value.” This distinction matters. A team can deploy code twenty times a day, but if those changes don’t improve customer satisfaction, reduce churn, or drive revenue, the velocity is meaningless.

Value stream management tools bridge this gap by linking engineering work—issues, pull requests, deployments—to business outcomes like activation rates, NPS scores, and ARR impact. Through integrations with project management systems and tagging conventions, stream management platforms can categorize work by initiative, customer segment, or strategic objective. This visibility transforms abstract OKRs into trackable delivery progress.

With Typo, engineering leaders can align initiatives with clear outcomes. For example, a platform team might commit to reducing incident-driven work by 30% over two quarters. Typo tracks the flow of incident-related tickets versus roadmap features, showing whether the team is actually shifting its time toward value creation rather than firefighting.

Centralizing efforts across the entire process:

The real power emerges when teams use VSM tools to prioritize customer-impacting work over low-value tasks. When analytics reveal that 40% of engineering capacity goes to maintenance work that doesn’t affect customer experience, leaders can make informed decisions about where to invest.

Example: A mid-market SaaS company tracked their value streams using a stream management process tied to customer activation. By measuring the cycle time of features tagged “onboarding improvement,” they discovered that faster value delivery—reducing average time from PR merge to production from 4 days to 12 hours—correlated with a 15% improvement in 30-day activation rates. The visibility made the connection between engineering metrics and business outcomes concrete.

How to align work with customer value:

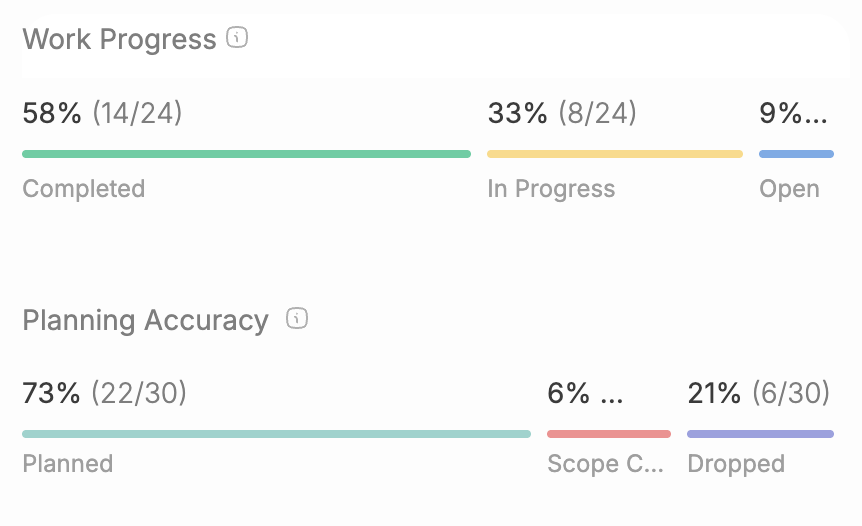

A value stream dashboard presents a single-screen view mapping work from backlog to production, complete with status indicators and key metrics at each stage. Think of it as a real time data feed showing exactly where work sits right now—and where it’s getting stuck.

The most effective flow metrics dashboards show metrics across the entire development process: cycle time (how long work takes from start to finish), pickup time (how long items wait before someone starts), review time, deployment frequency, change failure rate, and work-in-progress across stages. These aren’t vanity metrics; they’re the vital signs of your delivery process.
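To make these definitions concrete, here is a minimal Python sketch that computes median cycle, pickup, and review time from pull request timestamps. The `PullRequest` record and its field names are hypothetical; real data would come from your Git platform and deployment pipeline, and each tool draws the start and end of these intervals slightly differently.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class PullRequest:
    # Hypothetical record shape; field names are illustrative, not a vendor API.
    first_commit_at: datetime
    opened_at: datetime
    first_review_at: datetime
    merged_at: datetime
    deployed_at: datetime


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def flow_metrics(prs: list[PullRequest]) -> dict[str, float]:
    """Median cycle, pickup, and review time (in hours) across a set of PRs."""
    return {
        # Cycle time: first commit until the change is running in production.
        "cycle_time_h": median(hours(p.deployed_at - p.first_commit_at) for p in prs),
        # Pickup time: PR opened until the first review arrives.
        "pickup_time_h": median(hours(p.first_review_at - p.opened_at) for p in prs),
        # Review time: first review until merge.
        "review_time_h": median(hours(p.merged_at - p.first_review_at) for p in prs),
    }


t0 = datetime(2025, 3, 3, 9, 0)
prs = [
    PullRequest(t0, t0 + timedelta(hours=4), t0 + timedelta(hours=28),
                t0 + timedelta(hours=34), t0 + timedelta(hours=40)),
    PullRequest(t0, t0 + timedelta(hours=2), t0 + timedelta(hours=6),
                t0 + timedelta(hours=10), t0 + timedelta(hours=30)),
]
print(flow_metrics(prs))
# {'cycle_time_h': 35.0, 'pickup_time_h': 14.0, 'review_time_h': 5.0}
```

Medians are used rather than means because a few long-running PRs would otherwise dominate the figure.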

Typo’s dashboards aggregate data from Jira (or similar planning tools), Git platforms like GitHub and GitLab, and CI/CD systems to reveal bottlenecks in real time. When a pull request has been sitting in review for three days, it shows up. When a service hasn’t deployed in two weeks despite active development, that anomaly surfaces.
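Flagging stalled work can be as simple as comparing timestamps against thresholds. The sketch below assumes the three-day review and two-week deployment windows from the example above and uses hypothetical dictionaries keyed by PR ID and service name; it illustrates the idea and is not how Typo implements its alerts.

```python
from datetime import datetime, timedelta

# Assumed thresholds taken from the examples in the text; tune per team.
REVIEW_STALE_AFTER = timedelta(days=3)
DEPLOY_IDLE_AFTER = timedelta(days=14)


def stale_reviews(review_requested: dict[str, datetime], now: datetime) -> list[str]:
    """IDs of open PRs that have waited for review longer than the threshold."""
    return sorted(pr for pr, since in review_requested.items()
                  if now - since > REVIEW_STALE_AFTER)


def idle_services(last_deploy: dict[str, datetime],
                  last_commit: dict[str, datetime], now: datetime) -> list[str]:
    """Services with commits inside the idle window but no deployment in it."""
    return sorted(
        svc for svc, deployed in last_deploy.items()
        if now - deployed > DEPLOY_IDLE_AFTER
        and svc in last_commit
        and now - last_commit[svc] <= DEPLOY_IDLE_AFTER
    )


# Hypothetical snapshot of open reviews and service activity.
now = datetime(2025, 6, 16)
open_reviews = {"PR-101": datetime(2025, 6, 12), "PR-102": datetime(2025, 6, 15)}
last_deploy = {"billing": datetime(2025, 5, 20), "search": datetime(2025, 6, 10)}
last_commit = {"billing": datetime(2025, 6, 14), "search": datetime(2025, 6, 15)}
print(stale_reviews(open_reviews, now))                  # ['PR-101']
print(idle_services(last_deploy, last_commit, now))      # ['billing']
```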

Drill-down capabilities matter enormously. A VP of Engineering needs the organizational view: are we improving quarter over quarter? A team lead needs to see their specific repositories. An individual contributor wants to know which of their PRs need attention. Modern stream management software supports all these perspectives, enabling teams to move from org-level views to specific pull requests that are blocking delivery.

Comparison use cases like benchmarking squads or product areas are valuable, but a warning: using metrics to blame individuals destroys trust and undermines the entire value stream management process. Focus on systems, not people.

Essential widgets for a modern VSM dashboard:

Typo surfaces these value stream metrics automatically and flags anomalies—like sudden spikes in PR review times after introducing a new process or approval requirement. This lets teams catch a process change that is slowing delivery early, before the slowdown becomes the norm.
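One common way to flag a spike like this is a trailing z-score: compare each week against the mean and standard deviation of the weeks before it. This is a minimal sketch with assumed defaults (a four-week window and a threshold of three standard deviations) and hypothetical data, not a description of Typo's anomaly detection.

```python
from statistics import mean, stdev


def review_time_spikes(weekly_median_hours: list[float], window: int = 4,
                       z_threshold: float = 3.0) -> list[int]:
    """Indexes of weeks whose median review time sits more than `z_threshold`
    standard deviations above the mean of the preceding `window` weeks."""
    spikes = []
    for i in range(window, len(weekly_median_hours)):
        history = weekly_median_hours[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            continue  # flat history: no spread to compare against
        if (weekly_median_hours[i] - mu) / sigma > z_threshold:
            spikes.append(i)
    return spikes


# Hypothetical weekly median review times; a new approval step lands in week 5.
weekly = [10, 12, 11, 9, 10, 30, 11]
print(review_time_spikes(weekly))  # [5]
```

A trailing window adapts as the baseline drifts, at the cost of needing a few weeks of history before it can flag anything.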

DORA (DevOps Research and Assessment) established four key metrics that have become the industry standard for measuring software delivery performance: deployment frequency, lead time for changes, mean time to restore, and change failure rate. These metrics emerged from years of research correlating specific practices with organizational performance.

Stream management solutions automatically collect DORA metrics without requiring manual spreadsheets or data entry. By connecting to Git repositories, CI/CD pipelines, and incident management tools, they generate accurate measurements based on actual events—commits merged, deployments executed, incidents opened and closed.
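As an illustration of deriving DORA metrics from events rather than spreadsheets, the sketch below computes all four from deployment and incident records. The `Deployment` and `Incident` shapes are assumptions; in practice, deciding which deployments caused a failure depends on how incidents and rollbacks are linked in your tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean, median


@dataclass
class Deployment:
    deployed_at: datetime
    commit_times: list[datetime]  # commit timestamps of the changes it shipped
    caused_failure: bool          # e.g. linked to an incident or a rollback


@dataclass
class Incident:
    opened_at: datetime
    resolved_at: datetime


def dora_metrics(deploys: list[Deployment], incidents: list[Incident],
                 period_days: float) -> dict[str, float]:
    if not deploys:
        raise ValueError("no deployments in the period")
    lead_times = [(d.deployed_at - c).total_seconds() / 3600
                  for d in deploys for c in d.commit_times]
    restore_times = [(i.resolved_at - i.opened_at).total_seconds() / 3600
                     for i in incidents]
    return {
        "deploys_per_day": len(deploys) / period_days,
        "lead_time_h": median(lead_times),  # commit to production
        "change_failure_rate": sum(d.caused_failure for d in deploys) / len(deploys),
        "mttr_h": mean(restore_times) if restore_times else float("nan"),
    }


# Hypothetical four-day window.
t = datetime(2025, 4, 1)
deploys = [
    Deployment(t + timedelta(hours=10), [t, t + timedelta(hours=6)], False),
    Deployment(t + timedelta(days=1, hours=10), [t + timedelta(days=1)], True),
    Deployment(t + timedelta(days=2, hours=10), [t + timedelta(days=2, hours=8)], False),
    Deployment(t + timedelta(days=3, hours=10), [t + timedelta(days=2, hours=22)], False),
]
incidents = [
    Incident(t + timedelta(days=1, hours=11), t + timedelta(days=1, hours=13)),
    Incident(t + timedelta(days=3, hours=12), t + timedelta(days=3, hours=16)),
]
print(dora_metrics(deploys, incidents, period_days=4))
# {'deploys_per_day': 1.0, 'lead_time_h': 10.0, 'change_failure_rate': 0.25, 'mttr_h': 3.0}
```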

Typo’s approach to DORA includes out-of-the-box dashboards showing all four metrics with historical trends spanning months and quarters. Teams can see not just their current state but their trajectory. Are deployments becoming more frequent while failure rates stay stable? That’s a sign of genuine improvement efforts paying off.

For engineering leaders, DORA metrics provide a common language for communicating performance to business stakeholders. Instead of abstract discussions about technical debt or velocity, you can report that deployment frequency increased 3x between Q1 and Q3 2025 while maintaining stable failure rates—a clear signal that continuous delivery investments are working.

DORA metrics are a starting point, not a destination. Mature value stream management implementations complement them with additional flow, quality, and developer experience metrics.

How leaders use DORA metrics to drive decisions:

See engineering metrics for a boardroom perspective.

Combining quantitative data (cycle time, failures) with qualitative data (developer feedback, perceived friction) gives a fuller picture of flow efficiency measures. Numbers tell you what’s happening; surveys tell you why.

Typo includes developer experience surveys and correlates responses with delivery metrics to uncover root causes of burnout or frustration. When a team reports low satisfaction and analytics reveal they spend 60% of time on incident response, the path forward becomes clear.

Value stream analytics is the analytical layer on top of raw metrics, helping teams understand where time is spent and where work gets stuck. Metrics tell you that cycle time is 8 days; analytics tells you that 5 of those days are spent waiting for review.

When analytics are sliced by team, repo, project, or initiative, they reveal systemic issues. Perhaps one service has consistently slow reviews because its codebase is complex and few people understand it. Maybe another team’s PRs are oversized, taking days to review properly. Or flaky tests might cause deployment failures that require manual intervention. Learn more about the limitations of Jira dashboards and how integrating with Git can address these systemic issues.

Typo analyzes each phase of the SDLC—coding, review, testing, deploy—and quantifies their contribution to overall cycle time. This visibility enables targeted process improvements rather than generic mandates. If review time is your biggest constraint, doubling down on CI/CD automation won’t help.
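Quantifying each phase's contribution amounts to summing time per phase and taking shares; the largest share marks the current constraint. In this hypothetical sketch, each work item is a dictionary of phase durations in hours, and the first item mirrors the 8-day example above, with 5 days spent waiting for review.

```python
from collections import defaultdict


def phase_breakdown(items: list[dict[str, float]]) -> tuple[dict[str, float], str]:
    """Share of total cycle time spent in each phase, plus the largest phase."""
    totals = defaultdict(float)
    for item in items:
        for phase, hours in item.items():
            totals[phase] += hours
    grand_total = sum(totals.values())
    shares = {phase: t / grand_total for phase, t in totals.items()}
    # The phase with the largest share is the current constraint.
    constraint = max(shares, key=shares.get)
    return shares, constraint


# Hypothetical per-item phase durations in hours.
items = [
    {"coding": 24, "review": 120, "testing": 24, "deploy": 24},
    {"coding": 48, "review": 96, "testing": 24, "deploy": 24},
]
shares, constraint = phase_breakdown(items)
print(constraint, shares)
```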

Analytics also guide experiments. A team might trial smaller PRs in March-April 2025 and measure the change in review time and defect rates. Did breaking work into smaller chunks reduce cycle time? Did it affect quality? Comparing the before-and-after data gives evidence-based answers to these questions.

Visual patterns worth analyzing:

The connection to continuous improvement is direct. Teams use analytics to run monthly or quarterly reviews and decide the next constraint to tackle. This echoes Lean thinking and the Theory of Constraints: find the bottleneck, improve it, then find the next one. Organizations that drive continuous improvement using this approach see 20-50% reductions in cycle times, according to industry benchmarks.

Typo can automatically spot these patterns and suggest focus areas—flagging repos with consistently slow reviews or high failure rates after deploy—so teams know where to start without manual analysis.

Value stream forecasting predicts delivery timelines, capacity, and risk based on historical flow metrics and current work-in-progress. Instead of relying on developer estimates or story point calculations, it uses actual delivery data to project when work will complete.

AI-powered tools analyze past work—typically the last 6-12 months of cycle time data—to forecast when a specific epic, feature, or initiative is likely to be delivered. The key difference from traditional estimation: these forecasts improve automatically as more data accumulates and patterns emerge.

Typo uses machine learning to provide probabilistic forecasts. Rather than saying “this will ship on March 15,” it might report “there’s an 80% confidence this initiative will ship before March 15, and 95% confidence it will ship before March 30.” This probabilistic approach better reflects the inherent uncertainty in software development.
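This is not Typo's model; as an illustration of probabilistic forecasting, the sketch below uses a common alternative technique, Monte Carlo simulation that resamples historical weekly throughput. It reports how many weeks the remaining items take at 80% and 95% confidence. The throughput history is hypothetical.

```python
import random


def forecast_weeks(weekly_throughput: list[int], remaining_items: int,
                   confidence_levels: tuple[float, ...] = (0.80, 0.95),
                   trials: int = 10_000, seed: int = 7) -> dict[float, int]:
    """Monte Carlo forecast: weeks needed to finish `remaining_items`
    at each confidence level, by resampling historical weekly throughput."""
    if remaining_items <= 0:
        return {c: 0 for c in confidence_levels}
    if max(weekly_throughput) <= 0:
        raise ValueError("history contains no completed work")
    rng = random.Random(seed)
    outcomes = []
    for _ in range(trials):
        done = weeks = 0
        while done < remaining_items:
            done += rng.choice(weekly_throughput)  # replay a random past week
            weeks += 1
        outcomes.append(weeks)
    outcomes.sort()
    # At least `c` of the simulated runs finished within this many weeks.
    return {c: outcomes[min(int(c * trials), trials - 1)] for c in confidence_levels}


history = [3, 5, 4, 6, 2, 5, 4, 3]  # hypothetical items completed per week
print(forecast_weeks(history, remaining_items=30))
```

Adding the resulting week counts to a start date with `timedelta(weeks=n)` turns them into calendar forecasts like the ones described above, and rerunning the simulation as new weeks complete lets the forecast update itself.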

Use cases for engineering leaders:

Traditional planning relies on manual estimation and story points, which are notoriously inconsistent across teams and individuals. Value stream management tools bring evidence-based forecasting using real delivery patterns—what actually happened, not what people hoped would happen.

Typo surfaces early warnings when current throughput cannot meet a committed deadline, prompting scope negotiations or staffing changes before problems compound.

Value stream mapping for software visualizes how work flows from idea to production, including the tools involved, the teams responsible, and the wait states between handoffs. It’s the practice that underlies stream visualization in modern engineering organizations.

Digital VSM tools replace ad-hoc whiteboard sessions with living maps connected to real data from Jira, Git, CI/CD, and incident systems. Instead of a static diagram that’s outdated within weeks, you have a dynamic view that reflects current reality. This is stream mapping updated for the complexity of modern software development.

Value stream management platforms visually highlight handoffs, queues, and rework steps that generate friction. When a deployment requires three approval stages, each creating wait time, the visualization makes that cost visible. When work bounces between teams multiple times before shipping, the rework pattern emerges. These friction points show up directly in DORA metrics such as lead time for changes, which offer a deeper view of software delivery performance.

The organizational benefits extend beyond efficiency. Visualization creates shared understanding across cross-functional teams, improves collaboration by making dependencies explicit, and clarifies ownership of each stage. When everyone sees the same picture, alignment becomes easier.

For examples of visualizing engineering performance data, see the DORA Lab #02 episode featuring Marian Kamenistak on engineering metrics.

Visualization alone is not enough. It must be paired with outcome goals and continuous improvement cycles. A beautiful map of a broken process is still a broken process.

Software delivery typically has two dominant flows: the “happy path” (features and enhancements) and the “recovery stream” (incidents, hotfixes, and urgent changes). Treating them identically obscures important differences in how work should move.

A VSM tool should visualize both value streams distinctly, with different metrics and priorities for each. Feature work optimizes for faster value delivery while maintaining quality. Incident response optimizes for stability and speed to resolution.

Example: Track lead time for new capabilities in a product area—targeting continuous improvement toward shorter cycles. Separately, track MTTR for production outages in critical services—targeting reliability and rapid recovery. The desired outcomes differ, so the measurements should too.

Typo can differentiate incident-related work from roadmap work based on labels, incident links, or branches, giving leaders full visibility into where engineering time is really going. This prevents the common problem where incident overload is invisible because it’s mixed into general delivery metrics.
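A rule-based classifier along these lines separates the two streams. The label set, branch prefixes, and `WorkItem` fields are assumed conventions for illustration; teams would substitute their own.

```python
from dataclasses import dataclass, field
from typing import Optional

# Assumed conventions; replace with your own labels and branch prefixes.
INCIDENT_LABELS = {"incident", "hotfix", "outage"}
INCIDENT_BRANCH_PREFIXES = ("hotfix/", "incident/")


@dataclass
class WorkItem:
    key: str
    labels: set[str] = field(default_factory=set)
    branch: str = ""
    linked_incident: Optional[str] = None


def stream_of(item: WorkItem) -> str:
    """Classify an item as 'recovery' (incident work) or 'feature' (roadmap work)."""
    labels = {label.lower() for label in item.labels}
    if (item.linked_incident
            or labels & INCIDENT_LABELS
            or item.branch.startswith(INCIDENT_BRANCH_PREFIXES)):
        return "recovery"
    return "feature"


def recovery_share(items: list[WorkItem]) -> float:
    """Fraction of items in the recovery stream (by count, not by time)."""
    if not items:
        return 0.0
    return sum(stream_of(i) == "recovery" for i in items) / len(items)


items = [
    WorkItem("PAY-1", labels={"feature"}, branch="feat/checkout-v2"),
    WorkItem("PAY-2", labels={"Incident"}, branch="fix/timeout"),
    WorkItem("PAY-3", branch="hotfix/null-session"),
    WorkItem("PAY-4", linked_incident="INC-42"),
]
print([stream_of(i) for i in items], recovery_share(items))
```

Counting items is the simplest measure; weighting each item by the time spent on it would better reflect how engineering capacity is actually split.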

Mapping information flow—Slack conversations, ticket comments, documentation reviews—not just code flow, exposes communication breakdowns and approval delays. A pull request might be ready for review, but if the notification gets lost in Slack noise, it sits idle.

Example: A release process required approval from security, QA, and the production SRE before deployment. Each approval added an average of 6 hours of wait time. By removing one approval stage (shifting security review to an earlier, async process), the team improved cycle time by nearly a full day.

Typo correlates wait times in different stages—“in review,” “awaiting QA,” “pending deployment”—with overall cycle time, helping teams quantify the impact of each handoff. This turns intuitions about slow processes into concrete data that justifies streamlining them.
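Time in each status falls out of a work item's transition history. The sketch assumes a hypothetical list of `(timestamp, new_status)` pairs, similar to the change history many issue trackers keep; the status names echo the ones above.

```python
from collections import defaultdict
from datetime import datetime


def time_in_status(transitions: list[tuple[datetime, str]]) -> dict[str, float]:
    """Hours spent in each status, given (timestamp, new_status) pairs in
    chronological order. The last status is still open, so it is not counted."""
    hours = defaultdict(float)
    for (start, status), (end, _next) in zip(transitions, transitions[1:]):
        hours[status] += (end - start).total_seconds() / 3600
    return dict(hours)


def average_wait(histories: list[list[tuple[datetime, str]]]) -> dict[str, float]:
    """Mean hours per item in each status across many work items."""
    if not histories:
        return {}
    totals = defaultdict(float)
    for history in histories:
        for status, h in time_in_status(history).items():
            totals[status] += h
    return {status: t / len(histories) for status, t in totals.items()}


# Hypothetical status history for one release.
history = [
    (datetime(2025, 5, 5, 9), "in progress"),
    (datetime(2025, 5, 6, 9), "in review"),
    (datetime(2025, 5, 6, 15), "awaiting QA"),
    (datetime(2025, 5, 6, 21), "pending deployment"),
    (datetime(2025, 5, 7, 3), "done"),
]
print(time_in_status(history))
# {'in progress': 24.0, 'in review': 6.0, 'awaiting QA': 6.0, 'pending deployment': 6.0}
```

A status that recurs, for example when work bounces back to review, accumulates time across visits, which is the rework cost worth surfacing.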

Handoffs to analyze:

Learn more about how you can measure work patterns and boost developer experience with Typo.

Visualizations and metrics only matter if they lead to specific improvement experiments and measurable outcomes. A dashboard that no one acts on is just expensive decoration.

The improvement loop is straightforward: identify constraint → design experiment → implement change for a fixed period (4-6 weeks) → measure impact → decide whether to adopt permanently. This iterative process respects the complexity of software systems while maintaining momentum toward desired outcomes.

Selecting a small number of focused initiatives works better than trying to improve everything at once. “Reduce PR review time by 30% this quarter” is actionable. “Improve engineering efficiency” is not. Focus on initiatives within the team’s control that connect to business value.
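Checking an experiment against a target such as "reduce PR review time by 30%" can be a before-and-after comparison of medians. The review times below are hypothetical.

```python
from statistics import median


def evaluate_experiment(baseline: list[float], trial: list[float],
                        target_reduction: float) -> tuple[float, bool]:
    """Relative reduction in the median, and whether it meets the target."""
    before, after = median(baseline), median(trial)
    reduction = (before - after) / before
    return reduction, reduction >= target_reduction


# Hypothetical PR review times (hours) before and during a 4-6 week trial.
baseline = [20, 30, 26, 18, 40]
trial = [14, 16, 20, 12, 25]
reduction, met = evaluate_experiment(baseline, trial, target_reduction=0.30)
print(f"{reduction:.0%} reduction, target met: {met}")
# 38% reduction, target met: True
```

Medians keep a single outlier PR from swinging the result, but small samples are still noisy.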

Actions tied to specific metrics:

Involve cross-functional stakeholders—product, SRE, security—in regular value stream reviews. Making improvements part of a shared ritual encourages cross-functional collaboration and ensures changes stick. Effective stream management requires organizational commitment beyond the engineering team.

Example journey: A 200-person engineering organization adopted a value stream management platform in early 2025. At baseline, their average cycle time was 11 days, deployment frequency was twice weekly, and developer satisfaction scored 6.2/10. By early 2026, after three improvement cycles focusing on review time, WIP limits, and deployment automation, they achieved 4-day cycle time, daily deployments, and 7.8 satisfaction. The longitudinal analysis in Typo made these gains visible and tied them to specific investments.

Selecting a stream management platform is a significant decision for engineering organizations. The right tool accelerates improvement efforts; the wrong one becomes shelfware.

Evaluation criteria:

Typo differentiates itself with AI-based code reviews, AI impact measurement (tracking how tools like Copilot affect delivery speed and quality), and integrated developer experience surveys—capabilities that go beyond standard VSM features. For teams adopting AI coding assistants, understanding their impact on flow efficiency measures is increasingly critical.

Before committing, run a time-boxed pilot (60-90 days) with 1-2 teams. The goal: validate whether the tool provides actionable insights that drive actual behavior change, not just more charts.

Homegrown dashboards vs. specialized platforms:

Ready to see your own value stream metrics? Start Free Trial to connect your tools and baseline your delivery performance within days, not months. Or Book a Demo to walk through your specific toolchain with a Typo specialist.

Week 1: Connect tools

Weeks 2-3: Baseline metrics

Week 4: Choose initial outcomes

Weeks 5-8: Run first improvement experiment

Weeks 9-10: Review results

Change management tips:

Value stream management tools transform raw development data into a strategic advantage when paired with consistent improvement practices and organizational commitment. The benefits of value stream management extend beyond efficiency—they create alignment between engineering execution and business objectives, encourage cross-functional collaboration, and provide the visibility needed to make confident decisions about where to invest.

The difference between teams that ship predictably and those that struggle often comes down to visibility and the discipline to act on what they see. By implementing a value stream management process grounded in real data, you can move from reactive firefighting to proactively optimizing flow across your entire software delivery lifecycle.

Start your free trial with Typo to see your value streams clearly—and start shipping with confidence.

Value Stream Management (VSM) is a foundational approach for organizations seeking to optimize value delivery across the entire software development lifecycle. At its core, value stream management is about understanding and orchestrating the flow of work—from the spark of idea generation to the moment a solution reaches the customer. By applying VSM principles, teams can visualize the entire value stream, identify bottlenecks, and drive continuous improvement in their delivery process.

The value stream mapping process is central to VSM, providing a clear, data-driven view of how value moves through each stage of development. This stream mapping enables organizations to pinpoint inefficiencies, streamline operations, and ensure that every step in the process contributes to business objectives and customer satisfaction. Effective stream management requires not only the right tools but also a culture of collaboration and a commitment to making data-driven decisions.

By embracing value stream management, organizations empower cross-functional teams to align their efforts, optimize flow, and deliver value more predictably. The result is a more responsive, efficient, and customer-focused delivery process—one that adapts to change and continuously improves over time.

A value stream represents the complete sequence of activities that transform an initial idea into a product or service delivered to the customer. In software delivery, understanding value streams means looking beyond individual tasks or teams and focusing on the entire value stream—from concept to code, and from deployment to customer feedback.

Value stream mapping is a powerful technique for visualizing this journey. By creating a visual representation of the value stream, teams can see where work slows down, where handoffs occur, and where opportunities for improvement exist. This stream mapping process helps organizations measure flow, track progress, and ensure that every step is aligned with desired outcomes.

When teams have visibility into the entire value stream, they can identify bottlenecks, optimize delivery speed, and improve customer satisfaction. Value stream mapping not only highlights inefficiencies but also uncovers areas where automation, process changes, or better collaboration can make a significant impact. Ultimately, understanding value streams is essential for any organization committed to streamlining operations and delivering high-quality software at pace.

The true power of value stream management lies in its ability to connect day-to-day software delivery with broader business outcomes. By focusing on the value stream management process, organizations ensure that every improvement effort is tied to customer value and strategic objectives.

Key performance indicators such as lead time, deployment frequency, and cycle time provide measurable insights into how effectively teams are delivering value. When cross-functional teams share a common understanding of the value stream, they can collaborate to identify areas for streamlining operations and optimizing flow. This alignment is crucial for driving customer satisfaction and achieving business growth.

Stream management is not just about tracking metrics—it’s about using those insights to make informed decisions that enhance customer value and support business objectives. By continuously refining the delivery process and focusing on outcomes that matter, organizations can improve efficiency, accelerate time to market, and ensure that software delivery is a true driver of business success.

Adopting value stream management is not without its hurdles. Many organizations face challenges such as complex processes, multiple tools that don’t communicate, and data silos that obscure the flow of work. These obstacles can make it difficult to measure flow metrics, identify bottlenecks, and achieve faster value delivery.

Encouraging cross-functional collaboration and fostering a culture of continuous improvement are also common pain points. Without buy-in from all stakeholders, improvement efforts can stall, and the benefits of value stream management solutions may not be fully realized. Additionally, organizations may struggle to maintain a customer-centric focus, losing sight of customer value amid the complexity of their delivery processes.

To overcome these challenges, it’s essential to leverage stream management solutions that break down data silos, integrate multiple tools, and provide actionable insights. By prioritizing data-driven decision making, optimizing flow, and streamlining processes, organizations can unlock the full potential of value stream management and drive meaningful business outcomes.

Modern engineering teams that excel in software delivery consistently apply value stream management principles and foster a culture of continuous improvement. The most effective teams visualize the entire value stream, measure key metrics such as lead time and deployment frequency, and use these insights to identify and address bottlenecks.

Cross-functional collaboration is at the heart of successful stream management. By bringing together diverse perspectives and encouraging open communication, teams can drive continuous improvement and deliver greater customer value. Data-driven decision making ensures that improvement efforts are targeted and effective, leading to faster value delivery and better business outcomes.

Adopting value stream management solutions enables teams to streamline operations, improve flow efficiency, and reduce lead time. The benefits of value stream management are clear: increased deployment frequency, higher customer satisfaction, and a more agile response to changing business needs. By embracing these best practices, modern engineering teams can deliver on their promises, achieve strategic objectives, and create lasting value for their customers and organizations.

A value stream map is more than just a diagram—it’s a strategic tool that brings clarity to your entire software delivery process. By visually mapping every step from idea generation to customer delivery, engineering teams gain a holistic view of how value flows through their organization. This stream mapping process is essential for identifying bottlenecks, eliminating waste, and ensuring that every activity contributes to business objectives and customer satisfaction.

Continuous Delivery (CD) is at the heart of modern software development, enabling teams to release new features and improvements to customers quickly and reliably. By integrating value stream management (VSM) tools into the continuous delivery pipeline, organizations gain end-to-end visibility across the entire software delivery lifecycle. This integration empowers teams to identify bottlenecks, improve flow efficiency, and make data-driven decisions that accelerate value delivery.

With VSM tools, engineering teams can automate the delivery process, reducing manual handoffs and minimizing lead time from code commit to production deployment. Real-time dashboards and analytics provide actionable insights into key performance indicators such as deployment frequency, flow time, and cycle time, allowing teams to continuously monitor and improve their delivery process. By surfacing flow metrics and highlighting areas for improvement, VSM tools drive continuous improvement and help teams achieve higher deployment frequency and faster feedback loops.

The combination of continuous delivery and VSM helps ensure that every release is aligned with customer value and business objectives. Teams can track the impact of process changes, measure flow efficiency, and ensure that improvements translate into tangible business outcomes. Ultimately, integrating VSM tools with continuous delivery practices enables organizations to deliver software with greater speed, quality, and confidence—turning the promise of seamless releases into a reality.

Organizations across industries are realizing transformative results by adopting value stream management (VSM) tools to optimize their software delivery processes. For example, a leading financial services company implemented VSM to gain visibility into their delivery process, resulting in a 50% reduction in lead time and a 30% increase in deployment frequency. By leveraging stream management solutions, they were able to identify bottlenecks, streamline operations, and drive continuous improvement across cross-functional teams.

In another case, a major retailer turned to VSM tools to enhance customer experience and satisfaction. By mapping their entire value stream and focusing on flow efficiency measures, they achieved a 25% increase in customer satisfaction within just six months. The ability to track key metrics and align improvement efforts with business outcomes enabled them to deliver value to customers faster and more reliably.

These real-world examples highlight how value stream management empowers organizations to improve delivery speed, reduce waste, and achieve measurable business outcomes. By embracing stream management and continuous improvement, companies can transform their software delivery, enhance customer satisfaction, and maintain a competitive edge in today’s fast-paced digital landscape.

Achieving excellence in value stream management (VSM) requires ongoing learning, the right tools, and access to a vibrant community of practitioners. For organizations and key stakeholders looking to deepen their expertise, a wealth of resources is available to support continuous improvement and optimize the entire value stream.

By leveraging these resources, organizations can empower cross-functional teams, break down data silos, and foster a culture of data-driven decision making. Continuous engagement with the VSM community and ongoing investment in stream management software ensure that improvement efforts remain aligned with business objectives and customer value—driving sustainable success across the entire value stream.

Software development life cycle phases are the structured stages that guide software projects from initial planning through deployment and maintenance. These seven key phases provide a systematic framework that transforms business requirements into high-quality software while maintaining control over costs, timelines, and project scope.

Understanding and properly executing these phases ensures systematic, high-quality software delivery that aligns with business objectives and user requirements.

What This Guide Covers

This guide examines the seven core SDLC phases, their specific purposes and deliverables, and proven implementation strategies. We cover traditional and agile approaches to phase management but exclude specific programming languages, tools, or vendor-specific methodologies.

Who This Is For

This guide is designed for software developers, project managers, team leads, and stakeholders involved in software projects. Whether you’re managing your first software development project or looking to optimize existing development processes, you’ll find actionable frameworks for improving project outcomes.

Why This Matters

Proper SDLC phase execution reduces project risks by 40% according to industry research, ensures on-time delivery, and creates alignment between development teams and business stakeholders. Organizations following structured SDLC processes report 45% fewer critical defects compared to those using ad hoc development approaches.

What You’ll Learn:

The software development life cycle (SDLC) is the process software engineers use to plan, design, develop, test, and maintain software applications. Its phases are structured checkpoints that transform business ideas into functional software through systematic progression. Each development stage addresses a specific aspect of software creation, from requirements gathering to deployment and maintenance, and serves as a quality gate that ensures teams complete essential work before advancing to the next stage.

Phase-based development reduces project complexity by breaking large initiatives into manageable segments. This structured process enables quality control at each stage and provides stakeholders with clear visibility into project progress and decision points.

The seven key phases interconnect through defined deliverables and feedback loops, where outputs from each previous phase become inputs for the following development stage.

Definition: The planning phase establishes project scope, objectives, and resource requirements through collaborative stakeholder analysis. This initial development stage defines what success looks like and creates the foundation for all project decisions.

Key deliverables: Project charter documenting business objectives, initial requirements gathering from stakeholders, feasibility assessment covering technical and financial constraints, and comprehensive resource allocation plans detailing team structure and timeline.

Connection to overall SDLC: This phase sets the foundation for all subsequent phases by defining measurable success criteria and establishing the framework for requirements analysis and system design.

Definition: The requirements analysis phase involves detailed gathering and documentation of functional and non-functional requirements that define what the software solution must accomplish.

Key deliverables: Software Requirements Specification (SRS) document containing detailed system requirements, user stories with acceptance criteria for agile development teams, system constraints covering performance and security needs, and traceability matrices linking requirements to business objectives.

Building on planning: This phase transforms high-level project goals from the planning phase into specific, measurable requirements that guide system design and development work.
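A traceability matrix is, at its core, a mapping from each requirement to the business objective it serves and to the tests that verify it. The sketch below assumes hypothetical IDs (`REQ-1`, `OBJ-checkout`, `TC-1`) and a plain dictionary layout; real teams usually keep this mapping in a requirements or test-management tool. It reports the two gaps a matrix is meant to expose: requirements with no objective and requirements with no test.

```python
# Hypothetical requirements, each linked to a business objective and to tests.
REQUIREMENTS = {
    "REQ-1": {"objective": "OBJ-checkout", "tests": ["TC-1", "TC-2"]},
    "REQ-2": {"objective": "OBJ-checkout", "tests": []},
    "REQ-3": {"objective": None, "tests": ["TC-3"]},
}


def matrix_by_objective(reqs):
    """Group requirement IDs under the business objective they trace to."""
    matrix = {}
    for req_id, link in reqs.items():
        matrix.setdefault(link["objective"] or "UNTRACED", []).append(req_id)
    return matrix


def trace_gaps(reqs):
    """Return (requirements without an objective, requirements without tests)."""
    no_objective = sorted(r for r, link in reqs.items() if not link["objective"])
    untested = sorted(r for r, link in reqs.items() if not link["tests"])
    return no_objective, untested


if __name__ == "__main__":
    print(matrix_by_objective(REQUIREMENTS))
    print(trace_gaps(REQUIREMENTS))
```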

Definition: The design phase creates technical blueprints that translate requirements into an implementable system architecture, defining how the software solution will function at a technical level. Software engineering plays a critical role at this stage: the development team defines the framework, functionality, structure, and interfaces that determine the software's efficiency, usability, and readiness for integration.

Key deliverables: System architecture diagrams showing component relationships, database design with entity relationships and data flows, UI/UX mockups for user interfaces, and detailed technical specifications guiding implementation teams.

Unlike previous phases: This development stage shifts focus from defining “what” the system should do to designing “how” the system will work technically, bridging requirements and actual software development.

Transition: With design specifications complete, development teams can begin the implementation phase where designs become functional code.

The transition from design to development represents a critical shift where technical specifications guide the creation of actual software components, followed by systematic validation to ensure quality standards.

Definition: The implementation phase converts design documents into functional code using the selected programming languages and development frameworks, turning technical specifications into working software modules. AI can support this phase by automating repetitive tasks and predicting potential issues in the development process.

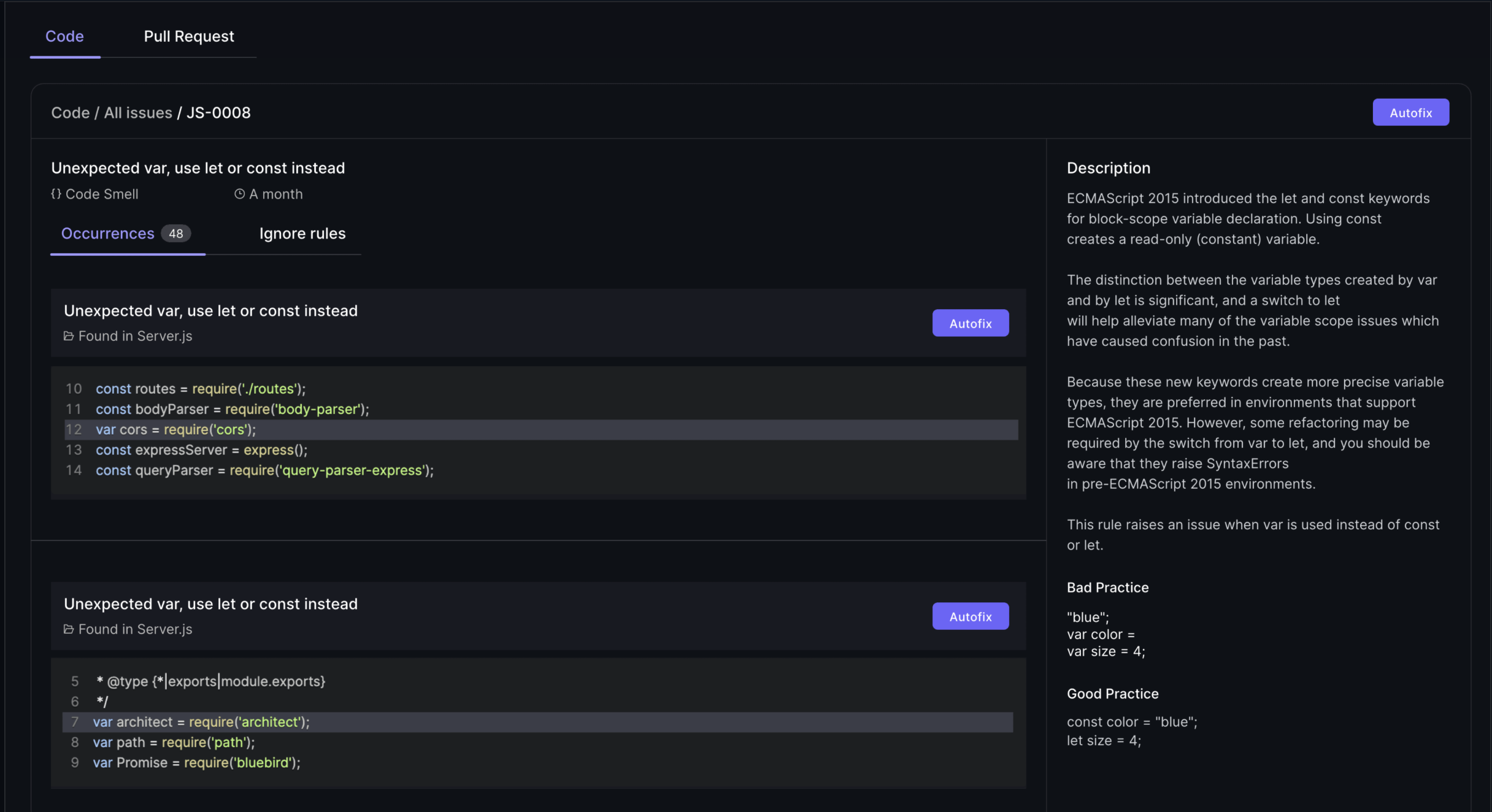

Key activities: Development teams break down system modules into manageable coding tasks with clear deadlines and dependencies. Software engineers write code following established coding standards while implementing version control processes to maintain code quality and enable team collaboration. AI-powered code reviews can streamline review and feedback, and AI can generate reusable code snippets to assist developers.

Quality management: Code review processes ensure that multiple developers validate each component before integration, while continuous integration practices automatically test code changes as development progresses.

Definition: The testing phase provides systematic verification that software components meet established requirements through comprehensive unit testing, integration testing, system testing, and user acceptance testing. Software testing is a critical component of the SDLC, playing a key role in quality assurance and ensuring the reliability of the software before deployment.

Testing process: Quality assurance teams identify bugs through structured test scenarios, document defects with reproduction steps, and work with development teams to fix the bugs found during testing. This phase validates not only functional requirements but also performance benchmarks, security standards (through security testing that identifies vulnerabilities and confirms the software's robustness), and usability criteria.

Quality gates: Validation in a dedicated testing environment confirms software quality before any deployment to the production environment, while automated testing frameworks provide continuous validation throughout development cycles.

Definition: The deployment phase manages the controlled release of tested software to production environments while minimizing disruption to existing users and business operations. The rollout to end users may include a beta-testing phase or a pilot launch.

Release management: Deployment teams coordinate user training sessions, deliver comprehensive documentation for system administrators, and activate support systems to handle post-release questions and issues. The software release life cycle encompasses these stages, including deployment, continuous delivery, and post-release management, ensuring a structured approach to software launches.

Risk mitigation: Teams implement rollback procedures and monitoring systems to ensure post-deployment stability, with continuous delivery practices enabling rapid response to production issues.
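Rollback procedures are easier to trust when the trigger is explicit. The function below is a hypothetical policy, not a feature of any particular deployment tool: it compares the new release's error rate with the pre-release baseline and recommends a rollback when the increase exceeds a tolerance, but only after enough traffic has been observed to make the comparison meaningful. The `tolerance` and `min_requests` defaults are illustrative assumptions.

```python
def should_roll_back(baseline_error_rate: float, errors: int, requests: int,
                     tolerance: float = 0.01, min_requests: int = 100) -> bool:
    """Recommend a rollback if the new release's error rate regresses too far.

    baseline_error_rate: fraction of failed requests before the release.
    errors / requests: counts observed since the release went live.
    tolerance: allowed absolute increase in error rate (hypothetical default).
    min_requests: traffic needed before the monitor is allowed to judge.
    """
    if requests < min_requests:
        return False  # too little data; keep monitoring
    error_rate = errors / requests
    return error_rate > baseline_error_rate + tolerance


if __name__ == "__main__":
    # 5% errors against a 0.5% baseline: well past the 1-point tolerance.
    print(should_roll_back(0.005, errors=50, requests=1000))
```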

Definition: The maintenance phase provides ongoing support through bug fixes, performance optimization, and feature enhancements based on user requirements and changing business needs.

Continuous improvement: Development teams integrate customer feedback into enhancement planning while maintaining system evolution strategies that adapt to new technologies and market requirements.

Long-term sustainability: This phase often consumes up to 60% of total software development lifecycle costs, making efficient maintenance processes critical for project success.

Transition: Different projects require varying approaches to executing these phases based on complexity, timeline, and organizational constraints.

Different software projects require tailored approaches to executing development life cycle phases, with various methodologies offering distinct advantages for specific project characteristics and team capabilities. Compared to other lifecycle management methodologies, SDLC provides a structured framework, but alternatives may emphasize flexibility, rapid iteration, or continuous delivery, depending on organizational needs and project goals.

Waterfall model approach: Linear progression through phases, with formal quality gates and comprehensive documentation at each stage. This traditional SDLC model works well for complex projects whose requirements are stable and subject to regulatory compliance, and equally for smaller projects with well-defined requirements and minimal client involvement. The V-shaped model, a variant that pairs each development phase with a corresponding testing phase, is best for time-limited projects with highly specific requirements where testing and quality assurance are the priority.

Agile methodology approach: Iterative process that compresses multiple phases into rapid development cycles called sprints, enabling development teams to respond quickly to changing customer expectations and market feedback. Agile is ideal for large, complex projects that require frequent changes and close collaboration with multiple stakeholders. The Iterative Model enables better control of scope, time, and resources, but it may lead to technical debt if errors are not addressed early.

Hybrid models: Many software development teams combine structured planning phases with flexible implementation approaches, maintaining comprehensive requirements analysis while enabling iterative development and continuous delivery practices.

DevOps integration: Modern development and operations teams break down the traditional silos between development, testing, and deployment through automation and continuous collaboration across the development lifecycle. DevOps suits larger projects where teams want continuous integration and deployment and where long-term maintenance is a priority.

Continuous Integration/Continuous Deployment (CI/CD): These practices merge development phase work with testing and deployment activities, enabling rapid application development while maintaining software quality standards.

Quality gates: Development teams establish defined checkpoints that ensure phase completion criteria before progression, maintaining systematic control while enabling flexibility within individual phases.

Transition: Selecting the right approach requires careful assessment of project characteristics and organizational capabilities.

Continuous delivery is a shift in software development workflows that lets teams ship high-quality software quickly and reliably. By automating the build, test, and deployment pipeline, continuous delivery ensures that every code change is validated and kept in a production-ready state, so it can be released to users rapidly and reliably. Automation removes manual steps, lowers the risk of human error, and shortens the feedback loop between development teams and end users.

Integrating continuous delivery into development workflows lets teams respond quickly to customer feedback, adapt to changing requirements, and ship improvements to production steadily. The approach is particularly valuable in agile development, where rapid iteration and continuous enhancement are needed to keep up with customer expectations and market demands. Beyond improving software quality, continuous delivery makes both development and operations teams more agile and responsive.

For organizations looking to make their software development lifecycle more efficient, continuous delivery is a key enabler of streamlined, reliable, customer-centric delivery that raises productivity without sacrificing quality.
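The core mechanic of continuous delivery is a fail-fast pipeline: a change is promoted only if every automated stage passes. The sketch below models stages as plain Python callables; the stage names and placeholder lambdas are hypothetical stand-ins for a real build tool, test runner, and deployer.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[str], bool]]


def run_pipeline(commit: str, stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run each stage against `commit` in order, stopping at the first failure.

    Returns (production_ready, log). A commit is production-ready only when
    every stage has passed.
    """
    log = []
    for name, stage in stages:
        ok = stage(commit)
        log.append(f"{name}: {'passed' if ok else 'FAILED'}")
        if not ok:
            return False, log  # later stages never run for a failed change
    return True, log


if __name__ == "__main__":
    # Placeholder stages; real ones would invoke build and test tooling.
    stages = [
        ("build", lambda c: True),
        ("unit-tests", lambda c: True),
        ("integration-tests", lambda c: True),
        ("deploy-to-staging", lambda c: True),
    ]
    print(run_pipeline("a1b2c3d", stages))
```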

Understanding project requirements and team capabilities enables informed decisions about which software development models will best support successful project delivery within specific organizational contexts.

When to use this: Project managers and technical leads can apply this framework when planning software development initiatives or optimizing existing development processes.

Organizations should select approaches based on project stability requirements, team experience, and customer feedback integration needs. Complex projects with regulatory requirements often benefit from traditional approaches, while software applications requiring market responsiveness work well with agile methodology.

Transition: Even with optimal methodology selection, specific challenges commonly arise during SDLC phase execution.

Well-chosen metrics help software development teams confirm that the software they deliver meets business objectives and customer expectations. Indicators such as code quality, test coverage, defect density, and customer satisfaction show how effective and efficient the development process is, and they provide a foundation for continuous improvement.

By monitoring these metrics systematically, development teams can find opportunities to optimize their processes, strengthen cross-functional collaboration, and keep their workflows delivering value to customers and the business.

A well-chosen toolkit supports modern software development teams at every stage of the development process. The right tools improve collaboration, streamline complex workflows, and provide visibility into how a project is progressing.

Let's dive into how these essential tools optimize development processes and drive success across teams:

Used together, these tools help software development teams optimize their development environment, improve cross-functional communication, and deliver robust, reliable software that meets today's demanding requirements.

These challenges affect software project success regardless of chosen development lifecycle methodology, requiring proactive management strategies to maintain project momentum and software quality.

Solution: Implement formal change control processes with comprehensive impact assessment procedures that evaluate how requirement changes affect timeline, budget, and technical architecture decisions.

Development teams should establish clear stakeholder communication protocols and expectation management frameworks that document all requirement changes and their implications for subsequent development phases.

Solution: Establish automated testing frameworks during the design phase and define specific coverage metrics that ensure comprehensive unit testing, integration testing, and system testing throughout the development process.

Quality assurance teams should integrate test planning with development phase activities, creating testing environments that parallel production environment configurations and enable continuous validation of software components.

Solution: Create standardized handoff procedures with detailed deliverable checklists that ensure complete information transfer between development teams working on different SDLC phases.

Implement documentation standards that support effective collaboration between software engineers, project management teams, and stakeholders throughout the systems development lifecycle.

Transition: Addressing these challenges systematically creates the foundation for consistent project success.

Mastering software development life cycle phases provides the foundation for consistent, successful software delivery that aligns development team efforts with business objectives while maintaining high quality software standards throughout the development process. A system typically consists of integrated hardware and software components that work together to perform complex functions, and a structured SDLC is essential to ensure these components are effectively coordinated to achieve advanced operational goals.

To get started:

Related Topics: Explore specific SDLC models like the spiral model for high-risk projects, DevOps integration for continuous delivery, and lifecycle management methodologies that support complex software solutions requiring ongoing maintenance and evolution.

Software product metrics measure quality, performance, and user satisfaction, aligning with business goals to improve your software. This article explains essential metrics and their role in guiding development decisions.

Software product metrics are quantifiable measurements that assess the characteristics and performance of software products. They are designed to align with business goals, add user value, and confirm that the product works as intended. Tracking these metrics helps ensure that your software meets quality standards, performs reliably, and fulfills user expectations. User satisfaction metrics include Net Promoter Score (NPS), Customer Satisfaction Score (CSAT), and Customer Effort Score (CES), which show how users experience the product and how satisfied they are. User engagement metrics include active users, session duration, and feature usage, which help teams understand how users interact with the product. Understanding these metrics is essential for continuous improvement.

Software metrics evaluate quality, performance, and effectiveness, guiding development decisions and keeping them aligned with user needs. The insights they provide shape development strategies, improving product quality as well as developer experience and productivity. They also help teams identify areas for improvement, assess project progress, and make informed decisions.

Quality software metrics reduce maintenance efforts, enabling teams to focus on developing new features and enhancing user satisfaction. Comprehensive insights into software health help teams detect issues early and guide improvements, ultimately leading to better software. These metrics serve as a compass, guiding your development team towards creating a robust and user-friendly product.

Software quality metrics are quantitative indicators that evaluate the quality, performance, maintainability, and complexity of software products. These measures let teams monitor progress, identify challenges, and adjust strategies during the software development process, and they play a central role in improving the overall quality of a software product.

By measuring various aspects such as functionality, reliability, and usability, quality metrics ensure that software systems meet user expectations and performance standards. The following subsections delve into specific key metrics that play a pivotal role in maintaining high code quality and software reliability.

Defect density is a metric that helps identify problematic areas of the codebase by measuring the number of defects relative to code size. It is typically expressed as defects per thousand lines of code (KLOC), and a high defect density signals potential maintenance challenges and a higher risk of further defects. Pinpointing areas with high defect density lets development teams focus their improvement work on those sections, leading to a more stable and reliable software product and better defect removal efficiency.

Understanding and reducing defect density is essential for maintaining high code quality. It provides a clear picture of the software’s health and helps teams prioritize bug fixes and software defects. Consistent monitoring allows teams to proactively address issues, enhancing the overall quality and user satisfaction of the software product.
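Defect density is simple arithmetic, and ranking modules by it shows where to focus. The sketch below expresses it per thousand lines of code (KLOC); the module names and counts are made up for illustration.

```python
def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects * 1000 / lines_of_code


# Hypothetical per-module data: (defects found, lines of code).
MODULES = {"billing": (18, 4_500), "auth": (3, 6_000), "reports": (7, 1_400)}


def rank_by_density(modules):
    """Module names sorted from highest to lowest defect density."""
    return sorted(modules, key=lambda m: defect_density(*modules[m]), reverse=True)


if __name__ == "__main__":
    for name in rank_by_density(MODULES):
        print(f"{name}: {defect_density(*MODULES[name]):.1f} defects/KLOC")
```

Here `reports` ranks first at 5.0 defects/KLOC even though it has the fewest defects in absolute terms after `auth`, which is exactly the kind of hotspot raw defect counts hide.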

Code coverage measures the percentage of code executed during testing, which shows whether tests are adequate and which parts of the code remain untested. It complements static analysis tools such as SonarQube, ESLint, and Checkstyle, which maintain code quality by enforcing consistent coding practices and detecting potential vulnerabilities before runtime. Together, these tools help teams adhere to code quality standards and reduce the likelihood of defects.
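Line coverage reduces to set arithmetic once a coverage tool has recorded which lines ran. In Python that recording is typically done by coverage.py; the sketch below assumes the executable and executed line numbers are already available and shows the percentage, the uncovered lines, and a simple pass/fail gate. The 80% threshold is an illustrative assumption, not a recommended standard.

```python
def line_coverage(executable: set, executed: set) -> float:
    """Percentage of executable lines that ran at least once during testing."""
    if not executable:
        return 100.0  # nothing to cover
    return 100.0 * len(executable & executed) / len(executable)


def uncovered_lines(executable: set, executed: set) -> list:
    """Executable lines that no test reached, in order."""
    return sorted(executable - executed)


def coverage_gate(percent: float, threshold: float = 80.0) -> bool:
    """Quality gate: pass only if coverage meets the (hypothetical) threshold."""
    return percent >= threshold


if __name__ == "__main__":
    executable = set(range(1, 11))   # lines 1-10 contain code
    executed = set(range(1, 9))      # tests ran lines 1-8
    pct = line_coverage(executable, executed)
    print(pct, uncovered_lines(executable, executed), coverage_gate(pct))
```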

Maintaining high code quality through comprehensive code coverage leads to fewer defects and improved code maintainability. Software quality management platforms that facilitate code coverage analysis include:

The Maintainability Index is a metric that reflects the software's complexity, readability, and documentation, all of which influence how easily a software system can be modified or updated. Metrics such as cyclomatic complexity, which counts the number of linearly independent paths through the code, are crucial for understanding complexity: high values typically signal maintenance challenges ahead and a greater risk of defects.
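For reference, a widely used formulation of the Maintainability Index (the one documented for Visual Studio's code metrics) combines Halstead volume, cyclomatic complexity, and lines of code, then rescales the result to 0-100. This version has no explicit documentation term; some older variants add a term for comment density. The sketch assumes the three inputs have already been measured by another tool.

```python
import math


def maintainability_index(halstead_volume: float, cyclomatic_complexity: float,
                          lines_of_code: float) -> float:
    """Maintainability Index on a 0-100 scale (higher is easier to maintain).

    Classic formula: 171 - 5.2 ln(HV) - 0.23 CC - 16.2 ln(LOC),
    rescaled by 100/171 and clamped at 0, as in Visual Studio's code metrics.
    Inputs are assumed to come from a separate metrics tool.
    """
    raw = (171
           - 5.2 * math.log(halstead_volume)
           - 0.23 * cyclomatic_complexity
           - 16.2 * math.log(lines_of_code))
    return max(0.0, raw * 100 / 171)


if __name__ == "__main__":
    simple = maintainability_index(1000, cyclomatic_complexity=10, lines_of_code=100)
    tangled = maintainability_index(1000, cyclomatic_complexity=40, lines_of_code=100)
    print(round(simple, 1), round(tangled, 1))
```

Holding size constant, raising cyclomatic complexity from 10 to 40 lowers the index, which matches the text's point that complexity is a maintenance risk.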

Related metrics, such as the Length of Identifiers (the average length of distinct identifiers in a program) and the Depth of Conditional Nesting (how deeply if statements are nested), also inform maintainability assessments. These metrics help identify areas that may need refactoring or better documentation, ultimately improving the maintainability and longevity of the software product.
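Cyclomatic complexity and conditional nesting depth can both be computed from a parse tree without running the code. The sketch below uses Python's standard `ast` module and is an approximation: it counts one extra path per `if`, loop, conditional expression, `except` clause, and comprehension, plus one per extra operand of `and`/`or`, and it ignores constructs such as `match`. Dedicated tools such as radon apply more detailed rules.

```python
import ast

# Node types that each add one independent path (an approximation of McCabe's count).
BRANCH_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp,
                ast.ExceptHandler, ast.comprehension)


def cyclomatic_complexity(source: str) -> int:
    """Approximate cyclomatic complexity: 1 + number of decision points."""
    decisions = 0
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, BRANCH_NODES):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` short-circuits at each extra operand.
            decisions += len(node.values) - 1
    return 1 + decisions


def max_if_nesting(source: str) -> int:
    """Depth of conditional nesting: the deepest chain of `if` inside `if`."""
    def depth(node, level):
        if not isinstance(node, ast.If):
            return max([level] + [depth(c, level) for c in ast.iter_child_nodes(node)])
        inner = level + 1
        best = max([inner] + [depth(c, inner) for c in node.body])
        orelse = node.orelse
        if len(orelse) == 1 and isinstance(orelse[0], ast.If):
            # `elif` is stored as a lone If in orelse; keep it at the same level.
            best = max(best, depth(orelse[0], level))
        else:
            best = max([best] + [depth(c, inner) for c in orelse])
        return best
    return depth(ast.parse(source), 0)


SAMPLE = '''
def classify(n):
    if n < 0:
        return "negative"
    elif n == 0:
        return "zero"
    for d in range(2, n):
        if n % d == 0:
            if d > 1 and d < n:
                return "composite"
    return "prime-ish"
'''

if __name__ == "__main__":
    print(cyclomatic_complexity(SAMPLE), max_if_nesting(SAMPLE))
```

Treating `elif` as the same level is a design choice: a flat `if/elif` chain reads as one decision, not as nested logic. A side effect is that an `else:` block containing only an `if` is treated the same way, since the parse tree does not distinguish the two.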

Performance and reliability metrics are vital for understanding the software’s ability to perform under various conditions over time. These metrics provide insights into the software’s stability, helping teams gauge how well the software maintains its operational functions without interruption. By implementing rigorous software testing and code review practices, teams can proactively identify and fix defects, thereby improving the software’s performance and reliability.

The following subsections explore specific essential metrics that are critical for assessing performance and reliability, including key performance indicators and test metrics.

Mean Time Between Failures (MTBF) is a key metric used to assess the reliability and stability of a system. It calculates the average time between failures, providing a clear indication of how often the system can be expected to fail. A higher MTBF indicates a more reliable system, as it means that failures occur less frequently.

Tracking MTBF helps teams understand the robustness of their software and identify potential areas for improvement. Analyzing this metric helps development teams implement strategies to enhance system reliability, ensuring consistent performance and meeting user expectations.

Mean Time to Repair (MTTR) reflects the average time needed to resolve issues after a system failure. It covers the full span from failure to restoration, including repair and testing time, and a lower MTTR means the system can be restored quickly, minimizing downtime and its impact on users. The same abbreviation is also used for Mean Time to Recovery, a closely related metric that focuses on how quickly service is restored after a failure so that disruption to users stays minimal.

Understanding MTTR is crucial for evaluating the effectiveness of maintenance processes. It provides insights into how efficiently a development team can address and resolve issues, ultimately contributing to the overall reliability and user satisfaction of the software product.
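Both reliability metrics fall out of an incident log. The sketch below assumes, as a simplification, that incidents are recorded as (failure time, restoration time) pairs in hours from the start of an observation window. It computes MTBF as total operating time divided by the number of failures and MTTR as total downtime divided by the number of failures.

```python
def reliability_metrics(incidents, window_hours: float):
    """Return (MTBF, MTTR) in hours for incidents within an observation window.

    incidents: list of (failed_at, restored_at) pairs, hours from window start.
    MTBF = total operating (up) time / number of failures
    MTTR = total downtime / number of failures
    """
    if not incidents:
        return float("inf"), 0.0  # no failures observed in the window
    downtime = sum(restored - failed for failed, restored in incidents)
    failures = len(incidents)
    uptime = window_hours - downtime
    return uptime / failures, downtime / failures


if __name__ == "__main__":
    log = [(100, 102), (500, 504), (900, 901)]
    mtbf, mttr = reliability_metrics(log, window_hours=1000)
    print(f"MTBF {mtbf:.0f} h, MTTR {mttr:.1f} h")
```

With three incidents lasting 2, 4, and 1 hours in a 1,000-hour window, MTBF is 993 / 3 = 331 hours and MTTR is 7 / 3, or about 2.3 hours.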

Response time measures the duration taken by a system to react to user commands, which is crucial for user experience. A shorter response time indicates a more responsive system, enhancing user satisfaction and engagement. Measuring response time helps teams identify performance bottlenecks that may negatively affect user experience.

Ensuring a quick response time is essential for maintaining high user satisfaction and retention rates. Performance monitoring tools can provide detailed insights into response times, helping teams optimize their software to deliver a seamless and efficient user experience.
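Response time is usually summarized from many samples rather than a single measurement. The sketch below times a function with `time.perf_counter` and summarizes the samples with the mean and the 95th percentile (nearest-rank method). Reporting a tail percentile alongside the mean is a common practice assumed here, because a few slow responses can hurt user experience while barely moving the average.

```python
import math
import statistics
import time


def time_calls(fn, *args, repeats: int = 20) -> list:
    """Wall-clock latency of `fn(*args)` in milliseconds, one sample per call."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        samples.append((time.perf_counter() - start) * 1000)
    return samples


def summarize(samples_ms: list) -> dict:
    """Mean, nearest-rank 95th percentile, and worst case of latency samples."""
    if not samples_ms:
        raise ValueError("no samples")
    ordered = sorted(samples_ms)
    rank = math.ceil(95 * len(ordered) / 100)  # 1-based nearest-rank index
    return {"mean": statistics.mean(ordered),
            "p95": ordered[rank - 1],
            "max": ordered[-1]}


if __name__ == "__main__":
    print(summarize(time_calls(sorted, list(range(10_000)))))
```

For twenty samples where eighteen take 10 ms and the other two take 150 ms and 200 ms, the mean is 26.5 ms but the p95 is 150 ms, which is the figure that points to the bottleneck.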

User engagement and satisfaction metrics are vital for assessing how users interact with a product and can significantly influence its success. These metrics provide critical insights into user behavior, preferences, and satisfaction levels, helping teams refine product features to enhance user engagement.

Tracking these metrics helps development teams identify areas for improvement and ensures the software meets user expectations. The following subsections explore specific metrics that are crucial for understanding user engagement and satisfaction.

Net Promoter Score (NPS) is a widely used gauge of customer loyalty, reflecting how likely customers are to recommend a product to others. It is calculated by subtracting the percentage of detractors from the percentage of promoters, providing a clear metric for customer loyalty. A higher NPS indicates that customers are more satisfied and likely to promote the product.

Tracking NPS helps teams understand customer satisfaction levels and identify areas for improvement. Focusing on increasing NPS helps development teams enhance user satisfaction and retention, leading to a more successful product.
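NPS surveys ask users how likely they are to recommend the product on a 0-10 scale; respondents scoring 9-10 count as promoters and 0-6 as detractors, while 7-8 are passives that count only toward the total. The function below applies that rule to a list of hypothetical responses.

```python
def net_promoter_score(scores) -> float:
    """NPS from 0-10 answers: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("no survey responses")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


if __name__ == "__main__":
    responses = [10, 9, 9, 8, 7, 6, 3, 10, 2, 9]  # hypothetical survey answers
    print(net_promoter_score(responses))
```

Ten responses with five promoters and three detractors give an NPS of 50 - 30 = 20.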

The number of active users reflects the software’s ability to retain user interest and engagement over time. Tracking daily, weekly, and monthly active users helps gauge the ongoing interest and engagement levels with the software. A higher number of active users indicates that the software is effectively meeting user needs and expectations.

Understanding and tracking active users is crucial for improving user retention strategies. Analyzing user engagement data helps teams enhance software features and ensure the product continues to deliver value.
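Daily, weekly, and monthly active users are distinct-user counts over a time window. The sketch below assumes a hypothetical event log of `(user_id, date)` pairs and also computes the DAU/MAU ratio, a commonly used stickiness measure that the text above does not mention; treat its inclusion as an illustrative assumption.

```python
from datetime import date, timedelta


def active_users(events, day: date, window_days: int) -> set:
    """Distinct users with at least one event in the window ending on `day`."""
    start = day - timedelta(days=window_days - 1)
    return {user for user, when in events if start <= when <= day}


def stickiness(events, day: date) -> float:
    """DAU / MAU: the share of the last 30 days' users who were active on `day`."""
    dau = len(active_users(events, day, 1))
    mau = len(active_users(events, day, 30))
    return dau / mau if mau else 0.0


if __name__ == "__main__":
    today = date(2024, 5, 31)
    log = [("a", today), ("b", today), ("a", today - timedelta(days=3)),
           ("c", today - timedelta(days=10)), ("d", today - timedelta(days=40))]
    print(len(active_users(log, today, 1)),    # daily active users
          len(active_users(log, today, 7)),    # weekly active users
          len(active_users(log, today, 30)),   # monthly active users
          round(stickiness(log, today), 2))
```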

Tracking how frequently specific features are utilized can inform development priorities based on user needs and feedback. Analyzing feature usage reveals which features are most valued and frequently utilized by users, guiding targeted enhancements and prioritization of development resources.

Monitoring specific feature usage helps development teams gain insights into user preferences and behavior. This information helps identify areas for improvement and ensures that the software evolves in line with user expectations and demands.
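Feature usage can be measured two ways: how often a feature is used and how many active users use it at all. The sketch below computes both from a hypothetical log of `(user_id, feature)` events and ranks features by adoption, meaning the share of active users who used the feature at least once.

```python
from collections import Counter, defaultdict


def feature_usage(events, active_user_count: int) -> dict:
    """Per-feature usage frequency and adoption, most-adopted feature first.

    `events` is an iterable of (user_id, feature) pairs; adoption is the share
    of active users who used the feature at least once.
    """
    uses = Counter()
    users = defaultdict(set)
    for user, feature in events:
        uses[feature] += 1
        users[feature].add(user)
    report = {f: {"uses": uses[f], "adoption": len(users[f]) / active_user_count}
              for f in uses}
    return dict(sorted(report.items(), key=lambda kv: kv[1]["adoption"], reverse=True))


if __name__ == "__main__":
    log = [("a", "export"), ("a", "export"), ("b", "export"), ("d", "export"),
           ("a", "search"), ("b", "search"), ("c", "dashboard")]
    for feature, stats in feature_usage(log, active_user_count=4).items():
        print(feature, stats)
```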

Financial metrics are essential for understanding the economic impact of software products and guiding business decisions effectively. These metrics help organizations evaluate the economic benefits and viability of their software products. Tracking financial metrics helps development teams make informed decisions that contribute to the financial health and sustainability of the software product. Tracking metrics such as MRR helps Agile teams understand their product's financial health and growth trajectory.

The following subsections explore specific financial metrics that are crucial for evaluating software development.

Customer Acquisition Cost (CAC) represents the total cost of acquiring a new customer, including marketing expenses and sales team salaries. It is calculated by dividing total sales and marketing costs by the number of new customers acquired. A high CAC suggests that marketing needs to be more targeted, or that the product's value proposition needs strengthening.

Understanding CAC is crucial for optimizing marketing efforts and ensuring that the cost of acquiring new customers is sustainable. Reducing CAC helps organizations improve overall profitability and ensure the long-term success of their software products.

Customer lifetime value (CLV) quantifies the total revenue a customer generates over the entire duration of their relationship with the product. It is calculated by multiplying the average purchase value by the purchase frequency and the customer lifespan. A healthy CLV-to-CAC ratio indicates long-term value and sustainable revenue.

Tracking CLV helps organizations assess the long-term value of customer relationships and make informed business decisions. Focusing on increasing CLV helps development teams enhance customer satisfaction and retention, contributing to the financial health of the software product.
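The two formulas above combine naturally into the CLV-to-CAC ratio. The sketch uses the revenue-based CLV definition given in this article (some teams use gross margin instead), and the figures in the usage example are invented for illustration.

```python
def customer_acquisition_cost(sales_and_marketing_spend: float, new_customers: int) -> float:
    """Total sales and marketing cost divided by the customers it acquired."""
    if new_customers <= 0:
        raise ValueError("new_customers must be positive")
    return sales_and_marketing_spend / new_customers


def customer_lifetime_value(avg_purchase_value: float, purchases_per_year: float,
                            lifespan_years: float) -> float:
    """Average purchase value x purchase frequency x customer lifespan."""
    return avg_purchase_value * purchases_per_year * lifespan_years


if __name__ == "__main__":
    cac = customer_acquisition_cost(50_000, 100)   # $50k spend, 100 new customers
    clv = customer_lifetime_value(100, 12, 3)      # $100 a month for 3 years
    print(cac, clv, clv / cac)
```

In this example each customer brings in 7.2 times what it cost to acquire them.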

Monthly recurring revenue (MRR) is the predictable revenue that subscription services generate each month. It is calculated by multiplying the total number of paying customers by the average revenue per customer, and it serves as a key indicator of the financial health of subscription-based services.

Tracking MRR allows businesses to forecast growth and make informed financial decisions. A steady or increasing MRR indicates a healthy subscription-based business, while fluctuations may signal the need for adjustments in pricing or service offerings.
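Subscriptions are often billed on different cycles, so MRR is commonly computed by normalizing each subscription to a monthly amount before summing. The sketch below assumes a hypothetical `(price, billing_period_in_months)` record per active subscription and one subscription per customer; under that assumption, dividing MRR by the number of subscriptions recovers the average revenue per customer from the formula above.

```python
def monthly_recurring_revenue(subscriptions) -> float:
    """Sum of each active subscription's price normalized to one month.

    `subscriptions` holds (price, billing_period_in_months) pairs, so an
    annual plan priced at 1200 contributes 100 per month.
    """
    return sum(price / months for price, months in subscriptions)


def average_revenue_per_customer(subscriptions) -> float:
    """Assumes one subscription per paying customer."""
    return monthly_recurring_revenue(subscriptions) / len(subscriptions)


if __name__ == "__main__":
    subs = [(50, 1), (1200, 12), (30, 1)]  # two monthly plans and one annual plan
    print(monthly_recurring_revenue(subs), average_revenue_per_customer(subs))
```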

Selecting the right metrics for your project is crucial for ensuring that you focus on the most relevant aspects of your software development process. A systematic approach helps identify the most appropriate product metrics that can guide your development strategies and improve the overall quality of your software. Activation rate tracks the percentage of users who complete a specific set of actions consistent with experiencing a product's core value, making it a valuable metric for understanding user engagement.

The following subsections provide insights into key considerations for choosing the right metrics.

Metrics selected should directly support the overarching goals of the business to ensure actionable insights. By aligning metrics with business objectives, teams can make informed decisions that drive business growth and improve customer satisfaction. For example, if your business aims to enhance user engagement, tracking metrics like active users and feature usage will provide valuable insights.

A data-driven approach ensures that the metrics you track provide objective data that can guide your marketing strategy, product development, and overall business operations. Product managers play a crucial role in selecting metrics that align with business goals, ensuring that the development team stays focused on delivering value to users and stakeholders.

Distinguishing vanity metrics from actionable metrics is essential for effective decision-making. Vanity metrics may look impressive but provide no insight and drive no improvements, while actionable metrics inform the decisions and strategies that improve software quality. Avoid vanity metrics and focus instead on actionable metrics tied to business outcomes, so that progress is meaningful and aligned with organizational goals.

Using the right metrics fosters a culture of accountability and continuous improvement within agile teams. By focusing on actionable metrics, development teams can track progress, identify areas for improvement, and make changes that lead to better software products. That focus is what keeps a metrics program driving real value.

As a product develops, the metrics a team focuses on should shift with it, moving toward user engagement and retention. Early in the product lifecycle, metrics like user acquisition and activation rates are crucial for understanding initial user interest and onboarding success.

As the product matures, metrics related to user satisfaction, feature usage, and retention become more critical. Metrics should evolve to reflect the changing priorities and challenges at each stage of the product lifecycle.

Tracking metrics continuously and adjusting them as the product evolves keeps development teams focused on what matters most at each stage, which sustains the value of product metrics over time.

Having the right tools for tracking and visualizing metrics is essential for automatically collecting raw data and providing real-time insights. These tools act as diagnostics for maintaining system performance and making informed decisions.

The following subsections explore tools that help teams track and visualize both software metrics and process metrics.

Static analysis tools examine code without executing it, allowing developers to identify potential bugs and vulnerabilities early in the development process. They improve code quality and maintainability by surfacing insights into code structure, potential errors, and security vulnerabilities. Popular static analysis tools include Typo; SonarQube, which provides comprehensive code metrics; and ESLint, which detects problematic patterns in JavaScript code.

Using static analysis tools helps development teams enforce consistent coding practices and detect issues early, ensuring high code quality and reducing the likelihood of software failures.
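To show what analyzing code without executing it means in practice, the sketch below parses Python source with the standard `ast` module and flags two well-known pitfalls: bare `except:` clauses and mutable default arguments. The two rules are illustrative choices; production tools such as SonarQube and ESLint apply many more rules with far more careful analysis.

```python
import ast


def find_issues(source: str) -> list:
    """Report suspicious patterns by inspecting the syntax tree only."""
    issues = []
    for node in ast.walk(ast.parse(source)):
        # Rule 1: `except:` with no exception type also catches KeyboardInterrupt.
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            issues.append(f"line {node.lineno}: bare 'except:'")
        # Rule 2: a list/dict/set default is created once and shared across calls.
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            for default in node.args.defaults + node.args.kw_defaults:
                if isinstance(default, (ast.List, ast.Dict, ast.Set)):
                    issues.append(
                        f"line {default.lineno}: mutable default in {node.name}()")
    return issues


SAMPLE = """
def add_item(item, bucket=[]):
    bucket.append(item)
    return bucket

try:
    add_item(1)
except:
    pass
"""

if __name__ == "__main__":
    for issue in find_issues(SAMPLE):
        print(issue)
```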

Dynamic analysis tools execute code to find runtime errors, significantly improving software quality. Examples of dynamic analysis tools include Valgrind and Google AddressSanitizer. These tools help identify issues that may not be apparent in static analysis, such as memory leaks, buffer overflows, and other runtime errors.

Incorporating dynamic analysis tools into the development process helps ensure reliable software performance under real-world conditions, improving user satisfaction and reducing the risk of defects.

Performance monitoring tools track performance, availability, and resource usage. Examples include:

Insights from performance monitoring tools help teams identify performance bottlenecks and ensure adherence to SLAs, so they can optimize system performance, keep users engaged, and make sure the software meets user expectations.

AI coding assistants do accelerate code creation, but they also introduce variability in style, complexity, and maintainability. The bottleneck has shifted from writing code to understanding, reviewing, and validating it.

Effective AI-era code reviews require three things:

AI code reviews are not “faster reviews.” They are smarter, risk-aligned reviews that help teams maintain quality without slowing down the flow of work.

Understanding and utilizing software product metrics is crucial for the success of any software development project. These metrics provide valuable insights into various aspects of the software, from code quality to user satisfaction. By tracking and analyzing these metrics, development teams can make informed decisions, enhance product quality, and ensure alignment with business objectives.

Choosing the right metrics and tracking them with appropriate tools can significantly improve the software development process. By focusing on actionable metrics, aligning them with business goals, and evolving them throughout the product lifecycle, teams can build robust, user-friendly, and financially successful software products. Tools that collect data automatically and feed dashboards make this practical, providing real-time insights for informed decision-making. Use software product metrics to drive continuous improvement and long-term success.

Software product metrics are quantifiable measurements that evaluate the performance and characteristics of software products, aligning with business goals while adding value for users. They play a crucial role in ensuring the software functions effectively.

Defect density is crucial in software development as it highlights problematic areas within the code by quantifying defects per unit of code. This measurement enables teams to prioritize improvements, ultimately reducing maintenance challenges and mitigating defect risks.
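Defect density is commonly expressed as defects per thousand lines of code (KLOC). A minimal sketch of the arithmetic, using made-up module names and counts purely for illustration:

```python
def defect_density(defects: int, lines_of_code: int) -> float:
    """Return defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


# Hypothetical modules: (defects found, lines of code)
modules = {
    "billing": (18, 12_000),
    "auth": (4, 8_000),
    "search": (9, 3_000),
}

# Rank modules so the most defect-dense code is prioritized first.
ranked = sorted(modules, key=lambda m: defect_density(*modules[m]), reverse=True)
for name in ranked:
    print(f"{name}: {defect_density(*modules[name]):.2f} defects/KLOC")
```

Here `search` ranks first at 3.00 defects/KLOC, ahead of `billing` (1.50) and `auth` (0.50), even though `billing` has the most defects in absolute terms. Normalizing by code size is what makes the comparison fair.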

Code coverage significantly enhances software quality by ensuring that a high percentage of the code is tested, which helps identify untested areas and reduces defects. This thorough testing ultimately leads to improved code maintainability and reliability.

Tracking active users is crucial as it measures ongoing interest and engagement, allowing you to refine user retention strategies effectively. This insight helps ensure the software remains relevant and valuable to its users. A low user retention rate might suggest a need to improve the onboarding experience or add new features.
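Retention is often measured per cohort: of the users who were active at the start of a period, what share are still active at the end? A small sketch with hypothetical user IDs; users who join later are deliberately excluded from the calculation:

```python
def retention_rate(start_cohort: set, active_later: set) -> float:
    """Percentage of users in the starting cohort who are still active later."""
    if not start_cohort:
        return 0.0
    # Only users from the original cohort count; new sign-ups are ignored.
    retained = start_cohort & active_later
    return 100 * len(retained) / len(start_cohort)


# Hypothetical user IDs active in week 1 and in week 4.
week1 = {"u1", "u2", "u3", "u4", "u5"}
week4 = {"u2", "u4", "u6", "u7"}  # u6 and u7 are new users

print(f"Week-4 retention: {retention_rate(week1, week4):.0f}%")
```

Two of the five week-1 users (u2 and u4) are still active in week 4, so retention is 40%, regardless of how many new users arrived in the meantime.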

AI code reviews improve the software development process by speeding up coding while maintaining high code quality, which reduces human error and streamlines workflows. This improves efficiency and helps teams quickly identify and address bottlenecks.

Miscommunication and unclear responsibilities are some of the biggest reasons projects stall, especially for engineering, product, and cross-functional teams.

A survey by PMI found that 37% of project failures are caused by a lack of clearly defined roles and responsibilities. When no one knows who owns what, deadlines slip, there’s no accountability, and team trust takes a hit.

A RACI chart can change that. By clearly mapping out who is Responsible, Accountable, Consulted, and Informed, RACI charts bring structure, clarity, and speed to team workflows.

But beyond the basics, we can use automation, graph models, and analytics to build smarter RACI systems that scale. Let’s dive into how.

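A RACI chart is a project management tool that clearly outlines roles and responsibilities across a team. It defines four key roles:

- Responsible: the person or people who do the work to complete the task.
- Accountable: the single owner who answers for the outcome and signs off on the work.
- Consulted: the people whose input is sought before or during the work.
- Informed: the people who are kept up to date on progress and results.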

RACI charts can be used in many scenarios, from coordinating a product launch to handling a critical incident to organizing sprint planning meetings.

While traditional relational databases can model RACI charts, graph databases are a much better fit. Graphs naturally represent complex relationships without rigid table structures, making them ideal for dynamic team environments. In a graph model:

Using a graph database like Neo4j or Amazon Neptune, teams can quickly spot patterns. For example, you can easily find individuals who are assigned too many "Responsible" tasks, indicating a risk of overload.

You can also detect tasks that are missing an "Accountable" person, helping you catch potential gaps in ownership before they cause delays.
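The two checks above can be sketched without a database by treating people and tasks as nodes and each RACI assignment as an edge labelled with its role. The sample data and the overload threshold below are hypothetical; in Neo4j the same logic would be expressed as pattern-matching queries in Cypher.

```python
from collections import Counter

# Each edge links a person to a task with a RACI role (hypothetical sample data).
edges = [
    ("alice", "R", "api-design"),
    ("alice", "R", "db-migration"),
    ("alice", "R", "load-testing"),
    ("alice", "A", "release-notes"),
    ("bob", "A", "api-design"),
    ("bob", "C", "db-migration"),
    ("carol", "R", "release-notes"),
    ("carol", "I", "load-testing"),
]
tasks = {task for _, _, task in edges}

MAX_RESPONSIBLE = 2  # assumed overload threshold; tune per team

# People with more "Responsible" edges than the threshold.
responsible_counts = Counter(person for person, role, _ in edges if role == "R")
overloaded = sorted(p for p, n in responsible_counts.items() if n > MAX_RESPONSIBLE)

# Tasks with no incoming "Accountable" edge.
accountable_tasks = {task for _, role, task in edges if role == "A"}
missing_accountable = sorted(tasks - accountable_tasks)

print("Overloaded:", overloaded)
print("Missing Accountable:", missing_accountable)
```

With this data, `alice` is flagged as overloaded (three Responsible tasks), and `db-migration` and `load-testing` are flagged as having no Accountable owner.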

Graphs make it far easier to handle complex team structures and keep projects running smoothly, and the need for them grows as organizations and projects grow.

Once you model RACI relationships, you can apply simple algorithms to detect imbalances in how work is distributed. For example, you can spot tasks missing "Consulted" or "Informed" connections, which can cause blind spots or miscommunication.

By building scoring models, you can measure responsibility density, i.e., how many tasks each person is involved in, and then flag potential issues like redundancy. If two people are marked as "Accountable" for the same task, it could cause confusion over ownership.
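A minimal scoring sketch, assuming assignments are stored as hypothetical `(person, role, task)` tuples. It computes responsibility density per person and flags two of the issues described above: tasks with more than one Accountable person, and tasks missing a Consulted or Informed connection.

```python
from collections import defaultdict

# (person, role, task) assignments; hypothetical sample data.
assignments = [
    ("alice", "R", "checkout"),
    ("alice", "A", "checkout"),
    ("bob", "A", "checkout"),
    ("bob", "R", "search"),
    ("carol", "A", "search"),
    ("carol", "C", "search"),
    ("dave", "I", "search"),
]

roles_by_task = defaultdict(lambda: defaultdict(set))  # task -> role -> people
tasks_by_person = defaultdict(set)                      # person -> tasks
for person, role, task in assignments:
    roles_by_task[task][role].add(person)
    tasks_by_person[person].add(task)

# Responsibility density: number of distinct tasks each person is involved in.
density = {person: len(tasks) for person, tasks in tasks_by_person.items()}

# Redundancy: more than one Accountable person on the same task.
duplicate_accountable = sorted(t for t, r in roles_by_task.items() if len(r["A"]) > 1)

# Blind spots: tasks with nobody Consulted or nobody Informed.
missing_c_or_i = sorted(t for t, r in roles_by_task.items() if not r["C"] or not r["I"])

print("Density:", density)
print("Multiple Accountable:", duplicate_accountable)
print("Missing C or I:", missing_c_or_i)
```

Here `checkout` is flagged twice: both `alice` and `bob` are Accountable, and nobody is Consulted or Informed.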

Using tools like Python with libraries such as Pandas and NetworkX, teams can create matrix-style breakdowns of roles versus tasks. This makes it easy to visualize overlaps, gaps, and overloaded roles, helping managers balance team workloads more effectively and ensure smoother project execution.
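A dependency-free sketch of such a matrix-style breakdown, using hypothetical assignments. Rows are people, columns are tasks, and each cell holds the role letters a person has on that task. With Pandas installed, `pandas.crosstab` can build a similar people-by-tasks table from a DataFrame of assignments.

```python
# (person, role, task) assignments; hypothetical sample data.
assignments = [
    ("alice", "R", "checkout"),
    ("bob", "A", "checkout"),
    ("bob", "R", "search"),
    ("carol", "A", "search"),
    ("carol", "C", "checkout"),
]

people = sorted({p for p, _, _ in assignments})
tasks = sorted({t for _, _, t in assignments})

# matrix[person][task] holds role letters such as "R" or "RA" ("" = no role).
matrix = {p: {t: "" for t in tasks} for p in people}
for person, role, task in assignments:
    matrix[person][task] += role


def render(matrix: dict, people: list, tasks: list) -> str:
    """Format the matrix as a fixed-width table, showing "-" for empty cells."""
    width = max(len(x) for x in people + tasks) + 2
    header = "".ljust(width) + "".join(t.ljust(width) for t in tasks)
    rows = [
        p.ljust(width) + "".join((matrix[p][t] or "-").ljust(width) for t in tasks)
        for p in people
    ]
    return "\n".join([header, *rows])


print(render(matrix, people, tasks))
```

Scanning a row shows how much one person carries; scanning a column shows whether a task has every role it needs.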

After clearly mapping the RACI roles, teams can automate workflows to move even faster. Assignments can be auto-filled based on project type or templates, reducing manual setup.

You can also trigger smart notifications, like sending a Slack or email alert, when a "Responsible" task has no "Consulted" input, or when a task is completed without informing stakeholders.
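A minimal sketch of the two notification rules above, assuming tasks arrive as simple dictionaries (a hypothetical shape, not any tool's API format). The sender posts a JSON body with a `text` field to a Slack incoming webhook; the webhook URL is a placeholder you would supply.

```python
import json
import urllib.request


def find_alerts(task: dict) -> list:
    """Return alert messages for two RACI gap rules on a single task."""
    alerts = []
    roles = task.get("roles", {})
    if roles.get("R") and not roles.get("C"):
        alerts.append(f"Task '{task['name']}' has a Responsible owner but nobody Consulted.")
    if task.get("status") == "done" and not roles.get("I"):
        alerts.append(f"Task '{task['name']}' was completed without informing stakeholders.")
    return alerts


def post_to_slack(webhook_url: str, message: str) -> None:
    """POST a message to a Slack incoming webhook URL."""
    body = json.dumps({"text": message}).encode("utf-8")
    req = urllib.request.Request(
        webhook_url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        resp.read()


# Hypothetical task record, e.g. fetched from a project management tool.
task = {"name": "payment-retry", "status": "done", "roles": {"R": ["alice"], "A": ["bob"]}}
for alert in find_alerts(task):
    print(alert)  # in production: post_to_slack(SLACK_WEBHOOK_URL, alert)
```

Keeping the rule logic separate from the sender makes the rules easy to test and lets you swap Slack for email without touching them.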

Tools like Zapier or Make can automate these workflows. Common use cases include automatically assigning a QA lead when a bug is filed or pinging a Product Manager when a feature pull request (PR) is merged.

To make full use of RACI models, you can integrate directly with popular project management tools via their APIs. Platforms such as Jira, Asana, and Trello let you extract task and assignee data in real time.

For example, a Jira API call can pull a list of stories missing an "Accountable" owner, helping project managers address gaps quickly. In Asana, webhooks can automatically trigger role reassignment if a project’s scope or timeline changes.
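A hedged sketch of the Jira example. Jira has no built-in "Accountable" field, so this assumes one was added as a custom field with that name. The site URL is hypothetical, and the endpoint path follows Jira Cloud's REST API v3 issue search; check it against the API documentation for your deployment. Only the first page of results is read; real code should follow the pagination token the API returns.

```python
import base64
import json
import urllib.parse
import urllib.request

# Assumptions: a Jira Cloud site and a custom field named "Accountable".
JIRA_BASE_URL = "https://your-company.atlassian.net"  # hypothetical


def build_search_url(project_key: str) -> str:
    """Build an issue-search URL for stories with no Accountable owner."""
    jql = f'project = {project_key} AND issuetype = Story AND "Accountable" is EMPTY'
    params = urllib.parse.urlencode({"jql": jql, "fields": "summary,assignee"})
    return f"{JIRA_BASE_URL}/rest/api/3/search/jql?{params}"


def fetch_unowned_stories(project_key: str, email: str, api_token: str) -> list:
    """Call the search endpoint with basic auth (email + API token); first page only."""
    creds = base64.b64encode(f"{email}:{api_token}".encode()).decode()
    req = urllib.request.Request(
        build_search_url(project_key),
        headers={"Authorization": f"Basic {creds}", "Accept": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp).get("issues", [])
```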

These integrations make it easier to keep RACI charts accurate and up to date, allowing teams to respond dynamically as projects evolve, without the need for constant manual checks or updates.

Visualizing RACI data makes it easier to spot patterns and drive better decisions. Clear visual maps surface bottlenecks like overloaded team members and make onboarding faster by showing new hires exactly where they fit. Visualization also enables smoother cross-functional reviews, helping teams quickly understand who is responsible for what across departments.

Popular libraries like D3.js, Mermaid.js, Graphviz, and Plotly can bring RACI relationships to life. Force-directed graphs are especially useful, as they visually highlight overloaded individuals or missing roles at a glance.
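As one example, RACI assignments can be turned into Graphviz DOT text with a few lines of Python. The sample data and the role-to-color mapping are hypothetical choices for illustration.

```python
# (person, role, task) assignments; hypothetical sample data.
assignments = [
    ("alice", "R", "checkout"),
    ("bob", "A", "checkout"),
    ("carol", "C", "checkout"),
    ("alice", "R", "search"),
]

# Arbitrary color per role so each relationship type stands out.
ROLE_COLORS = {"R": "blue", "A": "red", "C": "darkgreen", "I": "gray"}


def to_dot(assignments: list) -> str:
    """Render person-to-task RACI edges as a Graphviz DOT digraph."""
    lines = ["digraph raci {", "  rankdir=LR;"]
    # Draw tasks as boxes so they are easy to tell apart from people.
    for task in sorted({t for _, _, t in assignments}):
        lines.append(f'  "{task}" [shape=box];')
    for person, role, task in assignments:
        color = ROLE_COLORS.get(role, "black")
        lines.append(f'  "{person}" -> "{task}" [label="{role}", color={color}];')
    lines.append("}")
    return "\n".join(lines)


print(to_dot(assignments))
```

Piping the output through `dot -Tsvg -o raci.svg` produces an image; Graphviz's `neato` or `fdp` layout engines give the force-directed style mentioned above.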

For example, a dashboard could pull data from project management tools via their APIs and update an interactive org-task-role graph in real time. Teams would immediately see when responsibilities become unbalanced or critical gaps emerge, turning RACI into a living system that actively guides collaboration.

Collecting RACI data over time gives teams a much clearer picture of how work is actually distributed, because the distribution at the start of a project can look entirely different from the one that emerges as the project evolves.

Regularly analyzing RACI data helps spot patterns early, make better staffing decisions, and ensure responsibilities stay fair and clear.

Several simple metrics can give you powerful insights. Track the average number of tasks assigned as "Responsible" or "Accountable" per person. Measure how often different teams are being consulted on projects; too little or too much could signal issues. Also, monitor the percentage of tasks that are missing a complete RACI setup, which could expose gaps in planning.
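The three metrics above can be computed from a simple nested structure. The sketch assumes a hypothetical shape of task to role to a list of `(person, team)` pairs; the results are the kind of values a Dash or Streamlit page, or a Looker or Tableau tile, could plot over time.

```python
from collections import Counter

# Hypothetical RACI records: task -> role -> list of (person, team).
raci = {
    "checkout": {"R": [("alice", "payments")], "A": [("bob", "payments")],
                 "C": [("erin", "security")], "I": [("dan", "support")]},
    "search": {"R": [("carol", "discovery")], "A": [("carol", "discovery")],
               "C": [("erin", "security")]},
    "onboarding": {"R": [("alice", "payments")]},
}

people = {p for roles in raci.values() for members in roles.values() for p, _ in members}

# Average number of Responsible or Accountable assignments per person.
ra_total = sum(len(roles.get(r, [])) for roles in raci.values() for r in ("R", "A"))
avg_ra_per_person = ra_total / len(people)

# How often each team is consulted across all tasks.
consults_by_team = Counter(team for roles in raci.values() for _, team in roles.get("C", []))

# Share of tasks missing at least one of the four RACI roles.
incomplete = [t for t, roles in raci.items() if not all(roles.get(r) for r in "RACI")]
pct_incomplete = 100 * len(incomplete) / len(raci)

print(f"Avg R/A per person: {avg_ra_per_person:.1f}")
print("Consults by team:", dict(consults_by_team))
print(f"Tasks with incomplete RACI: {pct_incomplete:.1f}% {incomplete}")
```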

You don’t need a big budget to start. Using Python with Dash or Streamlit, you can quickly create a basic internal dashboard to track these metrics. If your company already uses Looker or Tableau, you can integrate RACI data using simple SQL queries. A clear dashboard makes it easy for managers to keep workloads balanced and projects on track.

Keeping RACI charts consistent across teams requires a mix of planning, automation, and gradual culture change. Here are some simple ways to enforce it:

RACI charts are one of those parts of management theory that actually drive results when combined with data, automation, and visualization. By clearly defining who is Responsible, Accountable, Consulted, and Informed, teams avoid confusion, reduce delays, and improve collaboration.

Integrating RACI into workflows, dashboards, and project tools makes it easier to spot gaps, balance workloads, and keep projects moving smoothly. With the right systems in place, organizations can work faster, smarter, and with far less friction across every team.

Developers want to write code, not spend time managing infrastructure. But modern software development requires agility.

Frequent releases, faster deployments, and scaling challenges are the norm. If you get stuck maintaining servers and managing complex deployments, you'll slow down.

This is where Platform-as-a-Service (PaaS) comes in. It provides a ready-made environment for building, deploying, and scaling applications.

In this post, we’ll explore how PaaS streamlines processes with containerization, orchestration, API gateways, and much more.

Platform-as-a-Service (PaaS) is a cloud computing model that abstracts infrastructure management. It provides a complete environment for developers to build, deploy, and manage applications without worrying about servers, storage, or networking.

For example, instead of configuring databases or managing Kubernetes clusters, developers can focus on coding. Popular PaaS options like AWS Elastic Beanstalk, Google App Engine, and Heroku handle the heavy lifting.

These solutions offer built-in tools for scaling, monitoring, and deployment, making development faster and more efficient.

PaaS simplifies software development by removing infrastructure complexities. It accelerates the application lifecycle, from coding to deployment.

Businesses can focus on innovation without worrying about server management or system maintenance.

Whether you’re a startup with a goal to launch quickly or an enterprise managing large-scale applications, PaaS offers all the flexibility and scalability you need.

Here’s why your business can benefit from PaaS:

Irrespective of the size of the business, these are the benefits that no one wants to leave on the table. This makes PaaS an easy choice for most businesses.

PaaS platforms offer a suite of components that helps teams achieve effective software delivery. From application management to scaling, these tools simplify complex tasks.

Understanding these components helps businesses build reliable, high-performance applications.

Let’s explore the key components that power PaaS environments:

Containerization tools like Docker and orchestration platforms like Kubernetes enable developers to build modular, scalable applications using microservices.

Containers package applications with their dependencies, ensuring consistent behavior across development, testing, and production.

In a PaaS setup, containerized workloads are deployed seamlessly.

For example, a video streaming service could run separate containers for user authentication, content management, and recommendations, making updates and scaling easier.

PaaS platforms often include robust orchestration tools such as Kubernetes, OpenShift, and Cloud Foundry.

These manage multi-container applications by automating deployment, scaling, and maintenance.

Features like auto-scaling, self-healing, and service discovery ensure resilience and high availability.

For the video streaming service described above, Kubernetes can automatically scale viewer-facing services during peak hours while maintaining stable performance.
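Kubernetes' Horizontal Pod Autoscaler documents its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipping changes when the ratio is within a tolerance (0.1 by default). The sketch below is a simplified model of that rule with replica bounds added; the real controller also handles pod readiness, multiple metrics, and scale-down stabilization windows, which are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified model of the Kubernetes HPA scaling formula."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already within tolerance of the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result within the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


# Peak hours: 4 replicas averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))  # 6
# Quiet hours: 6 replicas averaging 15% CPU against a 60% target.
print(desired_replicas(6, 15, 60))  # 2
```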

API gateways like Kong, Apigee, and AWS API Gateway act as entry points for managing external requests. They provide essential services like rate limiting, authentication, and request routing.

In a microservices-based PaaS environment, the API gateway ensures secure, reliable communication between clients and the services behind it.

It can help manage traffic to ensure premium users receive prioritized access during high-demand events.
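Gateways such as Kong or AWS API Gateway configure rate limits declaratively, so you would rarely write this yourself. To illustrate the idea, here is a token bucket, one common rate-limiting algorithm, with hypothetical per-tier limits that give premium users a larger budget. It is a sketch, not how any particular gateway implements limiting.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class TokenBucket:
    """Token bucket: refills `rate` tokens per second, holding at most `capacity`."""

    rate: float
    capacity: float
    tokens: float = field(init=False)
    updated: float = field(init=False)

    def __post_init__(self) -> None:
        # Start full so a new client can burst up to `capacity` requests.
        self.tokens = self.capacity
        self.updated = time.monotonic()

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Refill for the elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical tiers: (refill rate per second, burst capacity).
TIER_LIMITS: Dict[str, Tuple[float, float]] = {"free": (5, 10), "premium": (50, 100)}
buckets: Dict[str, TokenBucket] = {}


def allow_request(user_id: str, tier: str) -> bool:
    """Admit or reject one request for a user, creating their bucket on first use."""
    if user_id not in buckets:
        rate, capacity = TIER_LIMITS[tier]
        buckets[user_id] = TokenBucket(rate, capacity)
    return buckets[user_id].allow()
```

The optional `now` argument makes the bucket easy to test deterministically; in a gateway it would simply use the current clock.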

Deployment pipelines are the backbone of modern software development. In a PaaS environment, they automate the process of building, testing, and deploying applications.

This helps reduce manual work and accelerates time-to-market. With efficient pipelines, developers can release new features quickly and maintain application stability.

PaaS platforms integrate seamlessly with tools for Continuous Integration/Continuous Deployment (CI/CD) and Infrastructure-as-Code (IaC), streamlining the entire software lifecycle.

CI/CD automates the movement of code from development to production. Platforms like Typo, GitHub Actions, Jenkins, and GitLab CI ensure every code change is tested and deployed efficiently.

Benefits of CI/CD in PaaS:

IaC tools like Terraform, AWS CloudFormation, and Pulumi allow developers to define infrastructure using code. Instead of manual provisioning, infrastructure resources are declared, versioned, and deployed consistently.
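Tools like Terraform compare the declared configuration with the infrastructure that actually exists and compute a plan of changes. The toy sketch below illustrates only that declare-compare-converge idea with plain dictionaries and hypothetical resource names; it is not how Terraform, CloudFormation, or Pulumi are implemented.

```python
# Desired state, as it might be declared in version-controlled config (hypothetical).
desired = {
    "web-app": {"instances": 3, "size": "small"},
    "worker": {"instances": 2, "size": "medium"},
}
# Actual state, as it might be reported by the cloud provider (hypothetical).
actual = {
    "web-app": {"instances": 2, "size": "small"},
    "legacy-cron": {"instances": 1, "size": "small"},
}


def plan(desired: dict, actual: dict) -> list:
    """List the (action, resource) changes needed to make actual match desired."""
    changes = []
    for name in sorted(desired.keys() | actual.keys()):
        if name not in actual:
            changes.append(("create", name))
        elif name not in desired:
            changes.append(("delete", name))
        elif desired[name] != actual[name]:
            changes.append(("update", name))
    return changes


for action, name in plan(desired, actual):
    print(f"{action:>6} {name}")
```

Because the desired state lives in code, the same plan can be reviewed in a pull request and applied identically in every environment.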

Advantages of IaC in PaaS:

Together, CI/CD and IaC ensure smoother deployments, greater agility, and operational efficiency.

PaaS offers flexible scaling to manage application demand.

Tools like Kubernetes, AWS Elastic Beanstalk, and Azure App Service provide auto-scaling, automatically adjusting resources based on traffic.

Additionally, load balancers distribute incoming requests across instances, preventing overload and ensuring consistent performance.
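Round robin is one of the simplest ways a load balancer can distribute requests; other strategies, such as least connections, also exist. A minimal sketch with hypothetical instance names and a simple health flag, so traffic skips instances marked as down:

```python
import itertools


class RoundRobinBalancer:
    """Hands out backend instances in rotation, skipping unhealthy ones."""

    def __init__(self, instances: list) -> None:
        self.instances = list(instances)
        self.healthy = set(instances)
        self._cycle = itertools.cycle(self.instances)

    def mark_down(self, instance: str) -> None:
        self.healthy.discard(instance)

    def next_instance(self) -> str:
        # Try each instance at most once per call before giving up.
        for _ in range(len(self.instances)):
            candidate = next(self._cycle)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy instances available")


lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
lb.mark_down("app-2")
picks = [lb.next_instance() for _ in range(4)]
print(picks)  # ['app-1', 'app-3', 'app-1', 'app-3']
```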