In 2024 and beyond, engineering teams face a unique convergence of pressures: faster release cycles, distributed workforces, increasingly complex tech stacks, and the rapid adoption of AI-assisted coding tools like GitHub Copilot. Amid this complexity, the tech lead has emerged as the critical role that bridges high-level engineering strategy with day-to-day delivery outcomes. Without effective technical leadership, even the most talented development teams struggle to ship quality software consistently.

This article focuses on the practical responsibilities of a technical lead within Scrum, Kanban, and SAFe-style agile environments. We’re writing from the perspective of Typo, an engineering analytics platform that works closely with VPs of Engineering, Directors, and Engineering Managers who rely on Tech Leads to translate strategy and data into working software. Our goal is to give you a concrete responsibility map for the tech lead role, along with examples of how to measure impact using engineering metrics like DORA, PR analytics, and cycle time.

Here’s what we’ll cover in this guide:

A technical lead is a senior software engineer who is accountable for the technical direction, code quality, and mentoring within their team—while still actively writing code themselves. Unlike a pure manager or architect who operates at a distance, the Tech Lead stays embedded in the codebase, participating in code reviews, pairing with developers, and making hands-on technical decisions daily.

While the Technical Lead role is not explicitly defined in Scrum, it is commonly found in many software teams. The shift from roles to accountabilities in Scrum has allowed for the integration of Technical Leads without formal recognition in the Scrum Guide.

It’s important to recognize that “Tech Lead” is a role, not necessarily a job title. In many organizations, a Staff Engineer, Principal Engineer, or even a Senior Engineer may act as the TL for a squad or pod. The responsibilities remain consistent regardless of what appears on the org chart.

How this role fits into common agile frameworks varies slightly:

Let’s be explicit about what a Tech Lead is not:

Typical characteristics of a Tech Lead include:

Tech Leads must balance hands-on engineering—often spending 40-60% of their time writing code—with technical decision-making, risk management, and quality stewardship. This section breaks down the core technical responsibilities that define the role.

A Technical Lead’s key responsibilities are to define the team’s technical approach, select tools and frameworks, establish coding standards, lead the code review process, remove technical blockers, and mentor developers. Across all of these, the Tech Lead guides architectural decisions and champions quality so the codebase stays healthy and maintainable.

Architecture and Design

The tech lead is responsible for shaping and communicating the team’s architecture, ensuring it aligns with broader platform direction and meets non-functional requirements around performance, security, and scalability. This doesn’t mean dictating every design decision from above. In self-organizing teams, architecture should emerge from collective input, with the TL facilitating discussions and providing architectural direction when the team needs guidance.

For example, consider a team migrating from a monolith to a modular services architecture over 2023-2025. The Tech Lead would define the migration strategy, establish boundaries between services, create patterns for inter-service communication, and mentor developers through the transition—all while ensuring the entire team understands the rationale and can contribute to design decisions.

Technical Decision-Making

Tech Leads own or convene decisions on frameworks, libraries, patterns, and infrastructure choices. Rather than making these calls unilaterally, effective TLs use lightweight documentation like Architecture Decision Records (ADRs) to capture context, options considered, and rationale. This creates transparency and helps developers understand why certain technical decisions were made.
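As a rough sketch of what a lightweight ADR might capture, the Python below models a record with the fields mentioned above (context, options considered, rationale) and renders it as plain text. The class name, field names, and the example decision are all hypothetical; most teams adapt an existing ADR template rather than this exact shape.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DecisionRecord:
    """Hypothetical minimal ADR: illustrative fields, not a standard schema."""
    number: int
    title: str
    status: str  # e.g. "proposed", "accepted", "superseded"
    context: str
    options: list[str]
    decision: str
    rationale: str
    decided_on: date

    def render(self) -> str:
        """Return the record as plain text suitable for a docs folder."""
        option_lines = "\n".join(f"  - {opt}" for opt in self.options)
        return (
            f"ADR-{self.number:04d}: {self.title}\n"
            f"Status: {self.status} ({self.decided_on.isoformat()})\n\n"
            f"Context:\n  {self.context}\n\n"
            f"Options considered:\n{option_lines}\n\n"
            f"Decision:\n  {self.decision}\n\n"
            f"Rationale:\n  {self.rationale}\n"
        )


# Example values are made up for illustration.
adr = DecisionRecord(
    number=7,
    title="Use a message queue for inter-service events",
    status="accepted",
    context="Services currently call each other synchronously.",
    options=["Keep synchronous HTTP calls", "Introduce a message queue"],
    decision="Introduce a message queue for non-critical events.",
    rationale="Decouples deployments and tolerates slow consumers.",
    decided_on=date(2024, 3, 1),
)
print(adr.render())
```

Keeping the record as structured data rather than free text makes it easier to list open decisions or find the ones a later decision supersedes.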

The TL acts as a feasibility expert, helping the product owner understand what’s technically possible within constraints. When a new feature request arrives, the Tech Lead can quickly assess complexity, identify risks, and suggest alternatives that achieve the same business outcome with less technical implementation effort.

Code Quality and Standards

A great tech lead sets and evolves coding standards, code review guidelines, branching strategies, and testing practices for the team. This includes defining minimum test coverage requirements, establishing CI rules that prevent broken builds from merging, and creating review checklists that ensure consistent code quality across the codebase.

Modern Tech Leads increasingly integrate AI code review tools into their workflows. Platforms like Typo can track code health over time, helping TLs identify trends in code quality, spot hotspots where defects cluster, and ensure that experienced developers and newcomers alike maintain consistent standards.

Technical Debt Management

Technical debt accumulates in every codebase. The Tech Lead’s job is to identify, quantify, and prioritize this debt in the product backlog, then negotiate with the product owner for consistent investment in paying it down. Many mature teams dedicate 10-20% of sprint capacity to technical debt reduction, infrastructure improvements, and automation.
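To make the 10-20% guideline concrete, here is a minimal sketch that turns a sprint's capacity into a reserved range for debt work. The function name and the 40-point sprint are assumptions for illustration only.

```python
def debt_capacity(sprint_points: int, low: float = 0.10, high: float = 0.20) -> tuple[int, int]:
    """Return the (min, max) story points to reserve for technical debt."""
    if not 0 <= low <= high <= 1:
        raise ValueError("expected 0 <= low <= high <= 1")
    return round(sprint_points * low), round(sprint_points * high)


# Hypothetical sprint with 40 points of capacity.
low_pts, high_pts = debt_capacity(40)
print(f"Reserve between {low_pts} and {high_pts} points for debt work")
```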

Without a TL advocating for this work, technical debt tends to accumulate until it significantly slows feature development. The Tech Lead translates technical concerns into business terms that stakeholders can understand—explaining, for example, that addressing authentication debt now will reduce security incident risk and cut feature development time by 30% in Q3.

Security and Reliability

Tech Leads partner with SRE and Security teams to ensure secure-by-default patterns, resilient architectures, and alignment with operational SLIs and SLOs. They’re responsible for ensuring the team understands security best practices, that code reviews include security considerations, and that architectural choices support reliability goals.

This responsibility extends to incident response. When production issues occur, the Tech Lead often helps identify the root cause, coordinates the technical response, and ensures the team conducts blameless postmortems that lead to genuine improvements.

Tech Leads are critical to turning product intent into working software within short iterations without burning out the team. While the development process can feel chaotic without clear technical guidance, a skilled TL creates the structure and clarity that enables consistent delivery.

Partnering with Product Owners / Product Managers

The Tech Lead works closely with the product owner during backlog refinement, helping to slice user stories into deliverable chunks, estimate technical complexity, and surface dependencies and risks early. When the Product Owner proposes a feature, the TL can quickly assess whether it’s feasible, identify technical prerequisites, and suggest acceptance criteria that ensure the implementation meets both business and technical requirements.

This partnership is collaborative, not adversarial. The Product Owner owns what gets built and in what priority; the Tech Lead ensures the team understands how to build it sustainably. Neither can write user stories effectively without input from the other.

Working with Scrum Masters / Agile Coaches

The scrum master role focuses on optimizing process and removing organizational impediments. The Tech Lead, by contrast, optimizes technical flow and removes engineering blockers. These responsibilities complement each other without overlapping.

In practice, this means the TL and Scrum Master collaborate during ceremonies. In sprint planning, the TL helps the team break down work technically while the Scrum Master ensures the process runs smoothly. In retrospectives, both surface different types of impediments: the Scrum Master might identify communication breakdowns, while the Tech Lead highlights flaky tests that slow delivery.

Sprint and Iteration Planning

The Tech Lead helps the team break down initiatives into deliverable slices, set realistic commitments based on team velocity, and avoid overcommitting. This requires understanding both the technical work involved and the team’s historical performance.

Effective TLs push back when plans are unrealistic. If leadership wants to hit an aggressive sprint goal, the Tech Lead can present data showing that the team’s average velocity makes the commitment unlikely, then propose alternatives that balance ambition with sustainability.
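One way to back that conversation with data is a simple check of a proposed commitment against recent velocity. The sketch below is illustrative: the function name, the "average plus one standard deviation" threshold, and the sample numbers are assumptions, not a standard rule.

```python
from statistics import mean, stdev


def commitment_check(past_velocities: list[int], proposed_points: int) -> dict:
    """Compare a proposed sprint commitment with historical velocity."""
    avg = mean(past_velocities)
    spread = stdev(past_velocities) if len(past_velocities) > 1 else 0.0
    return {
        "average_velocity": avg,
        "over_average_by": proposed_points - avg,
        # Assumed heuristic: anything beyond avg + one stdev is a stretch.
        "realistic": proposed_points <= avg + spread,
    }


# Made-up velocities from the last five sprints.
history = [21, 25, 19, 23, 22]
report = commitment_check(history, proposed_points=34)
print(report)
```

A result with `realistic` set to `False` is a prompt to renegotiate scope, not a verdict; the threshold should be tuned to how variable the team's sprints actually are.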

Cross-Functional Collaboration

Modern software development requires collaboration across disciplines. The Tech Lead coordinates with the UX designer on technical constraints that affect interface decisions, works with Data teams on analytics integration, partners with Security on compliance requirements, and collaborates with Operations on deployment and monitoring.

For example, launching a new AI-based recommendation engine might involve the TL coordinating across multiple teams: working with Data Science on model integration, Platform on infrastructure scaling, Security on data privacy requirements, and Product on feature rollout strategy.

Stakeholder Communication

Tech Leads translate technical trade-offs into business language for engineering managers, product leaders, and sometimes customers. When a deadline is at risk, the TL can explain why in terms stakeholders understand—not “we have flaky integration tests” but “our current automation gaps mean we need an extra week to ship with confidence.”

This communication responsibility becomes especially critical under tight deadlines. The TL serves as a bridge between the team’s technical reality and stakeholder expectations, ensuring both sides have accurate information to make good decisions.

Effective Tech Leads are multipliers. Their main leverage comes from improving the whole team’s capability, not just their own individual contributor output. A TL who ships great code but doesn’t elevate team members is only half-effective.

Mentoring and Skill Development

Tech Leads provide structured mentorship for junior and mid-level developers on the team. This includes pair programming sessions on complex problems, design review discussions that teach architectural thinking, and creating learning plans for skill gaps the team needs to close.

Mentoring isn’t just about technical skills. TLs also help developers understand how to scope work effectively, how to communicate technical concepts to non-technical stakeholders, and how to navigate ambiguity in requirements.

Feedback and Coaching

Great TLs give actionable feedback constantly—on pull requests, design documents, incident postmortems, and day-to-day interactions. The goal is continuous improvement, not criticism. Feedback should be specific (“this function could be extracted for reusability”) rather than vague (“this code needs work”).

An agile coach might help with broader process improvements, but the Tech Lead provides the technical coaching that helps individual developers grow their engineering skills. This includes answering questions thoughtfully, explaining the “why” behind recommendations, and celebrating when team members demonstrate growth.

Enabling Ownership and Autonomy

A new tech lead often makes the mistake of trying to own too much personally. Mature TLs delegate ownership of components or features to other developers, empowering them to make decisions and learn from the results. The TL’s job is to create guardrails and provide guidance, not to become a gatekeeper for every change.

This means resisting the urge to be the hero coder who solves every hard problem. Instead, the TL should ask: “Who on the team could grow by owning this challenge?” and then provide the support they need to succeed.

Psychological Safety and Culture

The Tech Lead models the culture they want to create. This includes leading blameless postmortems where the focus is on systemic improvements rather than individual blame, maintaining a respectful tone in code reviews, and ensuring all team members feel included in technical discussions.

When a junior developer makes a mistake that causes an incident, the TL’s response sets the tone for the entire team. A blame-focused response creates fear; a learning-focused response creates safety. The best TLs use failures as opportunities to improve both systems and skills.

Team Health Signals

Modern Tech Leads use engineering intelligence tools to monitor signals that indicate team well-being. Metrics like PR review wait time, cycle time, interruption frequency, and on-call burden serve as proxies for how the team is actually doing.

Platforms like Typo can surface these signals automatically, helping TLs identify when a team is heading toward burnout before it becomes a crisis. If one developer’s review wait times spike, it might indicate they’re overloaded. If cycle time increases across the board, it might signal technical debt or process problems slowing everyone down.
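For teams without such a platform, the review-wait signal can be approximated from exported PR data. This is a minimal sketch, not how Typo computes it: the `(reviewer, wait_hours)` input shape, the function name, and the 2x threshold are all assumptions.

```python
from collections import defaultdict
from statistics import median


def flag_overloaded_reviewers(reviews: list[tuple[str, float]], factor: float = 2.0) -> list[str]:
    """Flag reviewers whose median wait exceeds `factor` times the team median.

    `reviews` holds (reviewer, hours the PR waited for that reviewer).
    """
    by_reviewer = defaultdict(list)
    for reviewer, hours in reviews:
        by_reviewer[reviewer].append(hours)
    team_median = median([hours for _, hours in reviews])
    return sorted(
        name
        for name, waits in by_reviewer.items()
        if median(waits) > factor * team_median
    )


# Made-up data: "chi" is taking far longer than the rest of the team.
sample = [
    ("ana", 3), ("ana", 5),
    ("ben", 4), ("ben", 6),
    ("chi", 20), ("chi", 30),
]
print(flag_overloaded_reviewers(sample))
```

A flagged name is a cue for a supportive conversation about workload, in line with the team-health framing above, rather than a performance judgment.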

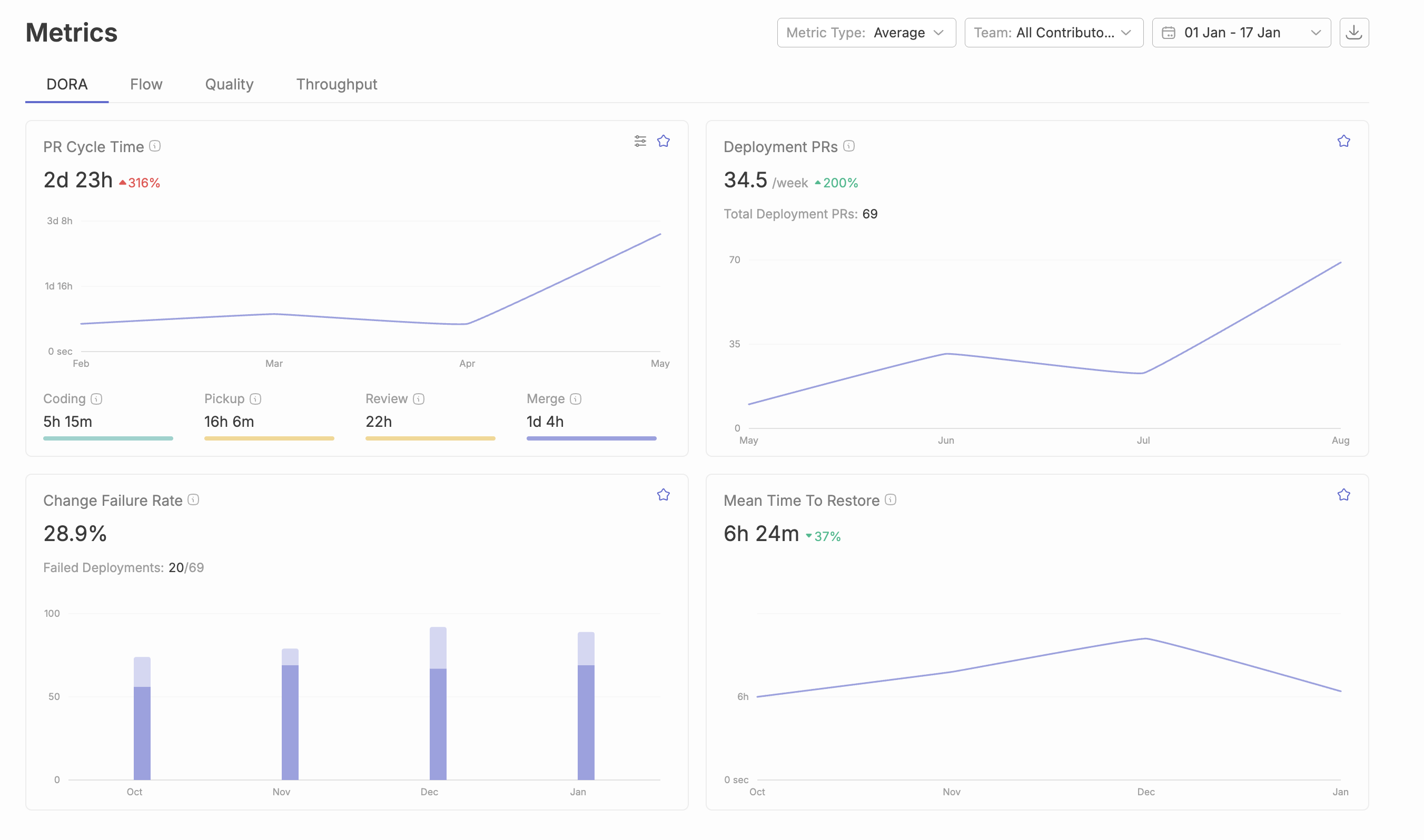

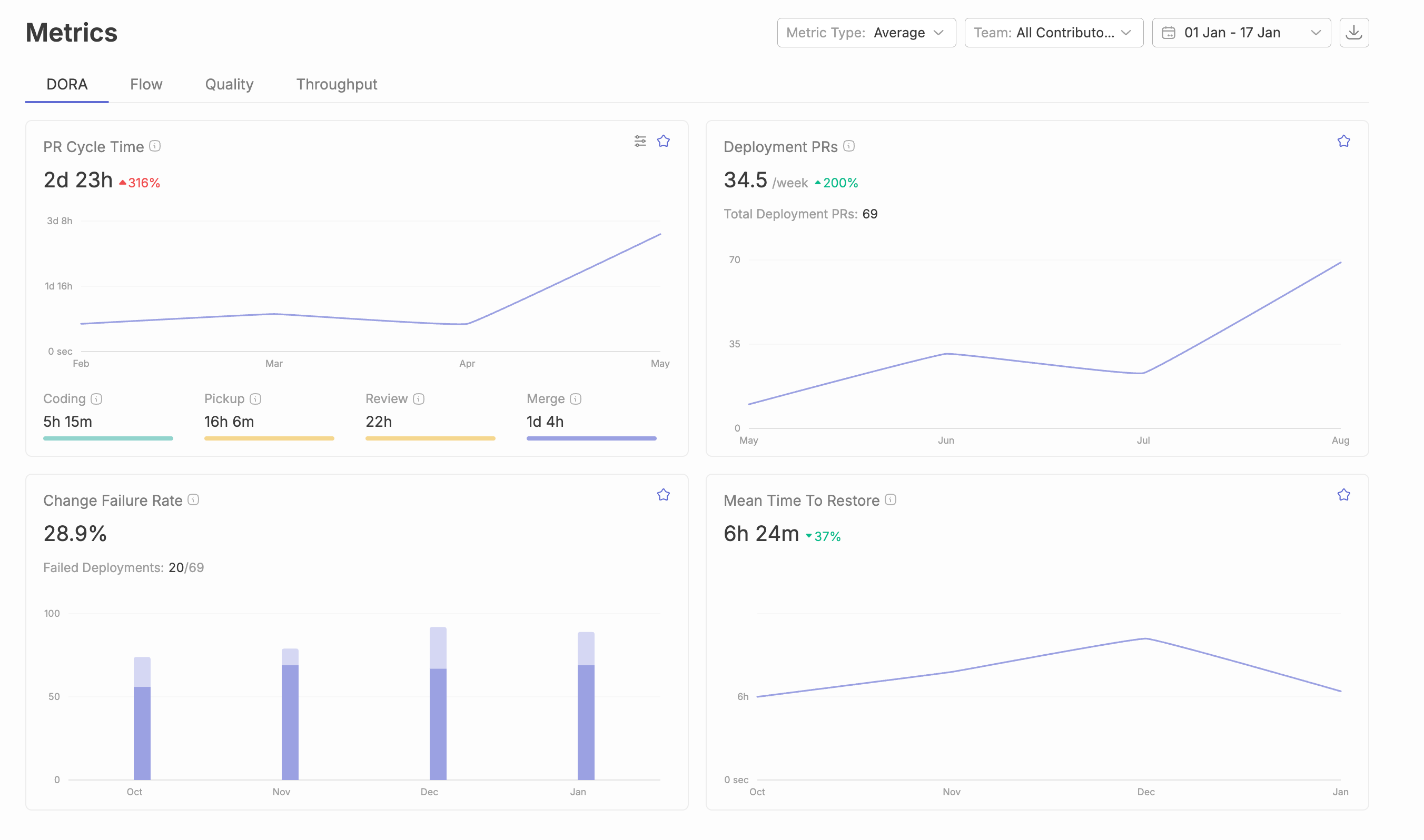

Modern Tech Leads increasingly rely on metrics to steer continuous improvement. This isn’t about micromanagement—it’s about having objective data to inform decisions, spot problems early, and demonstrate impact over time.

The shift toward data-driven technical leadership reflects a broader trend in engineering. Just as product teams use analytics to understand user behavior, engineering teams can use delivery and quality metrics to understand their own performance and identify opportunities for improvement.

Flow and Delivery Metrics

DORA metrics have become the standard for measuring software delivery performance: deployment frequency, lead time for changes, change failure rate, and time to restore service.

Beyond DORA, classic SDLC metrics like cycle time (from work started to work completed), work-in-progress limits, and throughput help TLs understand where work gets stuck and how to improve flow.
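The sketch below shows how three of these delivery measures (deployment frequency, lead time for changes, and change failure rate) could be derived from a list of deployment records. The `Deployment` shape and the sample data are hypothetical; time to restore is left out because it needs incident data that this example does not model.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    """Hypothetical record; real data would come from CI/CD and Git history."""
    first_commit_at: datetime
    deployed_at: datetime
    caused_failure: bool


def delivery_summary(deploys: list[Deployment], window_days: int) -> dict[str, float]:
    """Summarize deployment frequency, lead time, and change failure rate."""
    if not deploys or window_days <= 0:
        raise ValueError("need at least one deployment and a positive window")
    lead_hours = [
        (d.deployed_at - d.first_commit_at).total_seconds() / 3600 for d in deploys
    ]
    failures = sum(d.caused_failure for d in deploys)
    return {
        "deploys_per_week": len(deploys) / (window_days / 7),
        "median_lead_time_hours": median(lead_hours),
        "change_failure_rate": failures / len(deploys),
    }


# Made-up sample: four deployments in a 14-day window.
start = datetime(2024, 5, 1, 9, 0)
sample = [
    Deployment(start, start + timedelta(hours=24), False),
    Deployment(start + timedelta(days=3), start + timedelta(days=3, hours=48), True),
    Deployment(start + timedelta(days=7), start + timedelta(days=7, hours=12), False),
    Deployment(start + timedelta(days=10), start + timedelta(days=10, hours=36), False),
]
summary = delivery_summary(sample, window_days=14)
print(summary)
```

The median is used for lead time because a single slow change would otherwise dominate an average.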

Code-Level Metrics

Tech Leads should monitor practical signals that indicate code health:

These metrics help TLs make informed decisions about where to invest in code quality improvements. If one module shows high defect density and frequent changes, it’s a candidate for dedicated refactoring efforts.
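A simple way to shortlist refactoring candidates is to combine defect density with change frequency. The scoring formula below (defects per thousand lines multiplied by number of changes) is an assumed heuristic for illustration, and the module names and counts are invented.

```python
def rank_hotspots(modules: dict[str, dict[str, int]], top: int = 3) -> list[tuple[str, float]]:
    """Rank modules by defect density (per 1,000 lines) times change count."""
    scored = []
    for name, stats in modules.items():
        kloc = stats["lines"] / 1000
        density = stats["defects"] / kloc if kloc else 0.0
        scored.append((name, round(density * stats["changes"], 1)))
    return sorted(scored, key=lambda item: item[1], reverse=True)[:top]


# Made-up figures for one quarter.
modules = {
    "billing": {"lines": 4000, "defects": 12, "changes": 30},
    "auth": {"lines": 2000, "defects": 2, "changes": 5},
    "search": {"lines": 10000, "defects": 5, "changes": 40},
}
for name, score in rank_hotspots(modules):
    print(f"{name}: {score}")
```

In this sample the billing module ranks first because it is both defect-prone and frequently changed, which is the combination the paragraph above describes.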

Developer Experience Metrics

Engineering output depends on developer well-being. TLs should track:

These qualitative and quantitative signals help TLs understand friction in the development process that pure output metrics might miss.

How Typo Supports Tech Leads

Typo consolidates data from GitHub, GitLab, Jira, CI/CD pipelines, and AI coding tools to give Tech Leads real-time visibility into bottlenecks, quality issues, and the impact of changes. Instead of manually correlating data across tools, TLs can see the complete picture in one place.

Specific use cases include:

Data-Informed Coaching

Armed with these insights, Tech Leads can make 1:1s and retrospectives more productive. Instead of relying on gut feel, they can point to specific data: “Our cycle time increased 40% last sprint—let’s dig into why” or “PR review latency has dropped since we added a second reviewer—great job, team.”

This data-informed approach focuses conversations on systemic fixes—process, tooling, patterns—rather than blaming individuals. The goal is always continuous improvement, not surveillance.

Every Tech Lead wrestles with the classic tension: code enough to stay credible and informed, but lead enough to unblock and grow the team. There’s no universal formula, but there are patterns that help.

Time Allocation

Most Tech Leads find a 50/50 split between coding and leadership activities works as a baseline. In practice, this balance shifts constantly:

The key is intentionality. TLs should consciously decide where to invest time each week rather than just reacting to whatever’s urgent.

Avoiding Bottlenecks

Anti-patterns to watch for:

Healthy patterns include enabling multiple reviewers with merge authority, documenting decisions so other developers understand the rationale, and deliberately building shared ownership of complex systems.

Choosing What to Code

Not all coding work is equal for a Tech Lead. Prioritize:

Delegate straightforward tasks that provide good growth opportunities for other developers. The goal is maximum leverage, not maximum personal output.

Communication Rhythms

Daily and weekly practices help TLs stay connected without micromanaging:

These rhythms create structure without requiring the TL to be in every conversation.

Personal Sustainability

Tech Leads wear many hats, and it’s easy to burn out. Protect yourself by:

A burned-out TL can’t effectively lead. Sustainable pace matters for the person in the role, not just the team they lead.

The Tech Lead role looks different at a 10-person startup versus a 500-person engineering organization. Understanding how responsibilities evolve helps TLs grow their careers and helps leaders build effective, high-performing teams at scale.

From Single-Team TL to Area/Tribe Lead

As organizations grow, some Tech Leads transition from leading a single squad to coordinating multiple teams. This shift involves:

For example, a TL who led a single payments team might become a “Technical Area Lead” responsible for the entire payments domain, coordinating three squads with their own TLs.

Interaction with Staff/Principal Engineers

In larger organizations, Staff and Principal Engineers define cross-team architecture and long-term technical vision. Tech Leads collaborate with these senior ICs, implementing their guidance within their teams while providing ground-level feedback on what’s working and what isn’t.

This relationship should be collaborative, not hierarchical. The Staff Engineer brings breadth of vision; the Tech Lead brings depth of context about their specific team and domain.

Governance and Standards

As organizations scale, governance structures emerge to maintain consistency:

Tech Leads participate in and contribute to these forums, representing their team’s perspective while aligning with broader organizational direction.

Hiring and Onboarding

Tech Leads typically get involved in hiring:

Once new engineers join, the TL leads their technical onboarding—introducing them to the tech stack, codebase conventions, development practices, and ongoing projects.

Measuring Maturity

TLs can track improvement over quarters using engineering analytics. Trend lines for cycle time, defect rate, and deployment frequency show whether leadership decisions are paying off. If cycle time drops 25% over two quarters after implementing PR size limits, that’s concrete evidence of effective technical leadership.

For example, when spinning up a new AI feature squad in 2025, an organization might assign an experienced TL, then track metrics from day one to measure how quickly the team reaches productive velocity compared to previous team launches.
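The quarter-over-quarter comparison described in this section comes down to a percentage change between two periods. A minimal sketch, assuming cycle times are available as hours per work item; the function name and sample values are illustrative.

```python
from statistics import median


def percent_change(before: list[float], after: list[float]) -> float:
    """Percentage change in median cycle time between two periods."""
    baseline, current = median(before), median(after)
    return round((current - baseline) / baseline * 100, 1)


# Made-up cycle times in hours, before and after introducing PR size limits.
q1_hours = [36, 40, 44, 52, 38]
q3_hours = [28, 30, 33, 27, 31]
print(f"Median cycle time change: {percent_change(q1_hours, q3_hours)}%")
```

A negative value means cycle time fell. Comparing medians over whole quarters smooths out one-off outliers, though it still cannot prove that a single practice change caused the improvement.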

Tech Leads need clear visibility into delivery, quality, and developer experience to make better decisions. Without data, they’re operating on intuition and incomplete information. Typo provides the view that transforms guesswork into confident leadership.

SDLC Visibility

Typo connects Git, CI, and issue trackers to give Tech Leads end-to-end visibility from ticket to deployment. You can see where work is stuck—whether it’s waiting for code review, blocked by failed tests, or sitting in a deployment queue. This visibility helps TLs intervene early before small delays become major blockers.

AI Code Impact and Code Reviews

As teams adopt AI coding tools like Copilot, questions arise about impact on quality. Typo can highlight how AI-generated code affects defects, review time, and rework rates. This helps TLs tune their team’s practices—perhaps AI-generated code needs additional review scrutiny, or perhaps it’s actually reducing defects in certain areas.

Delivery Forecasting

Stop promising dates based on optimism. Typo’s delivery signals help Tech Leads provide more reliable timelines to Product and Leadership based on historical performance data. When asked “when will this epic ship?”, you can answer with confidence rooted in your team’s actual velocity.
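To illustrate forecasting from historical performance, the sketch below runs a small Monte Carlo simulation: it repeatedly samples past sprint velocities until the remaining work is done and reports percentile outcomes. This is a generic technique shown under stated assumptions, not a description of Typo's forecasting; the function name, parameters, and data are hypothetical.

```python
import random


def forecast_sprints(
    remaining_points: int,
    past_velocities: list[int],
    trials: int = 5000,
    seed: int = 42,
) -> dict[str, int]:
    """Estimate sprints needed by resampling historical velocities."""
    if not past_velocities or min(past_velocities) <= 0:
        raise ValueError("velocities must be positive")
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    outcomes = []
    for _ in range(trials):
        done, sprints = 0, 0
        while done < remaining_points:
            done += rng.choice(past_velocities)
            sprints += 1
        outcomes.append(sprints)
    outcomes.sort()
    return {
        "p50": outcomes[int(trials * 0.50)],
        "p85": outcomes[int(trials * 0.85)],
    }


# Made-up epic of 120 points and five sprints of history.
forecast = forecast_sprints(120, [21, 25, 19, 23, 22])
print(f"Likely: {forecast['p50']} sprints; conservative: {forecast['p85']} sprints")
```

Quoting the conservative (85th percentile) figure alongside the likely one gives stakeholders a range instead of a single optimistic date.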

Developer Experience Insights

Developer surveys and behavioral signals help TLs understand burnout risks, onboarding friction, and process pain points. If new engineers are taking twice as long as expected to reach full productivity, that’s a signal to invest in better documentation or mentoring practices.

If you’re a Tech Lead or engineering leader looking to improve your team’s delivery speed and quality, Typo can give you the visibility you need. Start a free trial to see how engineering analytics can amplify your technical leadership—or book a demo to explore how Typo fits your team’s specific needs.

The tech lead role sits at the intersection of deep technical expertise and team leadership. In agile environments, this means balancing hands-on engineering with mentoring, architecture with collaboration, and personal contribution with team multiplication.

With clear responsibilities, the right practices, and data-driven visibility into delivery and quality, Tech Leads become the force multipliers that turn engineering strategy into shipped software. The teams that invest in strong technical leadership—and give their TLs the tools to see what’s actually happening—consistently outperform those that don’t.

Generative AI for engineering represents a fundamental shift in how engineers approach code development, system design, and technical problem-solving. Unlike traditional automation tools that follow predefined rules, generative AI tools leverage large language models to create original code snippets, design solutions, and technical documentation from natural language prompts. This technology is transforming software development and engineering workflows across disciplines, enabling teams to generate code, automate repetitive tasks, and accelerate delivery cycles at unprecedented scale.

Features such as AI assistants and AI chat are now central to these tools. AI assistants can improve productivity by offering modular code solutions, while AI chat provides conversational, inline help with debugging, code refactoring, and interactive queries.

This guide covers generative AI applications across software engineering, mechanical design, electrical systems, civil engineering, and cross-disciplinary implementations. The content is designed for engineering leaders, development teams, and technical professionals seeking to understand how AI coding tools integrate with existing workflows and improve developer productivity. Many AI coding assistants integrate with popular IDEs to streamline the development process. Whether you’re evaluating your first AI coding assistant or scaling enterprise-wide adoption, this resource provides practical frameworks for implementation and measurement.

What is generative AI for engineering?

It encompasses AI systems that create functional code, designs, documentation, and engineering solutions from natural language prompts and technical requirements—serving as a collaborative partner that handles execution while engineers focus on strategic direction and complex problem-solving. AI coding assistants can be beneficial for both experienced developers and those new to programming.

By the end of this guide, you will understand:

Generative AI can boost coding productivity by up to 55%, and developers can complete tasks up to twice as fast with generative AI assistance.

Generative AI refers to artificial intelligence systems that create new content—code, designs, text, or other outputs—based on patterns learned from training data. Generative AI models are built using machine learning techniques and are often trained on publicly available code, enabling them to generate relevant and efficient code snippets. For engineering teams, this means AI models that understand programming languages, engineering principles, and technical documentation well enough to generate accurate code suggestions, complete functions, and solve complex programming tasks through natural language interaction.

The distinction from traditional engineering automation is significant. Conventional tools execute predefined scripts or follow rule-based logic. Generative AI tools interpret context, understand intent, and produce original solutions. Most AI coding tools support many programming languages, making them versatile for different engineering teams. When you describe a problem in plain English, these AI systems generate code based on that description, adapting to your project context and coding patterns.

Artificial intelligence (AI) is a broad field dedicated to building systems that can perform tasks typically requiring human intelligence, such as learning, reasoning, and decision-making. Within this expansive domain, generative AI stands out as a specialized subset focused on creating new content—whether that’s text, images, or, crucially for engineers, code.

Generative AI tools leverage advanced machine learning techniques and large language models to generate code snippets, automate code refactoring, and enhance code quality based on natural language prompts or technical requirements. While traditional AI might classify data or make predictions, generative AI goes a step further by producing original outputs that can be directly integrated into the software development process.

In practical terms, this means that generative AI can generate code, suggest improvements, and even automate documentation, all by understanding the context and intent behind a developer’s request. The relationship between AI and generative AI is thus one of hierarchy: generative AI is a powerful application of artificial intelligence, using the latest advances in large language models and machine learning to transform how engineers and developers approach code generation and software development.

In software development, generative AI applications have achieved immediate practical impact. AI coding tools now generate code, perform code refactoring, and provide intelligent suggestions directly within integrated development environments like Visual Studio Code. These tools help developers write code more efficiently by offering relevant suggestions and real-time feedback as they work. These capabilities extend across multiple programming languages, from Python code to JavaScript, Java, and beyond.

The integration with software development process tools creates compounding benefits. When generative AI connects with engineering analytics platforms, teams gain visibility into how AI-generated code affects delivery metrics, code quality, and technical debt accumulation. AI coding tools can also automate documentation generation, enhancing code maintainability and reducing manual effort. This connection between code generation and engineering intelligence enables data-driven decisions about AI tool adoption and optimization.

Modern AI coding assistant implementations go beyond simple code completion. They analyze pull requests, suggest bug fixes, identify security vulnerabilities, and recommend code optimization strategies. These assistants help with error detection and can analyze complex functions within code to improve quality and maintainability. Some AI coding assistants, such as Codex, can operate within secure, sandboxed environments without requiring internet access, which enhances safety and security for sensitive projects. Developers can use AI tools by following prompt-based workflows to generate code snippets in many programming languages, streamlining the process of writing and managing code. The shift is from manual coding process execution to AI-augmented development where engineers direct and refine rather than write every line.

AI coding tools can integrate with popular IDEs to streamline the development workflow, making it easier for teams to adopt and benefit from these technologies. Generative AI is transforming the process of developing software by automating and optimizing various stages of the software development lifecycle.

Beyond software, generative AI transforms how engineers approach CAD model generation, structural analysis, and product design. Rather than manually iterating through design variations, engineers can describe requirements in natural language and receive generated design alternatives that meet specified constraints.

This capability accelerates the design cycle significantly. Where traditional design workflows required engineers to manually model each iteration, AI systems now generate multiple viable options for human evaluation. The engineer’s role shifts toward defining requirements clearly, evaluating AI-generated options critically, and applying human expertise to select and refine optimal solutions.

Technical documentation represents one of the highest-impact applications for generative AI in engineering. AI systems now generate specification documents, API documentation, and knowledge base articles from code analysis and natural language prompts. This automation addresses a persistent bottleneck—documentation that lags behind code development.

The knowledge extraction capabilities extend to existing codebases. AI tools analyze code to generate explanatory documentation, identify undocumented dependencies, and create onboarding materials for new team members. This represents a shift from documentation as afterthought to documentation as automated, continuously updated output.

These foundational capabilities—code generation, design automation, and documentation—provide the building blocks for discipline-specific applications across engineering domains.

Generative AI is rapidly transforming engineering by streamlining the software development process, boosting productivity, and elevating code quality. By integrating generative AI tools into their workflows, engineers can automate repetitive tasks such as code formatting, code optimization, and documentation, freeing up time for more complex and creative problem-solving.

One of the standout benefits is the ability to receive accurate code suggestions in real time, which not only accelerates development but also helps maintain high code quality standards. Generative AI tools can proactively detect security vulnerabilities and provide actionable feedback, reducing the risk of costly errors. As a result, teams can focus on innovation and strategic initiatives, while the AI handles routine aspects of the development process. This shift leads to more efficient, secure, and maintainable software, ultimately driving better outcomes for engineering organizations.

Generative AI dramatically enhances productivity and efficiency in software development by automating time-consuming tasks such as code completion, code refactoring, and bug fixes. AI coding assistants like GitHub Copilot and Tabnine deliver real-time code suggestions, allowing developers to write code faster and with fewer errors. These generative AI tools can also automate testing and validation, helping ensure that code meets quality standards before it’s deployed.

By streamlining the coding process and reducing manual effort, generative AI enables developers to focus on higher-level design and problem-solving. The result is a more efficient development process, faster delivery cycles, and improved code quality across projects.

Generative AI is not just about automation; it’s also a catalyst for innovation and creativity in software development. By generating new code snippets and suggesting alternative solutions to complex challenges, generative AI tools empower developers to explore fresh ideas and approaches they might not have considered otherwise.

These tools can also help developers experiment with new programming languages and frameworks, broadening their technical expertise and encouraging continuous learning. By providing a steady stream of creative input and relevant suggestions, generative AI fosters a culture of experimentation and growth, driving both individual and team innovation.

Building on these foundational capabilities, generative AI manifests differently across engineering specializations. Each discipline leverages the core technology—large language models processing natural language prompts to generate relevant output—but applies it to domain-specific challenges and workflows.

Software developers experience the most direct impact from generative AI adoption. AI-powered code reviews now identify issues that human reviewers might miss, analyzing code patterns across multiple files and flagging potential security vulnerabilities, error handling gaps, and performance concerns. These reviews happen automatically within CI/CD pipelines, providing feedback before code reaches production.

The integration with engineering intelligence platforms creates closed-loop improvement. When AI coding tools connect to delivery metrics systems, teams can measure how AI-generated code affects deployment frequency, lead time, and failure rates. This visibility enables continuous optimization of AI tool configuration and usage patterns.

Pull request analysis represents a specific high-value application. AI systems summarize changes, identify potential impacts on dependent systems, and suggest relevant reviewers based on code ownership patterns. For development teams managing high pull request volumes, this automation reduces review cycle time while improving coverage. Developer experience improves as engineers spend less time on administrative review tasks and more time on substantive technical discussion.
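As a rough illustration of ownership-based reviewer suggestion, the sketch below ranks candidates by how often they authored past changes to the files a pull request touches. The `history` shape, the names, and the file paths are all hypothetical; a real tool would likely read this from version control and may weigh richer signals.

```python
from collections import Counter


def suggest_reviewers(history, changed_files, pr_author, top_n=2):
    """Rank candidate reviewers by how often they touched the changed files.

    history: iterable of (author, file_path) pairs from past commits
             (hypothetical shape, for illustration only).
    """
    changed = set(changed_files)
    touches = Counter(
        author
        for author, path in history
        if path in changed and author != pr_author  # never suggest the PR author
    )
    # Sort by touch count (descending), then by name for a stable order.
    ranked = sorted(touches.items(), key=lambda item: (-item[1], item[0]))
    return [author for author, _ in ranked[:top_n]]


# Hypothetical commit history.
history = [
    ("ana", "billing/invoice.py"),
    ("ana", "billing/invoice.py"),
    ("ben", "billing/invoice.py"),
    ("cho", "billing/tax.py"),
    ("ben", "search/index.py"),
]
print(suggest_reviewers(history, ["billing/invoice.py", "billing/tax.py"], pr_author="cho"))
# ['ana', 'ben']
```

Counting authorship is only a proxy for ownership; teams with a CODEOWNERS-style file might prefer that as the primary signal.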

Automated testing benefits similarly from generative AI. AI systems generate test plans based on code changes, identify gaps in test coverage, and suggest test cases that exercise edge conditions. This capability for improving test coverage addresses a persistent challenge—comprehensive testing that keeps pace with rapid development.

Adopting generative AI tools in software development can dramatically boost coding efficiency, accelerate code generation, and enhance developer productivity. However, to fully realize these benefits and avoid common pitfalls, it’s essential to follow a set of best practices tailored to the unique capabilities and challenges of AI-powered development.

Before integrating generative AI into your workflow, establish clear goals for what you want to achieve—whether it’s faster code generation, improved code quality, or automating repetitive programming tasks. Well-defined objectives help you select the right AI tool and measure its impact on your software development process.

Select generative AI tools that align with your project’s requirements and support your preferred programming languages. Consider factors such as compatibility with code editors like Visual Studio Code, the accuracy of code suggestions, and the tool’s ability to integrate with your existing development environment. Evaluate whether the AI tool offers features like code formatting, code refactoring, and support for multiple programming languages to maximize its utility.

The effectiveness of AI models depends heavily on the quality of their training data. Ensure that your AI coding assistant is trained on relevant, accurate, and up

Engineering teams implementing generative AI encounter predictable challenges. Addressing these proactively improves adoption success and long-term value realization.

AI-generated code, while often functional, can introduce subtle quality issues that accumulate into technical debt. The solution combines automated quality gates with enhanced visibility.

Integrate AI code review tools that specifically analyze AI-generated code against your organization’s quality standards. Platforms providing engineering analytics should track technical debt metrics before and after AI tool adoption, enabling early detection of quality degradation. Establish human review requirements for all AI-generated code affecting critical systems or security-sensitive components.
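A human-review requirement like this can be expressed as a small gate in the merge pipeline. The following is a minimal sketch, assuming the pipeline already knows whether a change is AI-generated (for example through a PR label) and that critical areas can be described by path patterns; the patterns and function name are hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical path patterns for critical or security-sensitive code.
CRITICAL_PATTERNS = ["auth/*", "payments/*", "*/secrets/*"]


def requires_human_review(changed_files, ai_generated, patterns=CRITICAL_PATTERNS):
    """Return True when an AI-generated change touches a critical path."""
    if not ai_generated:
        return False  # this gate only covers AI-generated changes
    return any(
        fnmatchcase(path, pattern)
        for path in changed_files
        for pattern in patterns
    )


print(requires_human_review(["auth/login.py", "docs/readme.md"], ai_generated=True))  # True
print(requires_human_review(["docs/readme.md"], ai_generated=True))                   # False
```

Keeping the patterns in one list makes the policy easy to review alongside the code it protects.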

Seamless workflow integration determines whether teams actively use AI tools or abandon them after initial experimentation.

Select tools with native integration for your Git workflows, CI/CD pipelines, and project management systems. Avoid tools requiring engineers to context-switch between their primary development environment and separate AI interfaces. The best AI tools embed directly where developers work—within VS Code, within pull request interfaces, within documentation platforms—rather than requiring separate application access.

Measure adoption through actual usage data rather than license counts. Engineering intelligence platforms can track AI tool engagement alongside traditional productivity metrics, identifying integration friction points that reduce adoption.
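To make the difference between license counts and real usage concrete, here is a small sketch that computes the share of licensed users who actually used a tool on several distinct days. The event shape, the user names, and the three-day threshold are assumptions chosen for illustration.

```python
def adoption_rate(licensed_users, usage_events, min_active_days=3):
    """Share of licensed users active on at least `min_active_days` distinct days.

    usage_events: iterable of (user, day) pairs (hypothetical shape).
    """
    days_by_user = {}
    for user, day in usage_events:
        days_by_user.setdefault(user, set()).add(day)
    active = {
        user
        for user in licensed_users
        if len(days_by_user.get(user, ())) >= min_active_days
    }
    return len(active) / len(licensed_users) if licensed_users else 0.0


licensed = {"ana", "ben", "cho", "dev"}
events = [
    ("ana", "2025-03-03"), ("ana", "2025-03-04"), ("ana", "2025-03-05"),
    ("ben", "2025-03-03"),
    ("cho", "2025-03-03"), ("cho", "2025-03-03"),  # same day counted once
    ("cho", "2025-03-06"), ("cho", "2025-03-07"),
]
print(adoption_rate(licensed, events))  # 0.5
```

Four licenses are assigned here, but only two users meet the activity threshold, so the adoption rate is 50% rather than 100%.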

Technical implementation succeeds or fails based on team adoption. Engineers accustomed to writing code directly may resist AI-assisted approaches, particularly if they perceive AI tools as threatening their expertise or autonomy.

Address this through transparency about AI’s role as augmentation rather than replacement. Share data showing how AI handles repetitive tasks while freeing engineers for complex problem-solving requiring critical thinking and human expertise. Celebrate examples where AI-assisted development produced better outcomes faster.

Measure developer experience impacts directly. Survey teams on satisfaction with AI tools, identify pain points, and address them promptly. Track whether AI adoption correlates with improved or degraded engineering velocity and quality metrics.

The adoption challenge connects directly to the broader organizational transformation that generative AI enables, including the integration of development experience tools.

Generative AI is revolutionizing the code review process by delivering automated, intelligent feedback powered by large language models and machine learning. Generative AI tools can analyze code for quality, security vulnerabilities, and performance issues, providing developers with real-time suggestions and actionable insights.

This AI-driven approach ensures that code reviews are thorough and consistent, catching issues that might be missed in manual reviews. By automating much of the review process, generative AI not only improves code quality but also accelerates the development workflow, allowing teams to deliver robust, secure software more efficiently. As a result, organizations benefit from higher-quality codebases and reduced risk, all while freeing up developers to focus on more strategic tasks.

Generative AI for engineering represents not a future possibility but a present reality reshaping how engineering teams operate. The technology has matured from experimental capability to production infrastructure, with mature organizations treating prompt engineering and AI integration as core competencies rather than optional enhancements.

The most successful implementations share common characteristics: clear baseline metrics enabling impact measurement, deliberate pilot programs generating organizational learning, quality gates ensuring AI augments rather than degrades engineering standards, and continuous improvement processes optimizing tool usage over time.

To begin your generative AI implementation:

For organizations seeking deeper understanding, related topics warrant exploration: DORA metrics frameworks for measuring engineering effectiveness, developer productivity measurement approaches, and methodologies for tracking AI impact on engineering outcomes over time.

Engineering metrics frameworks for measuring AI impact:

Integration considerations for popular engineering tools:

Key capabilities to evaluate in AI coding tools:

For developers and teams focused on optimizing software delivery, it's also valuable to explore the best CI/CD tools.

Software productivity is under more scrutiny than ever. After the 2022–2024 downturn, CTOs and VPs of Engineering face constant pressure from CEOs and CFOs to prove that engineering spend translates into real business value. This article is for engineering leaders, managers, and teams seeking to understand and improve the productivity of software development. Understanding software productivity is critical for aligning engineering efforts with business outcomes in today's competitive landscape. The question isn’t whether your team is busy; it’s whether the software your organization produces actually moves the needle.

Measuring developer productivity is a complex process that goes far beyond simple output metrics. Developer productivity is closely linked to the overall success of software development teams and the viability of the business.

This article answers how to measure and improve software productivity using concrete frameworks like DORA metrics, SPACE, and DevEx, while accounting for the AI transformation reshaping how developers work. Many organizations, including leading tech companies such as Meta and Uber, struggle to connect the creative and collaborative work of software developers to tangible business outcomes. We’ll focus on team-level and system-level productivity, tying software delivery directly to business outcomes like feature throughput, reliability, and revenue impact. Throughout, we’ll show how engineering intelligence platforms like Typo help mid-market and enterprise teams unify SDLC data and surface real-time productivity signals.

As an example of how industry leaders are addressing these challenges, Microsoft created the Developer Velocity Assessment tool, which reports a Developer Velocity Index (DVI) score, to help organizations measure and improve developer productivity by focusing on internal processes, tools, culture, and talent management.

When we talk about productivity of software, we’re not counting keystrokes or commits. We’re asking: how effectively does an engineering org convert time, tools, and talent into reliable, high-impact software in production?

This distinction matters because naive metrics create perverse incentives. Measuring developer productivity by lines of code rewards verbosity, not value. Senior engineering leaders learned this lesson decades ago, yet the instinct to count output persists.

Here’s a clearer way to think about it:

Naive Metrics vs. Outcome-Focused Metrics:

Productive software systems share common characteristics: fast feedback loops, low friction in the software development process, and stable, maintainable codebases. Software productivity is emergent from process, tooling, culture, and now AI assistance—not reducible to a single metric.

Understanding the value cycle transforms how engineering managers think about measuring productivity. Let’s walk through a concrete example.

Imagine a software development team at a B2B SaaS company shipping a usage-based billing feature targeted for Q3 2025. Here’s how value flows through the system:

Software developers are key contributors at each stage of the value cycle, and their productivity should be measured in terms of meaningful outcomes and impact, not just effort or raw output.

Effort Stage:

Output Stage:

Outcome Stage:

Impact Stage:

Measuring productivity of software means instrumenting each stage—but decision-making should prioritize outcomes and impact. Your team can ship 100 features that nobody uses, and that’s not productivity—that’s waste.

Typo connects these layers by correlating SDLC events (PRs, deployments, incidents) with delivery timelines and user-facing milestones. This lets engineering leaders track progress from code commit to business impact without building custom dashboards from scratch.

Effective measurement of developer productivity requires a balanced approach that includes both qualitative and quantitative metrics. Qualitative metrics provide insights into developer experience and satisfaction, while quantitative metrics capture measurable outputs such as deployment frequency and cycle time.

Every VP of Engineering has felt this frustration: the CEO asks for a simple metric showing whether engineering is “productive,” and there’s no honest, single answer.

Here’s why measuring productivity is uniquely difficult for software engineering teams:

The creativity factor makes output deceptive. A complex refactor or bug fix in 50 lines can be more valuable than adding 5,000 lines of new code. A developer who spends three days understanding a system failure before writing a single line may be the most productive developer that week. Traditional quantitative metrics miss this entirely.

Collaboration blurs individual contribution. Pair programming, architectural decisions, mentoring junior developers, and incident response often don’t show up cleanly in version control systems. The developer who enables developers across three teams to ship faster may have zero PRs that sprint.

Cross-team dependencies distort team-level metrics. In modern microservice and platform setups, the front-end team might be blocked for two weeks waiting on platform migrations. Their cycle time looks terrible, but the bottleneck lives elsewhere. System metrics without context mislead.

AI tools change the shape of output. With GitHub Copilot, Amazon CodeWhisperer, and internal LLMs, the relationship between effort and output is shifting. Fewer keystrokes produce more functionality. Output-only productivity measurement becomes misleading when AI tools influence productivity in ways raw commit counts can’t capture.

Naive metrics create gaming and fear. When individual developers know they’re ranked by PRs per week, they optimize for quantity over quality. The result is inflated PR counts, fragmented commits, and a culture where team members game the system instead of building software that matters.

Well-designed productivity metrics surface bottlenecks and enable healthier, more productive systems. Poorly designed ones destroy trust.

Several frameworks have emerged to help engineering teams measure development productivity without falling into the lines of code trap. Each captures something valuable—and each has blind spots. These frameworks aim to measure software engineering productivity by assessing efficiency, effectiveness, and impact across multiple dimensions.

DORA Metrics (2014–2021, State of DevOps Reports)

DORA metrics remain the gold standard for measuring delivery performance across software engineering organizations. The four key indicators are deployment frequency, lead time for changes, change failure rate, and time to restore service.

The 2019 State of DevOps research found that elite performers, about 20% of surveyed organizations, deploy 208 times more frequently and have 106 times faster lead times than low performers. DORA metrics measure delivery performance and stability, not individual performance.
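For teams that want to see how these indicators fall out of raw delivery data, the sketch below computes deployment frequency, lead time for changes, change failure rate, and time to restore from a list of deployment records. The `Deployment` record and its field names are hypothetical, and the use of medians is one reasonable choice among several, not a prescribed DORA calculation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime                  # first commit of the change
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # caused a production failure?
    restored_at: Optional[datetime] = None  # when service was restored


def dora_summary(deployments, period_days):
    """Team-level DORA-style summary over a reporting period."""
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    restore_times = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deploys_per_day": len(deployments) / period_days,
        "median_lead_time": median(lead_times) if lead_times else None,
        "change_failure_rate": len(failures) / len(deployments) if deployments else None,
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }


# Hypothetical data: four deployments over a two-day period.
t0 = datetime(2025, 1, 6, 9, 0)
hours = lambda n: timedelta(hours=n)
deployments = [
    Deployment(t0, t0 + hours(2)),
    Deployment(t0, t0 + hours(4)),
    Deployment(t0, t0 + hours(6), failed=True, restored_at=t0 + hours(6) + timedelta(minutes=30)),
    Deployment(t0, t0 + hours(8)),
]
summary = dora_summary(deployments, period_days=2)
print(summary["deploys_per_day"])      # 2.0
print(summary["median_lead_time"])     # 5:00:00
print(summary["change_failure_rate"])  # 0.25
```

The summary is deliberately computed per team and period, in line with the point that these metrics describe delivery performance rather than individuals.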

Typo uses DORA-style metrics as baseline health indicators across repos and services, giving engineering leaders a starting point for understanding overall engineering productivity.

SPACE Framework (Microsoft/GitHub, 2021)

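SPACE legitimized measuring developer experience and collaboration as core components of productivity. The five dimensions are Satisfaction and well-being, Performance, Activity, Communication and collaboration, and Efficiency and flow.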

SPACE acknowledges that developer sentiment matters and that qualitative metrics belong alongside quantitative ones.

DX Core 4 Framework

The DX Core 4 framework unifies DORA, SPACE, and Developer Experience into four dimensions: speed, effectiveness, quality, and business impact. This approach provides a comprehensive view of software engineering productivity by integrating the strengths of each framework.

DevEx / Developer Experience

DevEx encompasses the tooling, process, documentation, and culture shaping day-to-day development work. Companies like Google, Microsoft, and Shopify now have dedicated engineering productivity or DevEx teams specifically focused on making developers’ work more effective. The Developer Experience Index (DXI) is a validated measure that captures key engineering performance drivers.

Key DevEx signals include build times, test reliability, deployment friction, code review turnaround, and documentation quality. When DevEx is poor, even talented teams struggle to ship.

Value Stream & Flow Metrics

Flow metrics help pinpoint where value gets stuck between idea and production:

High WIP correlates strongly with context switching and elongated cycle times. Teams juggling too many items dilute focus and slow delivery.
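The link between WIP and cycle time can be illustrated with Little's Law, which states that in a stable system average cycle time equals average WIP divided by average throughput. The numbers below are hypothetical, and real teams rarely satisfy the stability assumption exactly, so treat the result as a rough guide rather than a forecast.

```python
def average_cycle_time(avg_wip, throughput_per_week):
    """Little's Law: average cycle time = average WIP / average throughput."""
    if throughput_per_week <= 0:
        raise ValueError("throughput must be positive")
    return avg_wip / throughput_per_week


# A team finishing 4 items per week with 12 items in progress...
print(average_cycle_time(12, 4))  # 3.0 (weeks)
# ...versus the same throughput with WIP limited to 6.
print(average_cycle_time(6, 4))   # 1.5 (weeks)
```

With throughput held constant, halving WIP halves the average time an item spends in progress.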

Typo combines elements of DORA, SPACE, and flow into a practical engineering intelligence layer—rather than forcing teams to choose one framework and ignore the others.

Before diving into effective measurement, let’s be clear about what destroys trust and distorts behavior.

Lines of code and commit counts reward noise, not value.

LOC and raw commit counts incentivize verbosity. A developer who deletes 10,000 lines of dead code improves system health and reduces tech debt—but “scores” negatively on LOC metrics. A developer who writes bloated, copy-pasted implementations looks like a star. This is backwards.

Per-developer output rankings create toxic dynamics.

Leaderboard dashboards ranking individual developers by PRs or story points damage team dynamics and encourage gaming. They also create legal and HR risks—bias and misuse concerns increasingly push organizations away from individual productivity scoring.

Ranking individual developers by output metrics is the fastest way to destroy the collaboration that makes the most productive teams effective.

Story points and velocity aren’t performance metrics.

Story points are a planning tool, helping teams forecast capacity. They were never designed as a proxy for business value or individual performance. When velocity gets tied to performance reviews, teams inflate estimates. A team “completing” 80 points per sprint instead of 40 isn’t twice as productive—they’ve just learned to game the system.

Time tracking and “100% utilization” undermine creative work.

Measuring keystrokes, active windows, or demanding 100% utilization treats software development like assembly line work. It undermines trust and reduces the creative problem-solving that building software requires. Sustainable software productivity requires slack for learning, design, and maintenance.

Single-metric obsession creates blind spots.

Optimizing only for deployment frequency while ignoring change failure rate leads to fast, broken releases. Obsessing over throughput while ignoring developer sentiment leads to burnout. Metrics measured in isolation mislead.

Here’s a practical playbook engineering leaders can follow to measure software developer productivity without falling into anti-patterns.

Start by clarifying objectives with executives.

Establish baseline SDLC visibility.

Layer on DORA and flow metrics.

Include developer experience signals.

Correlate engineering metrics with product and business outcomes.

Typo does most of this integration automatically, surfacing key delivery signals and DevEx trends so leaders can focus on decisions, not pipeline plumbing.

In the world of software development, the productivity of engineering teams hinges not just on tools and processes, but on the strength of collaboration and the human connections within the team. Measuring developer productivity goes far beyond tracking lines of code or counting pull requests; it requires a holistic view that recognizes the essential role of teamwork, communication, and shared ownership in the software development process.

Effective collaboration among team members is a cornerstone of high-performing software engineering teams. When developers work together seamlessly—sharing knowledge, reviewing code, and solving problems collectively—they drive better code quality, reduce technical debt, and accelerate the delivery of business value. The most productive teams are those that foster open communication, trust, and a sense of shared purpose, enabling each individual to contribute their best work while supporting the success of the entire team.

To accurately measure software developer productivity, engineering leaders must look beyond traditional quantitative metrics. While DORA metrics such as deployment frequency, lead time, and change failure rate provide valuable insights into the development process, they only tell part of the story. Complementing these with qualitative metrics, like developer sentiment, team performance, and self-reported data, offers a more complete picture of productivity outcomes. For example, regular feedback surveys can surface hidden bottlenecks, highlight areas for improvement, and reveal how team members feel about their work environment and the development process.

Engineering managers play a pivotal role in influencing productivity by creating an environment that empowers developers. This means providing the right tools, removing obstacles, and supporting continuous improvement. Prioritizing developer experience and well-being not only improves overall engineering productivity but also reduces turnover and increases the business value delivered by the software development team.

Balancing individual performance with team collaboration is key. While it’s important to recognize and reward outstanding contributions, the most productive teams are those where success is shared and collective ownership is encouraged. By tracking both quantitative metrics (like deployment frequency and lead time) and qualitative insights (such as code quality and developer sentiment), organizations can make data-driven decisions to optimize their development process and drive better business outcomes.

Self-reported data from developers is especially valuable for understanding the human side of productivity. By regularly collecting feedback and analyzing sentiment, engineering leaders can identify pain points, address challenges, and create a more positive and productive work environment. This human-centered approach not only improves developer satisfaction but also leads to higher quality software and more successful business outcomes.

Ultimately, fostering a culture of collaboration, open communication, and continuous improvement is essential for unlocking the full potential of engineering teams. By valuing the human factor in productivity and leveraging both quantitative and qualitative metrics, organizations can build more productive teams, deliver greater business value, and stay competitive in the fast-paced world of software development.

The 2023–2026 AI inflection—driven by Copilot, Claude, and internal LLMs—is fundamentally changing what software developer productivity looks like. Engineering leaders need new approaches to understand AI’s impact.

How AI coding tools change observable behavior:

Practical AI impact metrics to track:

Keep AI metrics team-level, not individual.

Avoid attaching “AI bonus” scoring or rankings to individual developers. The goal is understanding system improvements and establishing guardrails—not creating new leaderboards.

Concrete example: A team introducing Copilot in 2024

One engineering team tracked their AI tool adoption through Typo after introducing Copilot. They observed 15–20% faster cycle times within the first quarter. However, code quality signals initially dipped—more PRs required multiple review rounds, and change failure rate crept up 3%.

The team responded by introducing additional static analysis rules and AI-specific code review guidelines. Within two months, quality stabilized while throughput gains held. This is the pattern: AI tools can dramatically improve developer velocity, but only when paired with quality guardrails.

Typo tracks AI-related signals—PRs with AI review suggestions, patterns in AI-assisted changes—and correlates them with delivery and quality over time.

Understanding metrics is step one. Actually improving the productivity of software requires targeted interventions tied back to those metrics. To improve developer productivity, organizations should adopt strategies and frameworks—such as flow metrics and holistic approaches—that systematically enhance engineering efficiency.

Reduce cycle time by fixing review and CI bottlenecks.

Invest in platform engineering and internal tooling.

Systematically manage technical debt.

Improve documentation and knowledge sharing.

Streamline processes and workflows.

Protect focus time and reduce interruption load.

Typo validates which interventions move the needle by comparing before/after trends in cycle time, DORA metrics, DevEx scores, and incident rates. Continuous improvement requires closing the feedback loop between action and measurement.
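A before/after comparison of this kind can be approximated by hand with a few lines of code. The sketch below compares median PR cycle time before and after an intervention; the sample values are hypothetical, and this is a generic illustration rather than a description of how any particular platform computes its trends.

```python
from statistics import median


def percent_change(before, after):
    """Percent change in the median from the 'before' sample to the 'after' sample."""
    base = median(before)
    if base == 0:
        raise ValueError("baseline median is zero")
    return (median(after) - base) / base * 100


# Hypothetical PR cycle times in hours, before and after a review-process change.
cycle_before = [50, 40, 60, 45, 55]
cycle_after = [40, 35, 45, 38, 42]
print(round(percent_change(cycle_before, cycle_after), 1))  # -20.0
```

Medians are used instead of means so that a single unusually slow pull request does not dominate the comparison.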

Software is produced by teams, not isolated individuals. Architecture decisions, code reviews, pair programming, and on-call rotations blur individual ownership of output. Trying to measure individual performance through system metrics creates more problems than it solves. Measuring and improving the team's productivity is essential for enhancing overall team performance and identifying opportunities for continuous improvement.

Focus measurement at the squad or stream-aligned team level:

How managers can use team-level data effectively:

The entire team succeeds or struggles together. Metrics should reflect that reality.

Typo’s dashboards are intentionally oriented around teams, repos, and services—helping leaders avoid the per-engineer ranking traps that damage trust and distort behavior.

Typo is an AI-powered engineering intelligence platform designed to make productivity measurement practical, not theoretical.

Unified SDLC visibility:

Real-time delivery and quality signals:

AI-based code review and delivery insights:

Developer experience and AI impact capabilities:

Typo exists to help engineering leaders answer the question: “Is our software development team getting more effective over time, and where should we invest next?”

Here’s a concrete roadmap to operationalize everything in this article.

Sustainable productivity of software is about building a measurable, continuously improving system—not surveilling individuals. The goal is enabling engineering teams to ship faster, with higher quality, and with less friction. Typo exists to make that shift easier and faster.

Start your free trial today to see how your engineering organization’s productivity signals compare—and where you can improve next.

SPACE metrics are a multi-dimensional measurement framework that evaluates developer productivity through developer satisfaction surveys, performance outcomes, developer activity tracking, communication and collaboration metrics, and workflow efficiency—providing engineering leaders with actionable insights across the entire development process.

SPACE metrics provide a holistic view of developer productivity by measuring software development teams across five interconnected dimensions: Satisfaction and Well-being, Performance, Activity, Communication and Collaboration, and Efficiency and Flow. This comprehensive SPACE framework moves beyond traditional metrics to capture what actually drives sustainable engineering excellence. In addition to tracking metrics at the individual, team, and organizational levels, SPACE metrics can also be measured at the engineering systems level, providing a more comprehensive evaluation of developer efficiency and productivity.

This guide covers everything from foundational SPACE framework concepts to advanced implementation strategies for engineering teams ranging from 10 to 500+ developers. Whether you’re an engineering leader seeking to improve developer productivity, a VP of Engineering building a data-driven culture, or a development manager looking to optimize team performance, you’ll find actionable insights that go far beyond counting lines of code or commit frequency. The SPACE framework offers a research-backed approach that acknowledges the complete picture of how software developers actually work and thrive.

High levels of developer satisfaction contribute to employee motivation and creativity, leading to better overall productivity. Unhappy developers tend to become less productive before they leave their jobs.

Key outcomes you’ll gain from this guide:

Understanding and implementing SPACE metrics is essential for building high-performing, resilient software teams in today's fast-paced development environments.

The SPACE framework is a research-backed method for measuring software engineering team effectiveness across five key dimensions: Satisfaction and well-being, Performance, Activity, Communication and collaboration, and Efficiency and flow. These dimensions are designed to help teams understand the factors influencing their productivity and choose better strategies to improve it. SPACE metrics encourage a balanced approach to measuring productivity, considering both technical output and human factors.

The SPACE framework is a comprehensive, research-backed approach to measuring developer productivity. It was developed by researchers at GitHub, Microsoft, and the University of Victoria to address the shortcomings of traditional productivity metrics. The framework evaluates software development teams across five key dimensions:

Traditional productivity metrics like lines of code, commit count, and hours logged create fundamental problems for software development teams. They’re easily gamed, fail to capture code quality, and often reward behaviors that harm long-term team productivity. For a better understanding of measuring developer productivity effectively, it is helpful to consider both quantitative and qualitative factors.

Velocity-only measurements prove particularly problematic. Teams that optimize solely for story points frequently sacrifice high-quality code, skip knowledge sharing, and accumulate technical debt that eventually slows the entire development process.

The SPACE framework addresses these limitations by incorporating both quantitative system data and qualitative insights gained from developer satisfaction surveys. This dual approach captures both what’s happening and why it matters, providing a more complete picture of team health and productivity.

For modern software development teams using AI coding tools, distributed workflows, and complex collaboration tools, SPACE metrics have become essential. They provide the relevant metrics needed to understand how development tools, team meetings, and work-life balance interact to influence developer productivity.

The SPACE framework operates on three foundational principles that distinguish it from traditional metrics approaches.

First, balanced measurement across individual, team, and organizational levels ensures that improving one area doesn’t inadvertently harm another. A developer achieving high output through unsustainable hours will show warning signs in satisfaction metrics before burning out.

Second, the framework mandates combining quantitative data collection (deployment frequency, cycle time, pull requests merged) with qualitative insights (developer satisfaction surveys, psychological safety assessments).

Third, the framework focuses on business outcomes and value delivery rather than just activity metrics. High commit frequency means nothing if those commits don’t contribute to customer satisfaction or business objectives.

The SPACE framework explicitly addresses the limitations of traditional metrics by incorporating developer well-being, communication and collaboration quality, and flow metrics alongside performance metrics. This complete picture reveals whether productivity gains are sustainable or whether teams are heading toward burnout.

The transition from traditional metrics to SPACE framework measurement represents a shift from asking “how much did we produce?” to asking “how effectively and sustainably are we delivering value?”

Each dimension of the SPACE framework reveals different aspects of team performance and developer experience. Successful engineering teams measure across at least three dimensions simultaneously; using fewer creates blind spots that undermine the holistic view the framework provides.

Developer satisfaction directly correlates with sustainable productivity. This dimension captures employee satisfaction through multiple measurement approaches: quarterly developer experience surveys, work-life balance assessments, psychological safety ratings, and burnout risk indicators.

Specific measurement examples include eNPS (employee Net Promoter Score), retention rates, job satisfaction ratings, and developer happiness indices. These metrics reveal whether your development teams can maintain their current pace or are heading toward unsustainable stress levels.
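As a rough illustration of the eNPS figure mentioned above, here is a minimal Python sketch. It assumes the conventional Net Promoter scoring on a 0-10 scale (9-10 counts as a promoter, 0-6 as a detractor); the `enps` function name and the sample responses are hypothetical and not taken from any particular survey tool.

```python
def enps(scores: list[int]) -> float:
    """Employee Net Promoter Score on a 0-10 scale.

    Conventional NPS scoring is assumed here:
    promoters score 9-10, detractors score 0-6, passives (7-8) are ignored.
    The result ranges from -100 to +100.
    """
    if not scores:
        raise ValueError("need at least one survey response")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)


# Hypothetical anonymous responses from one team's quarterly survey
responses = [10, 9, 8, 7, 6, 9, 3, 10, 8, 7]
print(f"eNPS: {enps(responses):+.0f}")
```

Because the score only makes sense as a team-level aggregate, the function deliberately takes a flat list of anonymous scores rather than per-developer records.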

Research shows a clear correlation: when developer satisfaction increases from 6/10 to 8/10, productivity typically improves by 20%. This happens because satisfied software developers engage more deeply with problems, collaborate more effectively, and maintain the focus needed to produce high-quality code.

Performance metrics focus on business outcomes rather than just activity volume. Key metrics include feature delivery success rate, customer satisfaction scores, defect escape rate, and system reliability indicators.

Technical performance indicators within this dimension include change failure rate, mean time to recovery (MTTR), and code quality scores from static analysis. These performance metrics connect directly to software delivery performance and business objectives.
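To make two of these indicators concrete, the sketch below computes change failure rate and mean time to recovery from a list of deployment records. The `Deployment` record and its field names are hypothetical, and measuring recovery from the deployment timestamp (rather than from when the incident was detected) is a simplifying assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape; real data would come from your CI/CD or incident tool.
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None


def change_failure_rate(deploys: list[Deployment]) -> float:
    """Fraction of deployments that caused a failure in production."""
    if not deploys:
        return 0.0
    return sum(d.failed for d in deploys) / len(deploys)


def mean_time_to_recovery(deploys: list[Deployment]) -> Optional[timedelta]:
    """Average time from a failed deployment to service restoration.

    Assumption: recovery is measured from the deployment time, not from detection.
    """
    durations = [
        d.restored_at - d.deployed_at
        for d in deploys
        if d.failed and d.restored_at is not None
    ]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)


deploys = [
    Deployment(datetime(2024, 5, 1, 10, 0)),
    Deployment(datetime(2024, 5, 2, 10, 0), failed=True,
               restored_at=datetime(2024, 5, 2, 11, 0)),
    Deployment(datetime(2024, 5, 3, 10, 0)),
    Deployment(datetime(2024, 5, 4, 10, 0), failed=True,
               restored_at=datetime(2024, 5, 4, 12, 0)),
]
print(change_failure_rate(deploys), mean_time_to_recovery(deploys))
```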

Importantly, this dimension distinguishes between individual contributor performance and team-level outcomes. The framework emphasizes team performance because software development is inherently collaborative—individual heroics often mask systemic problems.

Activity metrics track the volume and patterns of development work: pull requests opened and merged, code review participation, release cadence, and documentation contributions.

This dimension also captures collaboration activities like knowledge sharing sessions, cross-team coordination, and onboarding effectiveness. These activities often go unmeasured but significantly influence developer productivity across the organization.

Critical warning: Activity metrics should never be used for individual performance evaluation. Using pull request counts to rank software developers creates perverse incentives that harm code quality and team collaboration. Activity metrics reveal team-level patterns—they identify bottlenecks and workflow issues, not individual performance problems.

Communication and collaboration metrics measure how effectively information flows through development teams. Key indicators include code review response times, team meetings efficiency ratings, and cross-functional project success rates.
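Code review response time is one of the easier indicators to derive from existing pull request data. The following sketch computes a team's median time to first review; the tuple layout and the `median_first_review_hours` name are assumptions for illustration, and a median is used instead of a mean so that a single stalled pull request does not dominate the result.

```python
from datetime import datetime
from statistics import median
from typing import Optional

# Each entry: (PR opened at, first review at or None if not yet reviewed)
PullRequest = tuple[datetime, Optional[datetime]]


def median_first_review_hours(prs: list[PullRequest]) -> Optional[float]:
    """Median hours between opening a PR and its first review, for reviewed PRs only."""
    waits = [
        (first_review - opened).total_seconds() / 3600
        for opened, first_review in prs
        if first_review is not None
    ]
    return median(waits) if waits else None


prs = [
    (datetime(2024, 5, 6, 9, 0), datetime(2024, 5, 6, 11, 0)),   # waited 2 hours
    (datetime(2024, 5, 6, 13, 0), datetime(2024, 5, 6, 17, 0)),  # waited 4 hours
    (datetime(2024, 5, 7, 9, 0), datetime(2024, 5, 8, 15, 0)),   # waited 30 hours
    (datetime(2024, 5, 8, 10, 0), None),                         # still waiting
]
print(median_first_review_hours(prs))
```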

Network analysis metrics within this dimension identify knowledge silos, measure team connectivity, and assess onboarding effectiveness. These collaboration metrics reveal whether new tools or process changes are actually improving how software development teams work together.

The focus here is quality of interactions rather than quantity. Excessive team meetings that interrupt flow indicate a problem, even if “collaboration” appears high by simple counting measures.

Efficiency and flow metrics capture how smoothly work moves from idea to production. Core measurements include cycle time from commit to deployment, deployment frequency, and software delivery pipeline efficiency.
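A minimal sketch of the two core measurements follows, assuming you can export commit and deployment timestamps for each change. The function names and the 14-day window are hypothetical choices, not a prescribed definition.

```python
from datetime import datetime
from statistics import median
from typing import Optional

# Each entry: (first commit time, production deployment time) for one change
Change = tuple[datetime, datetime]


def median_cycle_time_hours(changes: list[Change]) -> Optional[float]:
    """Median hours from commit to deployment across the given changes."""
    hours = [(deployed - committed).total_seconds() / 3600
             for committed, deployed in changes]
    return median(hours) if hours else None


def deployments_per_week(deploy_count: int, window_days: int) -> float:
    """Average number of deployments per 7-day period within the window."""
    if window_days <= 0:
        raise ValueError("window_days must be positive")
    return deploy_count / (window_days / 7)


changes = [
    (datetime(2024, 5, 6, 9, 0), datetime(2024, 5, 6, 15, 0)),   # 6 hours
    (datetime(2024, 5, 7, 10, 0), datetime(2024, 5, 8, 10, 0)),  # 24 hours
    (datetime(2024, 5, 8, 9, 0), datetime(2024, 5, 8, 21, 0)),   # 12 hours
]
print(median_cycle_time_hours(changes))
print(deployments_per_week(len(changes), window_days=14))
```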

Developer experience factors in this dimension include build success rates, test execution time, and environment setup speed. Long build times or flaky tests create constant interruptions that prevent developers from staying in flow and completing work.

Flow state indicators—focus time blocks, interruption patterns, context-switching frequency—reveal whether software developers have the minimal interruptions needed for deep work. High activity with low flow efficiency signals that productivity tools and processes need attention.
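One way to approximate focus time blocks is to look at the gaps between meetings in a working day. The sketch below does that under stated assumptions: the two-hour minimum block length is an arbitrary threshold chosen for illustration, meetings are assumed to fall within the working day, and `focus_blocks` is a hypothetical helper rather than part of any calendar API.

```python
from datetime import datetime, timedelta

Interval = tuple[datetime, datetime]


def focus_blocks(
    day_start: datetime,
    day_end: datetime,
    meetings: list[Interval],
    min_block: timedelta = timedelta(hours=2),  # assumed threshold for "deep work"
) -> list[Interval]:
    """Return meeting-free gaps of at least `min_block` within the working day."""
    blocks = []
    cursor = day_start
    for start, end in sorted(meetings):
        if start - cursor >= min_block:
            blocks.append((cursor, start))
        # max() handles overlapping or back-to-back meetings
        cursor = max(cursor, end)
    if day_end - cursor >= min_block:
        blocks.append((cursor, day_end))
    return blocks


day = datetime(2024, 5, 6)
meetings = [
    (day.replace(hour=10), day.replace(hour=10, minute=30)),
    (day.replace(hour=13), day.replace(hour=14)),
    (day.replace(hour=15, minute=30), day.replace(hour=16)),
]
blocks = focus_blocks(day.replace(hour=9), day.replace(hour=17), meetings)
print(blocks)
```

In this sample day only the 10:30 to 13:00 gap qualifies, which illustrates how three short meetings can leave a single usable focus block.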

Code quality and code reviews are foundational to high-performing software development teams and are central to measuring and improving developer productivity within the SPACE framework. High code quality not only ensures reliable, maintainable software but also directly influences developer satisfaction, team performance, and the overall efficiency of the development process.

The SPACE framework recognizes that code quality is not just a technical concern. It is a key driver of developer well-being, collaboration, and business outcomes. By tracking key metrics related to code reviews and code quality, engineering leaders gain actionable insights into how their teams are working, where bottlenecks exist, and how to foster a culture of continuous improvement.

Implementing SPACE metrics typically requires 3-6 months for full rollout, with significant investment in leadership alignment and cultural change. Engineering leaders should expect to dedicate 15-20% of a senior team member’s time during the initial implementation phases.

The process requires more than just new tools. It also requires educating team members about why tracking metrics matters and how the data will be used to support rather than evaluate them.

Selecting the right tools determines whether tracking SPACE metrics becomes sustainable or burdensome.

For most engineering teams, platforms that consolidate software development lifecycle data provide the fastest path to comprehensive SPACE framework measurement. These platforms can analyze trends across multiple dimensions while connecting to your existing project management and collaboration tools.

Survey-based data collection often fails when teams feel over-surveyed or see no value from participation.

Start with passive metrics from existing tools before introducing any surveys; this builds trust that the data actually drives improvements. Keep initial surveys to 3-5 questions, with a clear value proposition explaining how the insights gained will help the team.

Share survey insights back to teams within two weeks of collection. When developers see their feedback leading to concrete changes, response rates increase significantly. Rotate survey focus areas quarterly to maintain engagement and prevent question fatigue.

The most common failure mode for SPACE metrics occurs when managers use team-level data to evaluate individual software developers, which destroys the psychological safety the framework requires.

Establish clear policies prohibiting individual evaluation using SPACE metrics from day one. Educate team members and leadership on why a team-level focus is essential for honest self-reporting. Create aggregated reporting that prevents individual developer identification, and implement metric access controls limiting who can see individual-level system data.
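Aggregated reporting can be enforced in code as well as in policy. The sketch below averages a metric per team and suppresses any team with too few contributors to stay anonymous. The minimum group size of five is an assumed threshold, and `team_report` and the row layout are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Iterable, Optional

MIN_GROUP_SIZE = 5  # assumed anonymity threshold; tune to your own policy


def team_report(
    rows: Iterable[tuple[str, str, float]],
    min_group_size: int = MIN_GROUP_SIZE,
) -> dict[str, Optional[float]]:
    """Average a metric per team without exposing individuals.

    rows: (team, developer_id, value) tuples.
    Teams with fewer than `min_group_size` distinct developers are reported
    as None, since small groups make individuals identifiable.
    """
    by_team: dict[str, dict[str, list[float]]] = defaultdict(dict)
    for team, developer_id, value in rows:
        by_team[team].setdefault(developer_id, []).append(value)

    report: dict[str, Optional[float]] = {}
    for team, developers in by_team.items():
        if len(developers) < min_group_size:
            report[team] = None  # suppressed
        else:
            report[team] = mean(v for values in developers.values() for v in values)
    return report


rows = [
    ("payments", "d1", 6), ("payments", "d2", 7), ("payments", "d3", 8),
    ("payments", "d4", 7), ("payments", "d5", 7),
    ("search", "d6", 4), ("search", "d7", 9),
]
report = team_report(rows)
print(report)
```

Note that developer identifiers are used only to count distinct contributors; they never appear in the output.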

When different dimensions tell different stories—high activity but low satisfaction, strong performance but poor flow metrics—teams often become confused about what to prioritize.

Treat metric conflicts as valuable insights rather than measurement failures. High activity combined with low developer satisfaction typically signals potential burnout. Strong performance metrics alongside poor efficiency and flow often indicate unsustainable heroics masking process problems.

Use correlation analysis to identify bottlenecks and root causes. Focus on trend analysis over point-in-time snapshots, and implement regular team retrospectives to discuss metric insights and improvement actions.

Some teams measure diligently for months without seeing meaningful improvements in developer productivity.

First, verify you’re measuring leading indicators (process metrics) rather than only lagging indicators (outcome metrics). Leading indicators enable faster course correction.

Ensure improvement initiatives target root causes identified through metric analysis rather than symptoms. Account for external factors—organizational changes, technology migrations, market pressures—that may mask improvement. Celebrate incremental wins and maintain a continuous improvement perspective; sustainable change takes quarters, not weeks.

SPACE metrics provide engineering leaders with comprehensive insights into software developer performance that traditional output metrics simply cannot capture. By measuring across satisfaction and well-being, performance, activity, communication and collaboration, and efficiency and flow, you gain the complete picture needed to improve developer productivity sustainably.

The SPACE framework offers something traditional metrics never could: a balanced view that treats developers as whole people whose job satisfaction and work-life balance directly impact their ability to produce high-quality code. This holistic approach aligns with how software development actually works: as a collaborative, creative endeavor that suffers when reduced to simple output counting.

To begin implementing SPACE metrics in your organization:

Related topics worth exploring: DORA metrics integration with the SPACE framework (DORA metrics essentially function as examples of the Performance and Efficiency dimensions), AI-powered code review impact measurement, and developer experience optimization strategies.


Developer experience (DX or DevEx) refers to the complete set of interactions developers have with tools, processes, workflows, and systems throughout the software development lifecycle, and to how developers feel about those tools and platforms as they build, test, and deliver software. When engineering leaders invest in good DX, they directly impact code quality, deployment frequency, and team retention, which makes it a critical factor in software delivery success. Developer experience matters because it shapes software development efficiency, drives innovation, and contributes to business success through better productivity, faster time to market, and competitive advantage.

This guide covers measurement frameworks, improvement strategies, and practical implementation approaches for engineering teams seeking to optimize how developers work. The target audience includes engineering leaders, VPs, directors, and platform teams responsible for developer productivity initiatives and development process optimization.

DX encompasses every touchpoint in a developer’s journey: onboarding, development environment setup, daily workflows, collaboration, code review cycles, and deployment pipelines. Each of these affects developer productivity and satisfaction. Organizations with good developer experience see faster lead time for changes, higher-quality code, and developers who feel empowered rather than frustrated.

By the end of this guide, you will gain:

For example, streamlining the onboarding process by automating environment setup can reduce new developer time-to-productivity from weeks to just a few days, significantly improving overall DX.

Understanding and improving developer experience is essential for engineering leaders who want to drive productivity, retain top talent, and deliver high-quality software at speed.

Developer experience defines how effectively developers can focus on writing high-quality code rather than fighting tools and manual processes. It encompasses the work environment, toolchain quality, documentation access, and collaboration workflows that either accelerate or impede software development.

The relevance to engineering velocity is direct: when development teams encounter friction, whether from slow builds, unclear documentation, or fragmented systems, productivity drops and frustration rises. Good DX helps organizations ship new features faster while maintaining code quality and team satisfaction.

Development environment setup and toolchain integration form the foundation of the developer’s journey. This includes IDE configuration, package managers, local testing capabilities, and access to shared resources. When these elements work seamlessly, developers can begin contributing value within days rather than weeks during the onboarding process.

Code review processes and collaboration workflows determine how efficiently knowledge transfers across teams. Effective code review systems provide developers with timely feedback, maintain quality standards, and avoid becoming bottlenecks that slow deployment frequency.

Deployment pipelines and release management represent the final critical component. Self-service deployment capabilities, automated testing, and reliable CI/CD systems directly impact how quickly code moves from development to production. These elements connect to broader engineering productivity goals by reducing the average time between commit and deployment.

With these fundamentals in mind, let's explore how to measure and assess developer experience using proven frameworks.