Engineering leaders are moving beyond dashboard tools to comprehensive Software Engineering Intelligence Platforms that unify delivery metrics, code-level insights, AI-origin code analysis, DevEx signals, and predictive operations in one analytical system. This article compares leading platforms, highlights gaps in the traditional analytics landscape, and introduces the capabilities required for 2026, where AI coding, agentic workflows, and complex delivery dynamics reshape how engineering organizations operate.

Software delivery has always been shaped by three forces: the speed of execution, the quality of the output, and the well-being of the people doing the work. In the AI era, each of those forces behaves differently. Teams ship faster but introduce more subtle defects. Code volume grows while review bandwidth stays fixed. Developers experience reduced cognitive load in some areas and increased load in others. Leaders face unprecedented complexity because delivery patterns no longer follow the linear relationships that pre-AI metrics were built to understand.

This is why Software Engineering Intelligence Platforms have become foundational. Modern engineering organizations can no longer rely on surface-level dashboards or simple rollups of Git and Jira events. They need systems that understand flow, quality, cognition, and AI-origin work at once. These systems must integrate deeply enough to see bottlenecks before they form, attribute delays to specific root causes, and expose how AI tools reshape engineering behavior. They must be able to bridge the code layer with the organizational layer, something that many legacy analytics tools were never designed for.

The platforms covered in this article represent different philosophies of engineering intelligence. Some focus on pipeline flow, some on business alignment, some on human factors, and some on code-level insight. Understanding their strengths and limitations helps leaders shape a strategy that fits the new realities of software development.

The category has evolved significantly. A platform worthy of this title must unify a broad set of signals into a coherent view that answers not just what happened but why it happened and what will likely happen next. Several foundational expectations now define the space.

Engineering organizations rely on a fragmented toolchain. A modern platform must unify Git, Jira, CI/CD, testing, code review, communication patterns, and developer experience telemetry. Without a unified model, insights remain shallow and reactive.
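A common first step toward a unified model is joining Git activity to Jira work items. This is a minimal sketch, not any platform's actual pipeline. It extracts Jira-style issue keys (such as `PAY-123`) from PR titles and branch names. The `PullRequest` and `WorkItem` types and the key pattern are assumptions made for illustration.

```python
import re
from dataclasses import dataclass, field

# Jira-style issue keys: an uppercase project prefix, a hyphen, and a number
# (for example PAY-123). This pattern is an assumption; lowercase keys are not matched.
ISSUE_KEY = re.compile(r"\b([A-Z][A-Z0-9]+-\d+)\b")


@dataclass
class PullRequest:
    number: int
    title: str
    branch: str


@dataclass
class WorkItem:
    issue_key: str
    pull_requests: list = field(default_factory=list)


def link_prs_to_issues(prs):
    """Group PRs under every Jira issue key found in their title or branch name.

    Returns (work items keyed by issue key, PRs that reference no issue).
    """
    items = {}
    unlinked = []
    for pr in prs:
        keys = set(ISSUE_KEY.findall(pr.title)) | set(ISSUE_KEY.findall(pr.branch))
        if not keys:
            unlinked.append(pr)  # untracked work is a signal in its own right
            continue
        for key in sorted(keys):
            items.setdefault(key, WorkItem(key)).pull_requests.append(pr.number)
    return items, unlinked
```

PRs that match no key are returned separately rather than dropped. A high share of unlinked work is often worth surfacing on its own.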

LLMs are not an enhancement; they are required. Modern platforms must use AI to classify work, interpret diffs, identify risk, summarize activity, reduce cognitive load, and surface anomalies that traditional heuristics miss.

Teams need models that can forecast delivery friction, capacity constraints, high-risk code, and sprint confidence. Forecasting is no longer a bonus feature but a baseline expectation.
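Vendors use different forecasting methods. One widely used generic technique, shown here as a hedged illustration, is Monte Carlo simulation. It resamples historical daily throughput to estimate the probability of finishing the remaining sprint scope. The inputs (`daily_throughput`, `remaining_items`, `days_left`) are hypothetical. This does not describe how any platform in this article forecasts.

```python
import random


def sprint_confidence(daily_throughput, remaining_items, days_left, trials=10_000, seed=42):
    """Estimate P(finishing remaining_items within days_left) by resampling past days.

    daily_throughput: items completed on each past working day (the historical sample).
    """
    if remaining_items <= 0:
        return 1.0
    if not daily_throughput or days_left <= 0:
        return 0.0
    rng = random.Random(seed)  # fixed seed so repeated runs give the same estimate
    successes = 0
    for _ in range(trials):
        # Simulate the rest of the sprint by drawing one historical day per remaining day.
        simulated = sum(rng.choice(daily_throughput) for _ in range(days_left))
        if simulated >= remaining_items:
            successes += 1
    return successes / trials
```

For example, `sprint_confidence([0, 1, 3, 2], 8, 4)` returns the fraction of simulated sprints that close 8 items in 4 days. Resampling real history avoids assuming any particular throughput distribution.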

Engineering performance cannot be separated from cognition. Context switching, review load, focus time, meeting pressure, and sentiment have measurable effects on throughput. Tools that ignore these variables produce misleading conclusions.
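Focus time is one of these signals that can be computed directly from calendar data. The minimal sketch below makes two assumptions. First, meetings arrive as `(start, end)` datetime pairs for a single workday. Second, only uninterrupted gaps of at least two hours count as focus time. Both the threshold and the data shape are illustrative choices.

```python
from datetime import datetime, timedelta


def focus_time(day_start: datetime, day_end: datetime, meetings,
               min_block: timedelta = timedelta(hours=2)) -> timedelta:
    """Total time in uninterrupted gaps of at least `min_block` between meetings.

    `meetings` is a list of (start, end) datetime pairs; overlapping meetings are allowed.
    """
    total = timedelta(0)
    cursor = day_start  # end of the last busy period seen so far
    for start, end in sorted(meetings):
        if end <= day_start or start >= day_end:
            continue  # meeting falls outside the workday
        start, end = max(start, day_start), min(end, day_end)
        if end <= cursor:
            continue  # fully inside time already marked busy
        gap = start - cursor
        if gap >= min_block:
            total += gap
        cursor = end
    # The stretch after the last meeting counts too if it is long enough.
    if day_end - cursor >= min_block:
        total += day_end - cursor
    return total
```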

The value of intelligence lies in its ability to influence action. Software Engineering Intelligence Platforms must generate summaries, propose improvements, highlight risky work, assist in prioritization, and reduce the administrative weight on engineering managers.

As AI tools generate an increasing share of code, platforms must distinguish human-origin from AI-origin work, measure rework, assess quality drift, and give leadership visibility into new risk surfaces.
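Rework can be approximated as the share of newly written lines that are modified or deleted again within a short window. The sketch below assumes each line already carries an origin label (`"ai"` or `"human"`). Obtaining that label, whether from editor telemetry, commit trailers, or a classifier, is outside its scope. The 21-day window is also an arbitrary assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class LineRecord:
    origin: str                       # "ai" or "human" (hypothetical labeling)
    written_at: datetime
    rewritten_at: Optional[datetime]  # None if the line has not changed since


def rework_rate(records, origin, window=timedelta(days=21)):
    """Fraction of lines from `origin` that were rewritten within `window` of being written."""
    lines = [r for r in records if r.origin == origin]
    if not lines:
        return 0.0
    reworked = sum(
        1 for r in lines
        if r.rewritten_at is not None and r.rewritten_at - r.written_at <= window
    )
    return reworked / len(lines)
```

Comparing `rework_rate(records, "ai")` with `rework_rate(records, "human")` over the same period gives a first, rough view of quality drift by origin.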

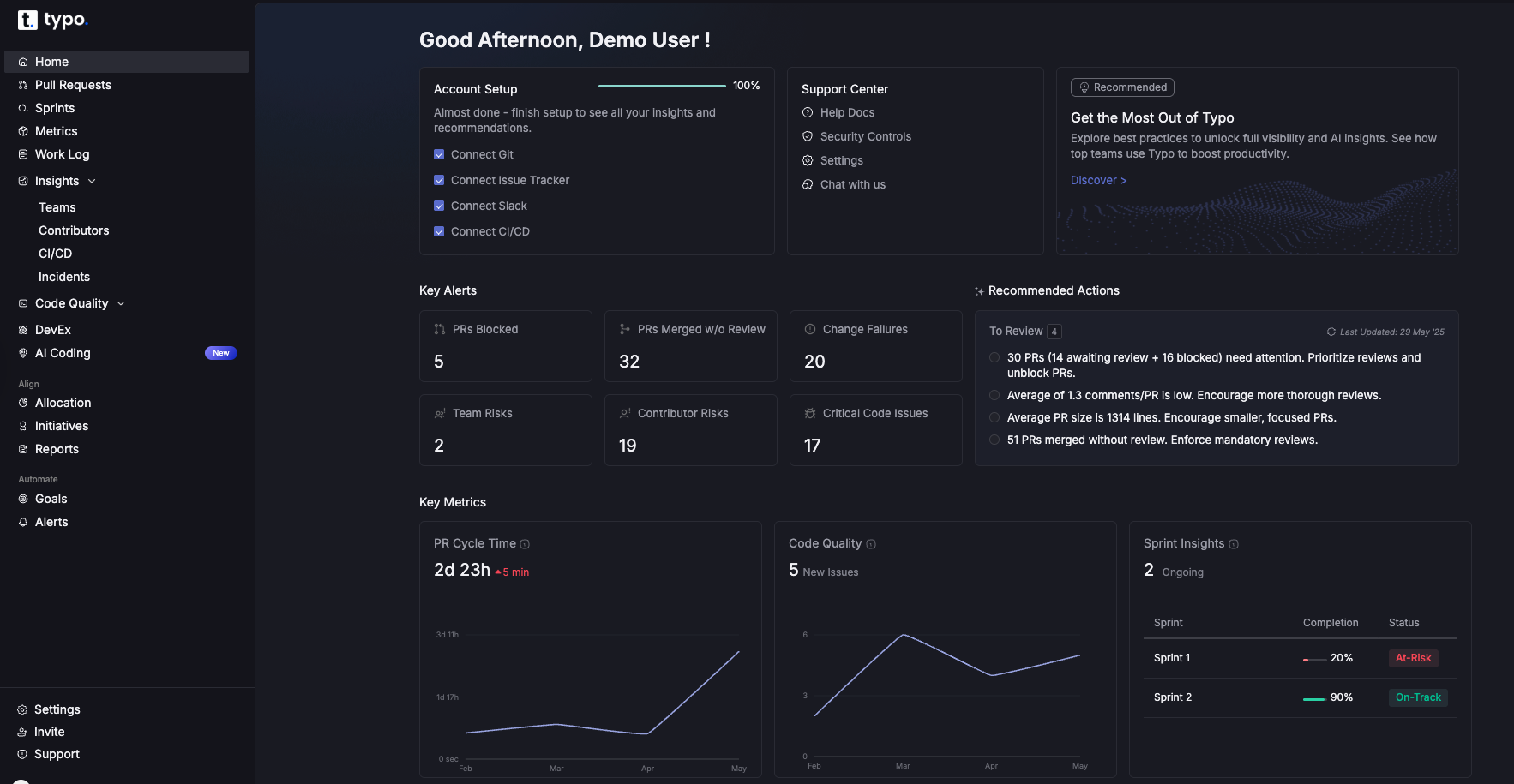

Typo represents a more bottom-up philosophy of engineering intelligence. Instead of starting with work categories and top-level delivery rollups, it begins at the code layer, where quality, risk, and velocity are actually shaped. This is increasingly necessary in an era where AI coding assistants produce large volumes of code that appear clean but carry hidden complexity.

Typo unifies DORA metrics, code review analytics, workflow data, and AI-origin signals into a predictive layer. It integrates directly with GitHub, Jira, and CI/CD systems, delivering actionable insights within hours of setup. Its semantic diff engine and LLM-powered reviewer provide contextual understanding of patterns that traditional tools cannot detect.

Typo measures how AI coding assistants influence velocity and quality, identifying rework trends, risk hotspots, and subtle stylistic inconsistencies introduced by AI-origin code. It exposes reviewer load, review noise, cognitive burden, and early indicators of technical debt. Beyond analytics, Typo automates operational work through agentic summaries of PRs, sprints, and 1:1 inputs.

In a landscape where velocity often increases before quality declines, Typo helps leaders see both sides of the equation, enabling balanced decision-making grounded in the realities of modern code production.

LinearB focuses heavily on development pipeline flow. Its strength lies in connecting Git, Jira, and CI/CD data to understand where work slows. It provides forecasting models for sprint delivery and uses WorkerB automation to nudge teams toward healthier behaviors, such as timely reviews and branch hygiene.
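Flow-oriented tools typically split cycle time into phases so the slowest one becomes visible. The sketch below uses four phases (coding, pickup, review, deploy) derived from hypothetical PR timestamps. The phase boundaries and event names are assumptions in the spirit of common cycle-time breakdowns. LinearB's own definitions may differ.

```python
from datetime import datetime, timedelta
from statistics import median

# (phase name, event that starts it, event that ends it); event names are hypothetical
# and would map onto Git, code review, and CI/CD events in practice.
PHASES = [
    ("coding", "first_commit", "opened"),
    ("pickup", "opened", "first_review"),
    ("review", "first_review", "merged"),
    ("deploy", "merged", "deployed"),
]


def bottleneck(prs):
    """Return the phase with the largest median duration, plus all phase medians.

    Each PR is a dict mapping the event names above to datetimes.
    """
    if not prs:
        raise ValueError("need at least one PR")
    medians = {
        name: median(pr[end] - pr[start] for pr in prs)
        for name, start, end in PHASES
    }
    return max(medians, key=medians.get), medians


if __name__ == "__main__":
    t0 = datetime(2025, 1, 6, 9)
    example = {
        "first_commit": t0,
        "opened": t0 + timedelta(hours=3),
        "first_review": t0 + timedelta(hours=27),
        "merged": t0 + timedelta(hours=29),
        "deployed": t0 + timedelta(hours=30),
    }
    print(bottleneck([example]))
```

Medians are used rather than means so that a single stale PR does not dominate the result.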

LinearB helps teams reduce cycle time and improve collaboration by identifying bottlenecks early. It excels at predicting sprint completion and maintaining execution flow. However, it offers limited depth at the code level. For teams dealing with AI-origin work, semantic drift, or subtle quality issues, LinearB’s surface-level metrics offer only partial visibility.

Its predictive models are valuable, but without granular understanding of code semantics or review complexity, they cannot fully explain why delays occur. Teams with increasing AI adoption often require additional layers of intelligence to understand rework and quality dynamics beyond what pipeline metrics alone can capture.

Jellyfish offers a top-down approach to engineering intelligence. It integrates data sources across the development lifecycle and aligns engineering work with business objectives. Its strength is organizational clarity: leaders can map resource allocation, capacity planning, team structure, and strategic initiatives in one place.

For executive reporting and budgeting, Jellyfish is often the preferred platform. Its privacy-focused individual performance analysis supports sensitive leadership conversations without becoming punitive. However, Jellyfish has limited depth at the code level. It does not analyze diffs, AI-origin signals, or semantic risk patterns.

In the AI era, business alignment alone cannot explain delivery friction. Leaders need bottom-up visibility into complexity, review behavior, and code quality to understand how business outcomes are influenced. Jellyfish excels at showing what work is being done but not the deeper why behind technical risks or delivery volatility.

Swarmia emphasizes long-term developer health and sustainable productivity. Its analytics connect output metrics with human factors such as focus time, meeting load, context switching, and burnout indicators. It prioritizes developer autonomy and lets individuals control their data visibility.

As engineering becomes more complex and AI-driven, Swarmia’s focus on cognitive load becomes increasingly important. Code volume rises, review frequency increases, and context switching accelerates when teams adopt AI tools. Understanding these pressures is crucial for maintaining stable throughput.

Swarmia is well suited for teams that want to build a healthy engineering culture. However, it lacks deep analysis of code semantics and AI-origin work. This limits its ability to explain how AI-driven rework or complexity affects well-being and performance over time.

Oobeya specializes in aligning engineering activity with business objectives. It provides OKR-linked insights, release predictability assessments, technical debt tracking, and metrics that reflect customer impact and reliability.

Oobeya helps leaders translate engineering work into business narratives that resonate with executives. It highlights maintainability concerns, risk profiles, and strategic impact. Its dashboards are designed for clarity and communication rather than deep technical diagnosis.

The challenge arises when strategic metrics diverge from on-the-ground delivery behavior. For organizations using AI coding tools, maintainability may decline even as output increases. Without code-level insights, Oobeya cannot fully reveal the sources of that divergence.

DORA and SPACE remain foundational frameworks, but they were designed for human-centric development patterns. AI-origin code changes how teams work, what bottlenecks emerge, and how quality shifts over time. New extensions are required.

AI-adjusted metrics help leaders understand system behavior more accurately.

AI also affects satisfaction, cognition, and productivity in nuanced ways.

These extensions help leaders build a comprehensive picture of engineering health that aligns with modern realities.
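One simple form of AI-adjusted metric segments a standard DORA measure by whether a change was AI-assisted. The sketch computes change failure rate and median lead time for changes for each segment. The `Deployment` record and its `ai_assisted` flag are hypothetical. Deciding what counts as "AI-assisted" is a policy choice this code does not make.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    committed_at: datetime  # first commit of the change
    deployed_at: datetime   # when the change reached production
    caused_failure: bool    # triggered an incident, rollback, or hotfix
    ai_assisted: bool       # hypothetical flag: change was substantially AI-generated


def lead_time(d: Deployment) -> timedelta:
    """Lead time for changes: first commit to production."""
    return d.deployed_at - d.committed_at


def dora_by_origin(deployments):
    """Change failure rate and median lead time, reported separately by AI assistance."""
    report = {}
    for flag, label in ((True, "ai_assisted"), (False, "human")):
        group = [d for d in deployments if d.ai_assisted == flag]
        if not group:
            continue  # omit segments with no data rather than reporting zeros
        report[label] = {
            "deployments": len(group),
            "change_failure_rate": sum(d.caused_failure for d in group) / len(group),
            "median_lead_time": median(lead_time(d) for d in group),
        }
    return report
```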

AI introduces benefits and risks that traditional engineering metrics cannot detect. Teams must observe:

- AI-generated code may appear clean but hide subtle structural complexity.
- LLMs can generate syntactically correct but logically flawed code.
- Different AI models produce different conventions, increasing stylistic entropy across the codebase.
- AI increases code output, which increases review load, often without corresponding quality gains.
- Quality degradation may not appear immediately but compounds over time.

A Software Engineering Intelligence Platform must detect these risks through semantic analysis, pattern recognition, and diff-level intelligence.
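Production semantic analysis is far richer than this. Still, a small example shows how a diff-level check can catch code that looks clean but is structurally complex. The idea is to compare a rough cyclomatic-complexity count of Python source before and after a change. The sketch uses Python's standard `ast` module to count decision points. The node list is a simplification, not a full McCabe implementation, and any flagging threshold is left as an assumption.

```python
import ast

# Node types that add a decision point (a simplified take on cyclomatic complexity).
BRANCH_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp,
                ast.ExceptHandler, ast.comprehension)


def complexity(source: str) -> int:
    """Approximate cyclomatic complexity: 1 + number of decision points in the source."""
    score = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, BRANCH_NODES):
            score += 1  # each `for` clause in a comprehension also counts once
        elif isinstance(node, ast.BoolOp):
            score += len(node.values) - 1  # `a and b and c` adds two paths
    return score


def complexity_delta(before: str, after: str) -> int:
    """Positive values mean the change added branching that reviewers must reason about."""
    return complexity(after) - complexity(before)
```

A change can pass tests and look tidy while adding several branches. Surfacing the delta on each diff gives reviewers a concrete prompt to look closer.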

Across modern engineering teams, several scenarios appear frequently:

- Teams ship more code, but review queues grow, and defects increase.
- Developers feel good about velocity, but AI-origin rework accumulates under the surface.
- Review bottlenecks, not code issues, slow delivery.
- Velocity metrics alone cannot explain why outcomes fall short; cognitive load and complexity often provide the missing context.
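Review bottlenecks often arise because review load concentrates on a few people. The minimal sketch below assumes a list of `(pr_number, reviewer)` pairs exported from the Git host. It reports each reviewer's share of reviews and flags anyone above a chosen share. The 40% default is an arbitrary assumption.

```python
from collections import Counter


def reviewer_load(reviews, max_share=0.4):
    """Return each reviewer's share of reviews and the reviewers above max_share."""
    counts = Counter(reviewer for _, reviewer in reviews)
    total = sum(counts.values())
    if total == 0:
        return {}, []
    shares = {reviewer: n / total for reviewer, n in counts.items()}
    overloaded = sorted(r for r, share in shares.items() if share > max_share)
    return shares, overloaded
```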

These patterns demonstrate why intelligence platforms must integrate code, cognition, and flow.

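A mature platform requires a robust architecture for ingesting, modeling, and analyzing code, flow, and cognition signals together.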

The depth and reliability of this architecture differentiate simple dashboards from true Software Engineering Intelligence Platforms.

Metrics fail when they are used incorrectly. Common traps include:

- Engineering is a systems problem. Individual metrics produce fear, not performance.
- In the AI era, increased output often hides rework.
- AI must be measured, not assumed to add value.
- Code produced does not equal value delivered.
- Insights require human interpretation.

Effective engineering intelligence focuses on system-level improvement, not individual performance.

Introducing a Software Engineering Intelligence Platform is an organizational change. Successful implementations follow a clear approach:

- Communicate that metrics diagnose systems, not people.
- Ensure teams define cycle time, throughput, and rework consistently.
- Developers should understand how AI usage is measured and why.
- Retrospectives, sprint planning, and 1:1s become richer with contextual data.
- Agentic summaries, risk alerts, and reviewer insights accelerate alignment.
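Consistent definitions are easiest to enforce when they live in one shared, version-controlled place rather than in each team's dashboard settings. Below is a hypothetical sketch of such a definition file, written as Python data. The wording, units, and 21-day rework window are illustrative assumptions, not fields that any specific platform exposes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricDefinition:
    unit: str
    definition: str


# A single, reviewed source of truth that every team's reports read from.
# All wording below is illustrative; teams would agree on their own.
METRICS = {
    "cycle_time": MetricDefinition(
        "hours", "From the first commit on a change to its deployment to production."
    ),
    "throughput": MetricDefinition(
        "items per week", "Work items moved to Done within a calendar week."
    ),
    "rework": MetricDefinition(
        "percent of lines", "New lines modified or deleted again within 21 days."
    ),
}


def definition_of(metric: str) -> MetricDefinition:
    """Look up a metric, refusing to report anything without an agreed definition."""
    try:
        return METRICS[metric]
    except KeyError:
        raise KeyError(f"no shared definition for {metric!r}; agree on one first") from None
```

Failing loudly on undefined metrics keeps teams from reporting numbers that look comparable but are computed differently.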

Leaders who follow these steps see faster adoption and fewer cultural barriers.

A simple but effective framework for modern organizations is:

Flow + Quality + Cognitive Load + AI Behavior = Sustainable Throughput

- Flow represents system movement.
- Quality represents long-term stability.
- Cognitive load represents human capacity.
- AI behavior represents complexity and rework patterns.

If any dimension deteriorates, throughput declines.

If all four align, delivery becomes predictable.
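The framework is qualitative, and its "+" is conceptual rather than arithmetic. One hedged way to operationalize it is to score each dimension from 0 to 1, with higher meaning healthier, and combine the scores with a geometric mean. With a geometric mean, a collapse in any single dimension pulls the composite down sharply. The scoring scale, the geometric mean, and the 0.5 alert threshold are all assumptions.

```python
import math

DIMENSIONS = ("flow", "quality", "cognitive_load", "ai_behavior")


def throughput_health(scores, alert_below=0.5):
    """Combine per-dimension scores in [0, 1] into a composite plus a list of weak dimensions.

    Higher is healthier for every dimension; for cognitive_load, a high score
    means the load on developers is manageable.
    """
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    values = [scores[d] for d in DIMENSIONS]
    if any(not 0.0 <= v <= 1.0 for v in values):
        raise ValueError("scores must be between 0 and 1")
    # Geometric mean: if any one dimension approaches 0, the composite does too.
    composite = math.prod(values) ** (1 / len(values))
    weak = [d for d in DIMENSIONS if scores[d] < alert_below]
    return composite, weak
```

An arithmetic mean would let strong flow numbers mask a failing dimension. The geometric mean is chosen here to reflect the point that throughput declines if any one dimension deteriorates.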

Typo contributes to this category through a deep coupling of code-level understanding, AI-origin analysis, review intelligence, and developer experience signals. Its semantic diff engine and hybrid LLM+static analysis framework reveal patterns invisible to workflow-only tools. It identifies review noise, reviewer bottlenecks, risk hotspots, rework cycles, and AI-driven complexity. It pairs these insights with operational automation such as PR summaries, sprint retrospectives, and contextual leader insights.

Most platforms excel at one dimension: flow, business alignment, or well-being. Typo aims to unify the three, enabling leaders to understand not just what is happening but why and how it connects to code, cognition, and future risk.

When choosing a platform, leaders should look for:

- A wide integration surface is helpful, but depth of analysis determines reliability.
- Platforms must detect, classify, and interpret AI-driven work.
- Forecasts should be reliable enough to meaningfully influence planning, not serve as rough guesses.
- Developer experience is now a leading indicator of performance.
- Insights must lead to decisions, not passive dashboards.

A strong platform enables engineering leaders to operate with clarity rather than intuition.

Engineering organizations are undergoing a profound shift. Speed is rising, complexity is increasing, AI-origin code is reshaping workflows, and cognitive load has become a measurable constraint. Traditional engineering analytics cannot keep pace with these changes. Software Engineering Intelligence Platforms fill this gap by unifying code, flow, quality, cognition, and AI signals into a single model that helps leaders understand and improve their systems.

The platforms in this article (Typo, LinearB, Jellyfish, Swarmia, and Oobeya) each offer valuable perspectives. Together, they show where the industry has been and where it is headed. The next generation of engineering intelligence will be defined by platforms that integrate deeply, understand code semantically, quantify AI behavior, protect developer well-being, and guide leaders through increasingly complex technical landscapes.

The engineering leaders who succeed in 2026 will be those who invest early in intelligence systems that reveal the truth of how their teams work and enable decisions grounded in clarity rather than guesswork.

A Software Engineering Intelligence Platform is a unified analytical system that integrates Git, Jira, CI/CD, code semantics, AI-origin signals, and DevEx telemetry to help engineering leaders understand delivery, quality, risk, cognition, and organizational behavior.

AI increases output but introduces hidden complexity and rework. Without AI-origin awareness, traditional metrics become misleading.

DORA and SPACE remain relevant, but they must be extended to reflect AI-driven code generation, rework, and review noise.

Software Engineering Intelligence Platforms reveal bottlenecks, predict risks, improve team alignment, reduce cognitive load, and support better planning and decision-making.

The right platform depends on the priority: flow (LinearB), business alignment (Jellyfish), developer well-being (Swarmia), strategic clarity (Oobeya), or code-level AI-native intelligence (Typo).