This guide is designed for engineering leaders seeking to understand, evaluate, and select the best software engineering intelligence platforms to drive business outcomes and engineering efficiency. As the software development landscape evolves with the rise of AI, multi-agent workflows, and increasingly complex toolchains, choosing the right platform is critical for aligning engineering work with organizational objectives, improving delivery speed, and maximizing developer satisfaction. The impact of selecting the right software engineering intelligence platform extends beyond engineering teams—it directly influences business outcomes, operational efficiency, and the ability to innovate at scale.

A Software Engineering Intelligence (SEI) platform is an automated tool that aggregates, analyzes, and presents data and insights from the software development process. These platforms unify data from tools such as Git, Jira, CI/CD, code reviews, planning tools, and AI coding workflows to provide engineering leaders with a real-time, predictive understanding of delivery, quality, and developer experience. The best software engineering intelligence platforms synthesize data from tools that engineering teams are already using daily.

When choosing a software engineering intelligence platform, leaders should:

This guide will help leaders choose the right software engineering intelligence platform for their organization.

An engineering intelligence platform aggregates data from repositories, issue trackers, CI/CD, and communication tools. Data integration and analytics are its core: the platform unifies data from a variety of development tools and produces strategic, automated insights across the software development lifecycle. In effect, these platforms act as business intelligence for engineering, converting disparate signals into trend analysis, benchmarks, and prioritized recommendations.

Unlike point solutions, engineering intelligence platforms create a unified view of the development ecosystem. They automatically collect the engineering metrics used to measure effectiveness and efficiency, detect patterns, and surface actionable recommendations. CTOs, VPs of Engineering, and managers use these platforms for real-time decision support.

SEI platforms provide detailed analytics and metrics, offering a clear picture of engineering health, resource investment, operational efficiency, and progress towards strategic goals.


Now that we've covered the general overview of engineering intelligence platforms, let's dive into the specifics of what a Software Engineering Intelligence Platform is and how it functions.

A Software Engineering Intelligence Platform unifies data from Git, Jira, CI/CD, reviews, planning tools, and AI coding workflows to give engineering leaders a real-time, predictive understanding of delivery, quality, and developer experience. It combines comprehensive analytics with data integration across a wide range of existing tools, which supports deeper insight with less manual effort. These capabilities help align engineering work with business goals by connecting engineering activities to organizational objectives and outcomes.

Traditional dashboards and DORA-only tools no longer work in the AI era, where PR volume, rework, model unpredictability, and review noise have become dominant failure modes. Modern intelligence platforms must analyze diffs, detect AI-origin code behavior, forecast delivery risks, identify review bottlenecks, and explain why teams slow down, not just show charts. This guide outlines what the category should deliver in 2026, where competitors fall short, and how leaders can evaluate platforms with accuracy, depth, and time-to-value in mind.

A Software Engineering Intelligence Platform is an integrated system that consolidates signals from code, reviews, releases, sprints, incidents, AI coding tools, and developer communication channels to provide a unified, real-time understanding of engineering performance. SEI platforms support business goals by aligning engineering work with organizational objectives, and they track project health and progress so that teams stay on course and risks are identified early.

In 2026, the definition has evolved. Intelligence platforms now:

Competitors describe intelligence platforms in fragments (delivery, resources, or DevEx), but the market expectation has shifted. A true Software Engineering Intelligence Platform must give leaders visibility across the entire SDLC and the ability to act on those insights without manual interpretation.

Engineering intelligence platforms produce measurable outcomes. They improve delivery speed, code quality, and developer satisfaction. Core benefits include:

SEI platforms enable engineering leaders to manage teams based on data rather than instincts alone, providing detailed analytics and metrics that help in tracking progress towards strategic goals.

These platforms move engineering management from intuition to proactive, data-driven leadership. They enable optimization, prevent issues, and demonstrate development ROI clearly. Software engineering intelligence platforms aim to solve engineering's black box problem by illuminating the entire software delivery lifecycle.

Now that we've defined what an SEI platform is, let's explore why these platforms are essential in the modern engineering landscape.

The engineering landscape has shifted. AI-assisted development, multi-agent workflows, and code generation have introduced:

Traditional analytics frameworks cannot interpret these new signals. A 2026 Software Engineering Intelligence Platform must surface:

These are the gaps competitors struggle to interpret consistently, and they represent the new baseline for modern engineering intelligence.

As we understand the importance of these platforms, let's examine the key benefits they offer to engineering organizations.

Adopting an engineering intelligence platform delivers a transformative impact on the software development process. By centralizing data from across the software development lifecycle, these platforms empower software development teams to make smarter, faster decisions rooted in real-time, data-driven insights.

Software engineering intelligence tools elevate developer productivity by:

This not only enhances code quality but also fosters a culture of continuous improvement, where teams are equipped to adapt and optimize their development process in response to evolving business needs.

Collaboration is also strengthened, as engineering intelligence platforms break down silos between teams, stakeholders, and executives. By aligning everyone around shared engineering goals and business outcomes, organizations can ensure that engineering efforts are always in sync with strategic objectives.

Ultimately, leveraging an engineering intelligence platform drives operational efficiency, supports data-driven decision making, and enables engineering organizations to deliver high-quality software with greater confidence and speed.

With these benefits in mind, let's move on to the essential criteria for evaluating engineering intelligence platforms.

A best-in-class platform should score well across integrations, analytics, customization, AI features, collaboration, automation, and security. Key features to look for include:

The priority of each varies by organizational context.

Use a weighted scoring matrix that reflects your needs:

Include stakeholders across roles to ensure the platform meets both daily workflow and strategic visibility requirements. Compatibility with existing tools like Jira, GitHub, and GitLab is also crucial for ensuring efficient project management and seamless workflows.
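The weighted scoring matrix mentioned above can be sketched in a few lines of Python. This is only an illustration: the criteria names follow the seven evaluation areas listed earlier, while the weights, the 1-to-5 scale, and the platform names are hypothetical placeholders that each organization would replace with its own.

```python
# Hypothetical weights per evaluation criterion; adjust to your context.
# They are expected to sum to 1.0.
WEIGHTS = {
    "integrations": 0.25,
    "analytics": 0.20,
    "ai_features": 0.20,
    "customization": 0.10,
    "collaboration": 0.05,
    "automation": 0.10,
    "security": 0.10,
}


def weighted_score(scores, weights=WEIGHTS):
    """Return the weighted average of per-criterion scores (assumed 1-5 scale)."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


def rank_platforms(candidates, weights=WEIGHTS):
    """Return (platform, score) pairs sorted from highest to lowest score."""
    scored = [(name, weighted_score(s, weights)) for name, s in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Placeholder platforms and scores, for illustration only.
    candidates = {
        "Platform A": {c: 4 for c in WEIGHTS},
        "Platform B": {**{c: 3 for c in WEIGHTS}, "security": 5},
    }
    for name, score in rank_platforms(candidates):
        print(f"{name}: {score:.2f}")
```

Collecting scores from several stakeholders and averaging them per criterion before ranking keeps the result from reflecting a single role's priorities.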

Customization and extensibility are important features of SEI platforms, allowing teams to tailor dashboards and metrics to their specific needs.

With evaluation criteria established, let's review how modern platforms differ and what to look for in the competitive landscape.

The engineering intelligence category has matured, but platforms vary widely in depth and accuracy.

Common competitor gaps include:


Next, let's discuss the importance of integration with developer tools and workflows.

Seamless integrations are foundational. Data integration is critical for software engineering intelligence platforms, enabling them to unify data from various development tools, project management platforms, and collaboration tools to provide a comprehensive view of engineering activities and performance metrics. Platforms must aggregate data from:

Look for:

This cross-tool correlation enables sophisticated analyses that justify the investment. SEI platforms synthesize data from tools that engineering teams are already using daily, alleviating the burden of manually bringing together data from various platforms.
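As a rough illustration of cross-tool correlation, the sketch below links pull requests to issue-tracker items by looking for issue keys (for example `ENG-12`) in PR titles and branch names. The record shapes (`id`, `title`, `branch`, `key`) are assumptions made for this example and do not reflect any particular vendor's API or data model.

```python
import re
from collections import defaultdict

# Jira-style issue keys such as "ENG-12": a project prefix, a hyphen, a number.
ISSUE_KEY = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")


def link_prs_to_issues(pull_requests, issues):
    """Map each known issue key to the IDs of the PRs that mention it.

    Returns (linked, unlinked): a dict of issue key -> list of PR ids,
    and a list of PR ids that mention no known issue.
    """
    known = {issue["key"] for issue in issues}
    linked = defaultdict(list)
    unlinked = []
    for pr in pull_requests:
        # Branch names are often lowercase, so normalize before matching.
        text = f'{pr.get("title", "")} {pr.get("branch", "")}'.upper()
        # Keep only keys that exist in the tracker to avoid false matches
        # on strings like "UTF-8".
        keys = sorted({k for k in ISSUE_KEY.findall(text) if k in known})
        if not keys:
            unlinked.append(pr["id"])
        for key in keys:
            linked[key].append(pr["id"])
    return dict(linked), unlinked
```

The share of unlinked PRs is itself a useful signal: a high value suggests that work is happening outside the planning tool, which weakens any downstream analysis that depends on the link.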

With integrations in place, let's examine the analytics capabilities that set leading platforms apart.

Real-time analytics surface current metrics (cycle time, deployment frequency, PR activity). Leaders can act immediately rather than relying on lagging reports. Comprehensive analytics and advanced data analytics are crucial for providing actionable insights into code quality, team performance, and process efficiency, enabling organizations to optimize their workflows. Predictive analytics use models to forecast delivery risks, resource constraints, and quality issues.

Contrast approaches:

Predictive analytics deliver preemptive insight into delivery risks and opportunities.

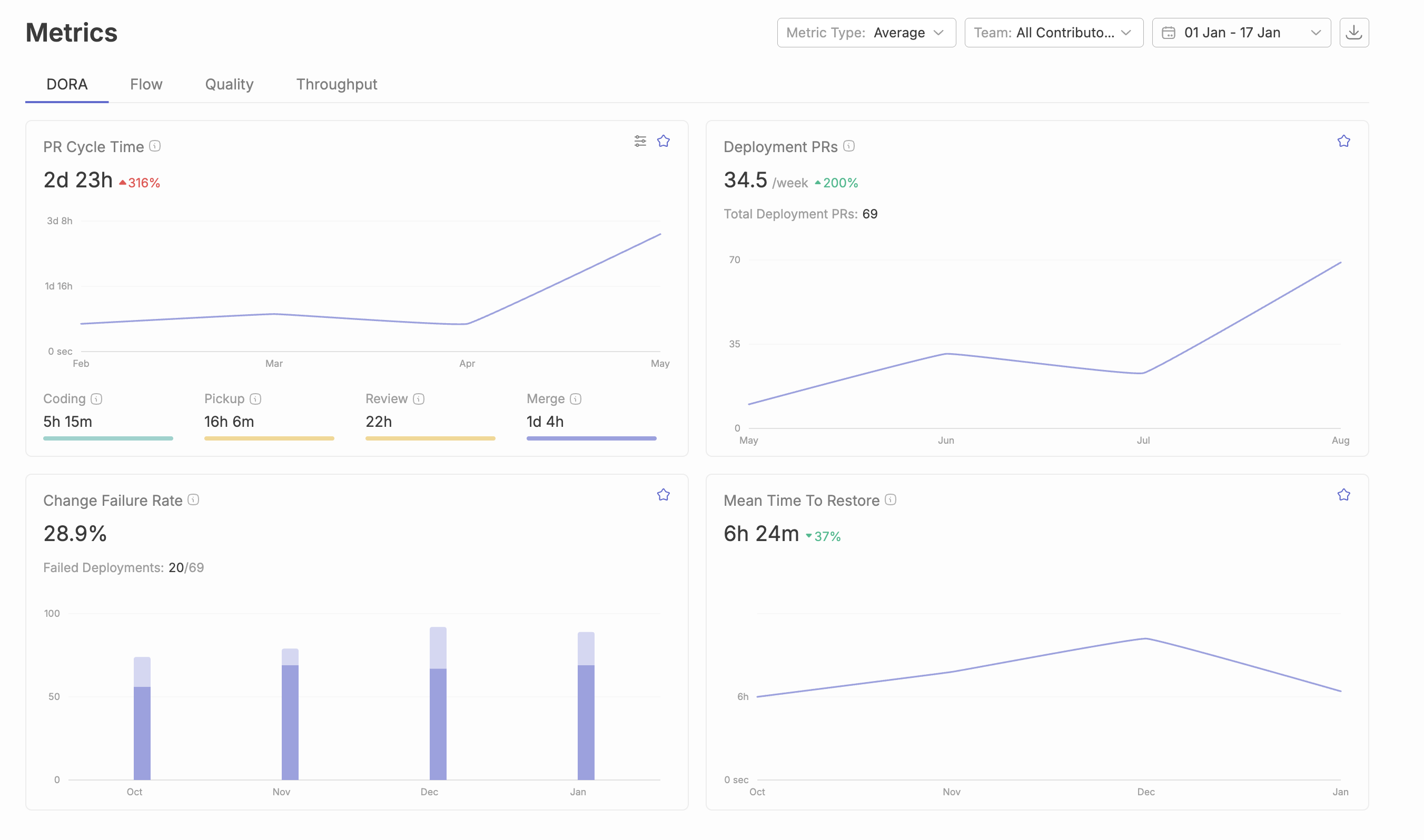

Comprehensive reporting capabilities, including support for DORA metrics, cycle time, and other key performance indicators, are essential for tracking the efficiency and effectiveness of your software engineering processes.
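To make these indicators concrete, here is a minimal sketch that computes three of them (deployment frequency, change failure rate, and median lead time) from a list of deployment records. The field names `commit_at`, `deployed_at`, and `failed` are assumptions for the example; a real platform would derive them from CI/CD and incident data.

```python
from datetime import datetime, timedelta
from statistics import median


def delivery_metrics(deployments, window_days):
    """Summarize deployments observed over a window of `window_days` days.

    Each deployment is a dict with:
      commit_at   (datetime) - when the change was committed
      deployed_at (datetime) - when it reached production
      failed      (bool)     - whether it caused a failure in production
    """
    if not deployments:
        raise ValueError("at least one deployment is required")
    if window_days <= 0:
        raise ValueError("window_days must be positive")

    lead_times_hours = [
        (d["deployed_at"] - d["commit_at"]).total_seconds() / 3600
        for d in deployments
    ]
    failures = sum(1 for d in deployments if d["failed"])

    return {
        "deployments_per_day": len(deployments) / window_days,
        "change_failure_rate": failures / len(deployments),
        "median_lead_time_hours": median(lead_times_hours),
    }


if __name__ == "__main__":
    start = datetime(2026, 1, 5, 9, 0)
    sample = [
        {"commit_at": start, "deployed_at": start + timedelta(hours=6), "failed": False},
        {"commit_at": start, "deployed_at": start + timedelta(hours=30), "failed": True},
    ]
    print(delivery_metrics(sample, window_days=7))
```

The median is used for lead time rather than the mean because a few long-running changes would otherwise dominate the figure.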

With analytics covered, let's look at how AI-native intelligence is becoming the new standard for SEI platforms.

This is where the competitive landscape is widening.

A Software Engineering Intelligence Platform in 2026 must leverage advanced tools and workflow automation to enhance engineering productivity, enabling teams to streamline processes, reduce manual work, and make data-driven decisions.

Key AI capabilities include:

AI coding assistants like GitHub Copilot and Tabnine use LLMs for real-time code generation and refactoring. These assistants are reported to increase developer efficiency by up to 20% by offering real-time coding suggestions and automating repetitive tasks.

Most platforms today still rely on surface-level Git events. They do not understand code, model behavior, or multi-agent interactions. This is the defining gap for category leaders. Modern SEI platforms are evolving into autonomous development partners by using agentic AI to analyze repositories and provide predictive insights.

With AI-native intelligence in mind, let's explore how customizable dashboards and reporting support diverse stakeholder needs.

Dashboards must serve diverse roles. Engineering managers need team velocity and code-quality views. CTOs need strategic metrics tied to business outcomes. Individual contributors want personal workflow insights. Comprehensive analytics are essential to deliver detailed insights on code quality, team performance, and process efficiency, while the ability to focus on relevant metrics ensures each stakeholder can customize dashboards to highlight the KPIs that matter most to their goals.

Effective customization includes:

Balance standardization for consistent measurement with customization for role-specific relevance.

Next, let's see how AI-powered code insights and workflow optimization further enhance engineering outcomes.

AI features automate code reviews, detect code smells, and benchmark practices against industry data. Workflow automation and productivity metrics are leveraged to optimize engineering processes, streamline pull request management, and evaluate team effectiveness. They surface contextual recommendations for quality, security, and performance. Advanced platforms analyze commits, review feedback, and deployment outcomes to propose workflow changes.

Typo’s friction measurement for AI coding tools exemplifies research-backed methods to measure tool impact without disrupting workflows. AI-powered review and analysis speed delivery, improve code quality, and reduce manual review overhead.

Automated documentation tools generate API references and architecture summaries as code changes, reducing technical debt.

With workflow optimization in place, let's discuss how collaboration and communication features support distributed teams.

Integration with Slack, Teams, and meeting platforms consolidates context. Good platforms aggregate conversations and provide filtered alerts, automated summaries, and meeting recaps. Collaboration tools like Slack and Google Calendar play a crucial role in improving team productivity and team health by streamlining communication, supporting engagement, and enabling better tracking and compliance in software development workflows.

Key capabilities:

These features are particularly valuable for distributed or cross-functional teams. SEI platforms enable engineering leaders to make data-informed decisions that will drive positive business outcomes and foster consistent collaboration between teams throughout the CI/CD process.

With collaboration features established, let's look at how automation and process streamlining further improve efficiency.

Automation reduces manual work and enforces consistency. Workflow automation and advanced tools play a key role in improving engineering efficiency by streamlining repetitive tasks, reducing context switching, and enabling teams to focus on high-value work. Programmable workflows handle reporting, reminders, and metric tracking. Effective automation accelerates handoffs, flags incomplete work, and optimizes PR review cycles.

High-impact automations include:

The best automation is unobtrusive yet improves reliability and efficiency.

Real-time alerts for risky work such as large pull requests help reduce the change failure rate. Real-time analytics from software engineering intelligence platforms identify risky work patterns or reviewer overload before they delay releases.
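A simple version of such alerting might look like the sketch below, which flags oversized pull requests and overloaded reviewers. Both thresholds and the PR record fields (`number`, `additions`, `deletions`, `reviewers`) are hypothetical and would need tuning against a team's own history.

```python
from collections import Counter

# Hypothetical thresholds; calibrate them against your own data.
MAX_CHANGED_LINES = 400
MAX_OPEN_REVIEWS = 5


def find_risks(open_prs):
    """Return human-readable alerts for large PRs and overloaded reviewers."""
    alerts = []

    for pr in open_prs:
        size = pr["additions"] + pr["deletions"]
        if size > MAX_CHANGED_LINES:
            alerts.append(
                f"PR #{pr['number']}: {size} changed lines "
                f"exceeds the limit of {MAX_CHANGED_LINES}"
            )

    # Count how many open PRs are waiting on each reviewer.
    load = Counter(reviewer for pr in open_prs for reviewer in pr["reviewers"])
    for reviewer, count in sorted(load.items()):
        if count > MAX_OPEN_REVIEWS:
            alerts.append(
                f"Reviewer {reviewer}: {count} open reviews "
                f"exceeds the limit of {MAX_OPEN_REVIEWS}"
            )

    return alerts
```

Running a check like this on each PR event, instead of in a weekly report, is what turns the metric into an early warning.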

With automation in place, let's address the critical requirements for security, compliance, and data privacy.

Enterprise adoption demands robust security, compliance, and privacy. Look for:

Evaluate data retention, anonymization options, user consent controls, and geographic residency support. Strong compliance capabilities are expected in enterprise-grade platforms. Assess against your regulatory and risk profile.
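One common way to implement an anonymization option is to replace developer identifiers with keyed hashes before metrics are stored. The sketch below uses HMAC-SHA256 from the Python standard library. It illustrates the general technique only, not any specific platform's method, and the secret key shown is a placeholder.

```python
import hashlib
import hmac


def pseudonymize(identifier, secret_key):
    """Return a stable pseudonym for an identifier such as an email address.

    The same identifier and key always give the same pseudonym, so metrics
    can still be grouped per person without storing who the person is.
    `secret_key` must be bytes and should be kept outside the analytics store.
    """
    normalized = identifier.strip().lower().encode("utf-8")
    digest = hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()
    return digest[:16]  # shortened for readability in dashboards


if __name__ == "__main__":
    key = b"replace-with-a-managed-secret"  # placeholder, not a real key
    print(pseudonymize("dev@example.com", key))
```

A keyed hash is used here instead of a plain hash because, without the key, common email addresses could be hashed by anyone and matched against the stored values.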

With security and compliance covered, let's explore how data-driven decision making is enabled by SEI platforms.

Data-driven decision making is at the heart of high-performing software development teams, and engineering intelligence platforms are the catalysts that make it possible. By aggregating and analyzing data from version control systems, project management tools, and communication tools, these platforms provide engineering leaders with a holistic view of team performance, productivity, and operational efficiency.

Engineering intelligence platforms leverage machine learning and predictive analysis to forecast potential risks and opportunities, enabling teams to proactively address challenges and capitalize on strengths. This approach ensures that every decision is backed by quantitative and qualitative data, reducing guesswork and aligning engineering processes with business outcomes.

With data-driven decision making, software development teams can continuously refine their workflows, improve software quality, and achieve greater alignment with organizational goals. The result is a more agile, efficient, and resilient engineering organization that is equipped to thrive in a rapidly changing software development landscape.

Now, let's discuss how to align platform selection with your organization's goals.

Align platform selection with business strategy through a structured, stakeholder-inclusive process. This maximizes ROI and adoption. It is essential to ensure the platform aligns with business goals and helps optimize engineering investments by demonstrating how engineering work supports organizational objectives and delivers measurable value.

Recommended steps:

Software engineering intelligence platforms provide real-time insights that empower teams to make swift, informed decisions and remain agile in response to evolving project demands.

With alignment strategies in place, let's move on to measuring the impact of your chosen platform.

Track metrics that link development activity to business outcomes. Prove platform value to executives. Engineering metrics, productivity metrics, workflow metrics, and quantitative data are essential for measuring the impact of engineering work, identifying inefficiencies, and driving continuous improvement. Core measurements include:

Measure leading indicators alongside lagging indicators. Tie metrics to customer satisfaction, revenue impact, or competitive advantage. Typo’s ROI approach links delivery improvements with developer NPS to show comprehensive value.

Next, let's look at the unique metrics that only a software engineering intelligence platform can provide.

Traditional SDLC metrics aren’t enough. To truly measure and improve engineering effectiveness and engineering efficiency, software engineering intelligence platforms leverage advanced tools and data analytics to surface deeper insights. Intelligence platforms must surface deeper metrics such as:

Competitor blogs rarely cover these metrics, even though they define modern engineering performance.

SEI platforms provide a comprehensive view of software engineering processes, enabling teams to enhance efficiency and enforce quality throughout the development process.

With metrics in mind, let's discuss implementation considerations and how to achieve time to value.

Plan implementation with realistic timelines and a phased rollout. Demonstrate quick wins while building toward full adoption.

Typical timeline:

Expect initial analytics and workflow improvements within weeks. Significant productivity and cultural shifts take months.

Prerequisites:

Start small—pilot with one team or a specific metric. Prove value, then expand. Prioritize developer experience and workflow fit over exhaustive feature activation.

With implementation planned, let's define what a full-featured software engineering intelligence platform should provide.

Before exploring vendors, leaders should establish a clear definition of what “complete” intelligence looks like. This should include the need for advanced tools, comprehensive analytics, robust data integration, and the ability to analyze data from various sources to deliver actionable insights.

A comprehensive platform should provide:

Advanced SEI platforms also leverage predictive analytics to forecast potential challenges and suggest ways to optimize resource allocation.

With a clear definition in place, let's see how Typo approaches engineering intelligence.

Typo positions itself as an AI-native engineering intelligence platform for leaders at high-growth software companies. It aggregates real-time SDLC data, applies LLM-powered code and workflow analysis, and benchmarks performance to produce actionable insights tied to business outcomes. Typo is also an engineering management platform, providing comprehensive visibility, data-driven decision-making, and alignment between engineering teams and business objectives.

Other notable software engineering intelligence platforms in the space include:

Typo’s friction measurement for AI coding tools is research-backed and survey-free. Organizations can measure effects of tools like GitHub Copilot without interrupting developer workflows. The platform emphasizes developer-first onboarding to drive adoption while delivering executive visibility and measurable ROI from the first week.

Key differentiators include deep toolchain integrations, advanced AI insights beyond traditional metrics, and a focus on both developer experience and delivery performance.

With an understanding of Typo's approach, let's discuss how to evaluate SEI platforms during a trial.

Most leaders underutilize trial periods. A structured evaluation helps reveal real strengths and weaknesses.

During a trial, validate:

It's essential that software engineering intelligence platforms can analyze data from various sources and track relevant metrics that align with your team's goals.

A Software Engineering Intelligence Platform must prove its intelligence during the trial, not only after a long implementation. The best SEI platforms synthesize data from existing tools that engineering teams are already using, alleviating the burden of manual data collection.

With trial evaluation strategies in place, let's address some frequently asked questions.

What features should leaders prioritize in an engineering intelligence platform?

Leaders should prioritize real-time analytics and seamless integrations with existing tools such as Jira, GitHub, and GitLab, including project management platforms. Look for integration with collaboration tools (e.g., Slack, Google Calendar) to improve communication and compliance, and for workflow automation to reduce manual work. AI-driven insights, customizable dashboards for different stakeholders, and enterprise-grade security and compliance round out the list.

How do I assess integration needs for my existing development stack?

Inventory your primary tools (repos, CI/CD, PM, communication). Prioritize platforms offering turnkey connectors for those systems. Verify bi-directional sync and unified analytics across the stack.

What is the typical timeline for seeing operational improvements after deployment?

Teams often see actionable analytics and workflow improvements within weeks. Major productivity gains appear in two months. Broader ROI and cultural change develop over several months.

How can engineering intelligence platforms improve developer experience without micromanagement?

Effective platforms focus on team-level insights and workflow friction, not individual surveillance. They enable process improvements and tools that remove blockers while preserving developer autonomy.

What role does AI play in modern engineering intelligence solutions?

AI drives predictive alerts, automated code review and quality checks, workflow optimization recommendations, and objective measurement of tool effectiveness. It enables deeper, less manual insight into productivity and quality.

With these FAQs addressed, let's conclude with a summary of why SEI platforms are indispensable for modern engineering teams.

In summary, software engineering intelligence platforms have become indispensable for modern engineering teams aiming to optimize their software development processes and achieve superior business outcomes. By delivering data-driven insights, enhancing collaboration, and enabling predictive analysis, these platforms empower engineering leaders to drive continuous improvement, boost developer productivity, and elevate software quality.

As the demands of software engineering continue to evolve, the role of software engineering intelligence tools will only become more critical. Modern engineering teams that embrace these platforms gain a competitive edge, leveraging actionable data to navigate complexity and deliver high-quality software efficiently. By adopting a data-driven approach to decision making and harnessing the full capabilities of engineering intelligence, organizations can unlock new levels of performance, innovation, and success in the software development arena.